What's in the RedPajama-Data-1T LLM training set

Plus: Data analysis with SQLite and Python for PyCon 2023

In this newsletter:

What's in the RedPajama-Data-1T LLM training set

Data analysis with SQLite and Python for PyCon 2023

Plus 8 links and 1 quotation

What's in the RedPajama-Data-1T LLM training set - 2023-04-17

RedPajama is "a project to create leading open-source models, starts by reproducing LLaMA training dataset of over 1.2 trillion tokens". It's a collaboration between Together, Ontocord.ai, ETH DS3Lab, Stanford CRFM, Hazy Research, and MILA Québec AI Institute.

They just announced their first release: RedPajama-Data-1T, a 1.2 trillion token dataset modelled on the training data described in the original LLaMA paper.

The full dataset is 2.67TB, so I decided not to try and download the whole thing! Here's what I've figured out about it so far.
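A note on units: the 2.67TB figure only matches if it is read as binary terabytes. Here is a quick check, using the byte total that the script later in this post reports from the Content-Length headers:

```python
# Total bytes, as summed from the Content-Length headers later in this post
total_bytes = 2936454998167

tib = total_bytes / 1024**4  # binary terabytes (TiB)
tb = total_bytes / 1000**4   # decimal terabytes (TB)

print(f"{tib:.2f} TiB, {tb:.2f} TB")
```

That comes out as 2.67 TiB, or about 2.94 TB in decimal units, which is the number to compare against the capacity printed on a disk.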

How to download it

The data is split across 2,084 different files. These are listed in a plain text file here:

https://data.together.xyz/redpajama-data-1T/v1.0.0/urls.txt

The dataset card suggests you could download them all like this - assuming you have 2.67TB of disk space and bandwidth to spare:

wget -i https://data.together.xyz/redpajama-data-1T/v1.0.0/urls.txt

I prompted GPT-4 a few times to write a quick Python script to run a HEAD request against each URL in that file instead, in order to collect the Content-Length and calculate the total size of the data. My script is at the bottom of this post.

I then processed the size data into a format suitable for loading into Datasette Lite.

Exploring the size data

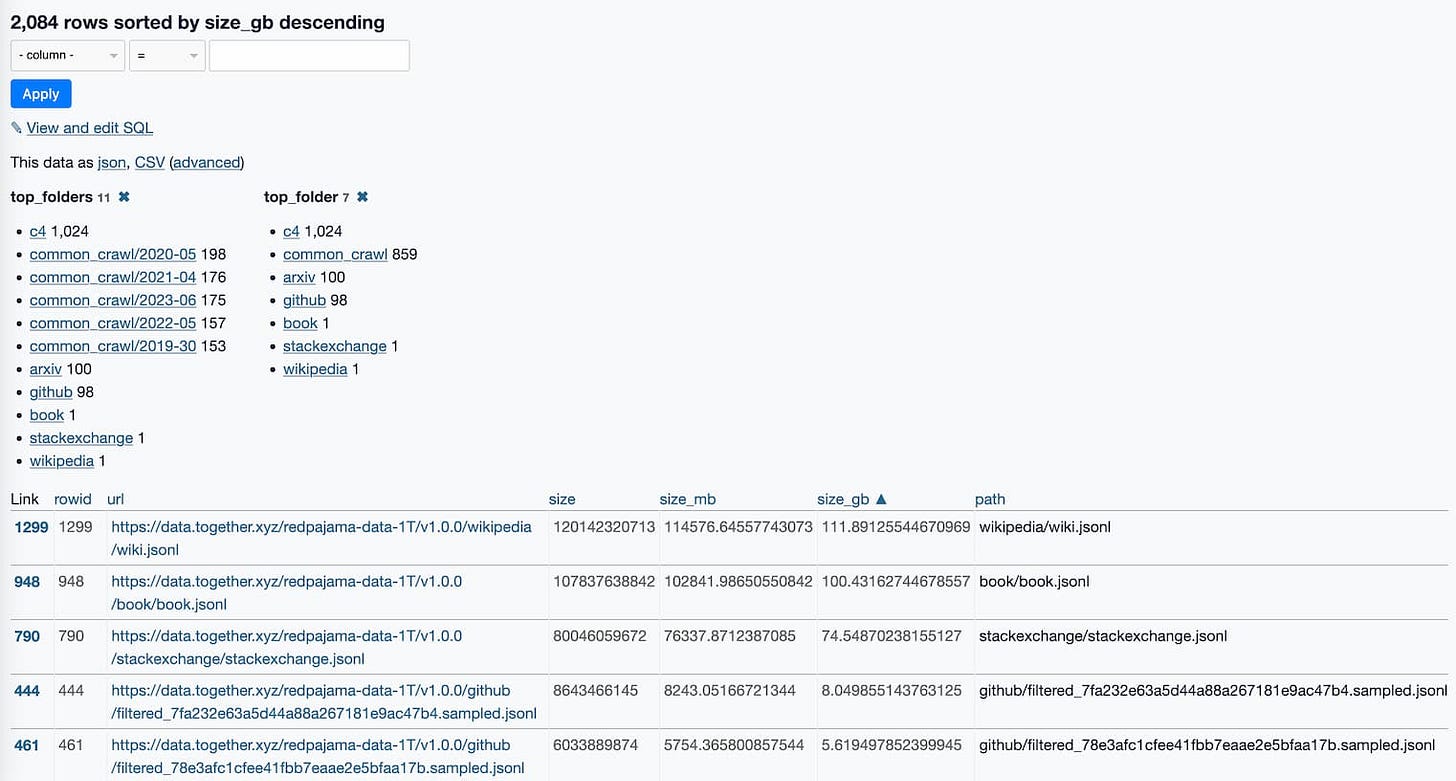

Here's a link to a Datasette Lite page showing all 2,084 files, sorted by size and with some useful facets.

This is already revealing a lot about the data.

The top_folders facet inspired me to run this SQL query:

    select
      top_folders,
      cast (sum(size_gb) as integer) as total_gb,
      count(*) as num_files
    from raw
    group by top_folders
    order by sum(size_gb) desc

Here are the results:

| top_folders | total_gb | num_files |
|---|---|---|
| c4 | 806 | 1024 |
| common_crawl/2023-06 | 288 | 175 |
| common_crawl/2020-05 | 286 | 198 |
| common_crawl/2021-04 | 276 | 176 |
| common_crawl/2022-05 | 251 | 157 |
| common_crawl/2019-30 | 237 | 153 |
| github | 212 | 98 |
| wikipedia | 111 | 1 |
| book | 100 | 1 |
| arxiv | 87 | 100 |
| stackexchange | 74 | 1 |
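To try the same query outside Datasette Lite, here is a minimal sketch using Python's built-in sqlite3 module. The five rows are made up for illustration and the table has only the two columns the query needs; the real raw table has 2,084 rows and more columns.

```python
import sqlite3

# Made-up sample rows (top_folders, size_gb); not the real data
rows = [
    ("c4", 0.8),
    ("c4", 0.7),
    ("common_crawl/2019-30", 1.6),
    ("common_crawl/2019-30", 1.5),
    ("github", 2.2),
]

conn = sqlite3.connect(":memory:")
conn.execute("create table raw (top_folders text, size_gb real)")
conn.executemany("insert into raw values (?, ?)", rows)

query = """
select
  top_folders,
  cast(sum(size_gb) as integer) as total_gb,
  count(*) as num_files
from raw
group by top_folders
order by sum(size_gb) desc
"""
results = conn.execute(query).fetchall()
for top_folders, total_gb, num_files in results:
    print(top_folders, total_gb, num_files)
```

Note that cast(... as integer) truncates rather than rounds, so each per-folder total is rounded down and the total_gb column sums to slightly less than the overall size.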

There's a lot of Common Crawl data in there!

The RedPajama announcement says:

CommonCrawl: Five dumps of CommonCrawl, processed using the CCNet pipeline, and filtered via several quality filters including a linear classifier that selects for Wikipedia-like pages.

C4: Standard C4 dataset

It looks like they used CommonCrawl from 5 different dates, from 2019-30 (30? That's not a valid month - looks like it's a week number) to 2023-06. I wonder if they de-duplicated content within those different crawls?
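If those labels really are year-plus-week-number pairs, they can be turned into calendar dates. This sketch assumes they are ISO 8601 week numbers, which is a guess on my part and not something the dataset card confirms; crawl_week_start is a hypothetical helper name.

```python
from datetime import date

def crawl_week_start(crawl_id: str) -> date:
    """Return the Monday of the ISO week named by a 'YYYY-WW' label."""
    year, week = (int(part) for part in crawl_id.split("-"))
    # Assumption: WW is an ISO 8601 week number (requires Python 3.8+)
    return date.fromisocalendar(year, week, 1)

for crawl_id in ["2019-30", "2020-05", "2021-04", "2022-05", "2023-06"]:
    print(crawl_id, crawl_week_start(crawl_id))
```

Under that assumption, 2019-30 corresponds to the week starting 22 July 2019 and 2023-06 to the week starting 6 February 2023.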

C4 is "a colossal, cleaned version of Common Crawl's web crawl corpus" - so yet another copy of Common Crawl, cleaned in a different way.

I downloaded the first 100MB of that 100GB book.jsonl file - the first 300 rows in it are all full-text books from Project Gutenberg, starting with The Bible Both Testaments King James Version from 1611.
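One way to grab just the start of a large file is an HTTP Range request. This is a sketch using only the standard library, not necessarily how I fetched it; fetch_prefix and complete_records are hypothetical helper names, and whether the server honors Range is an assumption. A prefix cut at an arbitrary byte will usually end mid-record, so the second helper drops the trailing partial line.

```python
import json
import urllib.request

def fetch_prefix(url: str, num_bytes: int) -> bytes:
    """Request only the first num_bytes of a file using an HTTP Range header."""
    request = urllib.request.Request(
        url, headers={"Range": f"bytes=0-{num_bytes - 1}"}
    )
    with urllib.request.urlopen(request) as response:
        # read(num_bytes) caps the download even if the server ignores Range
        return response.read(num_bytes)

def complete_records(chunk: bytes) -> list:
    """Parse the JSONL records in chunk, dropping a trailing partial line."""
    lines = chunk.split(b"\n")[:-1]  # the last piece is empty or cut off
    return [json.loads(line) for line in lines if line.strip()]

# Offline demonstration with a tiny made-up chunk that is cut off mid-record
sample = b'{"text": "one"}\n{"text": "two"}\n{"text": "thr'
records = complete_records(sample)
print(records)
```

fetch_prefix holds the whole prefix in memory, which is fine for 100MB but would want streaming to disk for anything much larger.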

The data all appears to be in JSONL format - newline-delimited JSON. Different files I looked at had different shapes, though a common pattern was a "text" key containing the text and a "meta" key containing a dictionary of metadata.

For example, the first line of book.jsonl looks like this (after pretty-printing using jq):

    {
      "meta": {
        "short_book_title": "The Bible Both Testaments King James Version",
        "publication_date": 1611,
        "url": "http://www.gutenberg.org/ebooks/10"
      },
      "text": "\n\nThe Old Testament of the King James Version of the Bible\n..."
    }

There are more details on the composition of the dataset in the dataset card.
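Since different files have different shapes, a quick way to survey one is to count the distinct sets of top-level keys across its lines. This is a minimal sketch: key_shapes is a hypothetical helper and the sample lines are made up, reusing only the "text" and "meta" keys described above.

```python
import json
from collections import Counter

def key_shapes(lines):
    """Count the distinct sets of top-level keys across JSONL lines."""
    shapes = Counter()
    for line in lines:
        if line.strip():
            record = json.loads(line)
            shapes[tuple(sorted(record))] += 1
    return shapes

# Made-up sample lines; with a real file, pass an open file handle instead
sample_lines = [
    '{"text": "first", "meta": {"url": "https://example.com/1"}}',
    '{"text": "second", "meta": {"url": "https://example.com/2"}}',
    '{"text": "third"}',
]
shapes = key_shapes(sample_lines)
print(shapes)
```

Because it iterates line by line, the same function works on a partial download as long as the final, possibly truncated, line is skipped first.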

My Python script

I wrote a quick Python script to do the next best thing: run a HEAD request against each URL to figure out the total size of the data.

I prompted GPT-4 a few times, and came up with this:

    import asyncio  # already loaded in the asyncio REPL; needed when run as a script
    import httpx
    from tqdm import tqdm

    async def get_sizes(urls):
        sizes = {}
        async def fetch_size(url):
            try:
                response = await client.head(url)
                content_length = response.headers.get('Content-Length')
                if content_length is not None:
                    return url, int(content_length)
            except Exception as e:
                print(f"Error while processing URL '{url}': {e}")
            # Fall back to 0 if the request failed or the header was missing
            return url, 0
        async with httpx.AsyncClient() as client:
            # Create a progress bar using tqdm
            with tqdm(total=len(urls), desc="Fetching sizes", unit="url") as pbar:
                # Use asyncio.as_completed to process results as they arrive
                coros = [fetch_size(url) for url in urls]
                for coro in asyncio.as_completed(coros):
                    url, size = await coro
                    sizes[url] = size
                    # Update the progress bar
                    pbar.update(1)
        return sizes

I pasted this into python3 -m asyncio - the -m asyncio flag ensures that top-level await can be used in the interactive interpreter - and ran the following:

    >>> urls = httpx.get("https://data.together.xyz/redpajama-data-1T/v1.0.0/urls.txt").text.splitlines()
    >>> sizes = await get_sizes(urls)
    Fetching sizes: 100%|██████████████████████████████████████| 2084/2084 [00:08<00:00, 256.60url/s]
    >>> sum(sizes.values())
    2936454998167

Then I added the following to turn the data into something that would work with Datasette Lite:

    import json

    output = []
    for url, size in sizes.items():
        # Everything after the version prefix, e.g. "folder/file"
        path = url.split('/redpajama-data-1T/v1.0.0/')[1]
        output.append({
            "url": url,
            "size": size,
            "size_mb": size / 1024 / 1024,
            "size_gb": size / 1024 / 1024 / 1024,
            "path": path,
            "top_folder": path.split("/")[0],
            "top_folders": path.rsplit("/", 1)[0],
        })
    with open("/tmp/sizes.json", "w") as fp:
        fp.write(json.dumps(output, indent=2))

I pasted the result into a Gist.
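The difference between top_folder and top_folders is easy to miss: split("/")[0] keeps only the first path segment, while rsplit("/", 1)[0] keeps everything except the file name. Here is a small sketch that reuses the same expressions as the loop above; folder_fields is a hypothetical helper and the first file name is made up.

```python
def folder_fields(url: str) -> dict:
    """Derive path, top_folder and top_folders the same way as the loop above."""
    path = url.split("/redpajama-data-1T/v1.0.0/")[1]
    return {
        "path": path,
        "top_folder": path.split("/")[0],
        "top_folders": path.rsplit("/", 1)[0],
    }

base = "https://data.together.xyz/redpajama-data-1T/v1.0.0/"
# Hypothetical file names, chosen only to show nested vs. single-level paths
nested = folder_fields(base + "common_crawl/2019-30/example.jsonl.zst")
single = folder_fields(base + "book/book.jsonl")
print(nested)
print(single)
```

For the nested path the two fields differ ("common_crawl" versus "common_crawl/2019-30"), which is what lets the top_folders facet separate the individual Common Crawl dumps.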

Data analysis with SQLite and Python for PyCon 2023 - 2023-04-20

I'm at PyCon 2023 in Salt Lake City this week.

Yesterday afternoon I presented a three-hour tutorial on Data Analysis with SQLite and Python. I think it went well!

I covered basics of using SQLite in Python through the sqlite3 module in the standard library, and then expanded that to demonstrate sqlite-utils, Datasette and even spent a bit of time on Datasette Lite.

One of the things I learned from the Carpentries teacher training a while ago is that a really great way to run a workshop like this is to have detailed, extensive notes available and then to work through those, slowly, at the front of the room.

I don't know if I've quite nailed the "slowly" part, but I do find that having an extensive pre-prepared handout really helps keep things on track. It also gives attendees a chance to work at their own pace.

You can find the full 9-page workshop handout I prepared here:

sqlite-tutorial-pycon-2023.readthedocs.io

I built the handout site using Sphinx and Markdown, with myst-parser and sphinx_rtd_theme and hosted on Read the Docs. The underlying GitHub repository is here:

github.com/simonw/sqlite-tutorial-pycon-2023

I'm hoping to recycle some of the material from the tutorial to extend Datasette's official tutorial series - I find that presenting workshops is an excellent opportunity to bulk up Datasette's own documentation.

The Advanced SQL section in particular would benefit from being extended. It covers aggregations, subqueries, CTEs, SQLite's JSON features and window functions - each of which could easily be expanded into its own full tutorial.
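As a taste of two of those topics, here is a minimal sketch combining a CTE with a window function through the sqlite3 module. It is not taken from the handout; the files table and its columns are hypothetical, and the three rows reuse figures from the RedPajama size table earlier in this newsletter. Window functions need SQLite 3.25 or later.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("create table files (folder text, size_gb integer)")
conn.executemany(
    "insert into files values (?, ?)",
    [("c4", 806), ("github", 212), ("arxiv", 87)],
)

# The CTE names an intermediate result; the window function adds a running total
query = """
with ranked as (
  select
    folder,
    size_gb,
    sum(size_gb) over (order by size_gb desc) as running_total
  from files
)
select folder, size_gb, running_total from ranked order by size_gb desc
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```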

Link 2023-04-16 How I Used Stable Diffusion and Dreambooth to Create A Painted Portrait of My Dog: I like posts like this that go into detail in terms of how much work it takes to deliberately get the kind of result you really want using generative AI tools. Jake Dahn trained a Dreambooth model from 40 photos of Queso - his photogenic Golden Retriever - using Replicate, then gathered the prompts from ten images he liked on Lexica and generated over 1,000 different candidate images, picked his favourite, used Draw Things img2img resizing to expand the image beyond the initial crop, then Automatic1111 inpainting to tweak the ears, then Real-ESRGAN 4x+ to upscale for the final print.

Link 2023-04-17 MiniGPT-4: An incredible project with a poorly chosen name. A team from King Abdullah University of Science and Technology in Saudi Arabia combined Vicuna-13B (a model fine-tuned on top of Facebook's LLaMA) with the BLIP-2 vision-language model to create a model that can conduct ChatGPT-style conversations around an uploaded image. The demo is very impressive, and the weights are available to download - 45MB for MiniGPT-4, but you'll need the much larger Vicuna and LLaMA weights as well.

Link 2023-04-17 Latest Twitter search results for "as an AI language model": Searching for "as an AI language model" on Twitter reveals hundreds of bot accounts which are clearly being driven by GPT models and have been asked to generate content which occasionally trips the ethical guidelines trained into the OpenAI models. If Twitter still had an affordable search API someone could do some incredible disinformation research on top of this, looking at which accounts are implicated, what kinds of things they are tweeting about, who they follow and retweet and so on.

Link 2023-04-17 RedPajama, a project to create leading open-source models, starts by reproducing LLaMA training dataset of over 1.2 trillion tokens: With the number of projects that have used LLaMA as a foundation model since its release two months ago - despite its non-commercial license - it's clear that there is a strong desire for a fully openly licensed alternative. RedPajama is a collaboration between Together, Ontocord.ai, ETH DS3Lab, Stanford CRFM, Hazy Research, and MILA Québec AI Institute aiming to build exactly that. Step one is gathering the training data: the LLaMA paper described a 1.2 trillion token training set gathered from sources that included Wikipedia, Common Crawl, GitHub, arXiv, Stack Exchange and more. RedPajama-Data-1T is an attempt at recreating that training set. It's now available to download, as 2,084 separate multi-GB jsonl files - 2.67TB total. Even without a trained model, this is a hugely influential contribution to the world of open source LLMs. Any team looking to build their own LLaMA from scratch can now jump straight to the next stage, training the model.

Link 2023-04-19 LLaVA: Large Language and Vision Assistant: Yet another multi-modal model combining a vision model (pre-trained CLIP ViT-L/14) and a LLaMA derivative model (Vicuna). The results I get from their demo are even more impressive than MiniGPT-4. Also includes a new training dataset, LLaVA-Instruct-150K, derived from GPT-4 and subject to the same warnings about the OpenAI terms of service.

Link 2023-04-19 Inside the secret list of websites that make AI chatbots sound smart: Washington Post story digging into the C4 dataset - Colossal Clean Crawled Corpus, a filtered version of Common Crawl that's often used for training large language models. They include a neat interactive tool for searching a domain to see if it's included - TIL that simonwillison.net is the 106,649th ranked site in C4 by number of tokens, 189,767 total - 0.0001% of the total token volume in C4.

Link 2023-04-19 Stability AI Launches the First of its StableLM Suite of Language Models: 3B and 7B base models, with 15B and 30B on the way. CC BY-SA-4.0. "StableLM is trained on a new experimental dataset built on The Pile, but three times larger with 1.5 trillion tokens of content. We will release details on the dataset in due course."

Link 2023-04-21 Bard now helps you code: Google have enabled Bard's code generation abilities - these were previously only available through jailbreaking. It's pretty good - I got it to write me code to download a CSV file and insert it into a SQLite database - though when I challenged it to protect against SQL injection it hallucinated a non-existent "cursor.prepare()" method. Generated code can be exported to a Colab notebook with a click.
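For comparison, the usual way to guard against SQL injection with Python's sqlite3 module is to pass values as parameters, not to build the SQL string by hand. This is a minimal sketch with a hypothetical people table, not the code Bard produced:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("create table people (name text)")

# Untrusted input that would break out of a string-concatenated query
name = "Robert'); drop table people; --"

# The ? placeholder passes the value separately from the SQL text
conn.execute("insert into people (name) values (?)", (name,))

stored = conn.execute("select name from people").fetchall()
print(stored)
```

The hostile string ends up stored as ordinary data and the table survives.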

Quote 2023-04-21

The AI Writing thing is just pivot to video all over again, a bunch of dead-eyed corporate types willing to listen to any snake oil salesman who offers them higher potential profits. It'll crash in a year but scuttle hundreds of livelihoods before it does.