ospeak: a CLI tool for speaking text in the terminal via OpenAI

Plus more new APIs from today's OpenAI DevDay event

In this newsletter:

ospeak: a CLI tool for speaking text in the terminal via OpenAI

DALL-E 3, GPT4All, PMTiles, sqlite-migrate, datasette-edit-schema

Plus 12 links and 2 quotations and 1 TIL

ospeak: a CLI tool for speaking text in the terminal via OpenAI - 2023-11-07

I attended OpenAI DevDay today, the first OpenAI developer conference. It was a lot. They released a bewildering array of new API tools, which I'm only just beginning to wade through and understand.

My preferred way to understand a new API is to build something with it, and in my experience the easiest and fastest things to build are usually CLI utilities.

I've been enjoying the new ChatGPT voice interface a lot, so I was delighted to see that OpenAI today released a text-to-speech API that uses the same model.

My first new tool is ospeak, a CLI utility for piping text through that tool.

ospeak

You can install ospeak like this. I've only tested it on macOS, but it might well work on Linux and Windows as well:

pipx install ospeak

Since it uses the OpenAI API you'll need an API key. You can either pass that directly to the tool:

ospeak "Hello there" --token="sk-..."

Or you can set it as an environment variable so you don't have to enter it multiple times:

export OPENAI_API_KEY=sk-...

ospeak "Hello there"

Now you can call it and your computer will speak whatever you pass to it!

ospeak "This is really quite a convincing voice"

OpenAI currently have six voices: alloy, echo, fable, onyx, nova and shimmer. The command defaults to alloy, but you can specify another voice by passing -v/--voice:

ospeak "This is a different voice" -v nova

If you pass the special value -v all it will say the same thing in each voice, prefixing with the name of the voice:

ospeak "This is a demonstration of my voice." -v all

Here's a recording of the output from that:

https://static.simonwillison.net/static/2023/all-voices.m4a

You can also set the speed - from 0.25 (four times slower than normal) to 4.0 (four times faster). I find 2x is fast but still understandable:

ospeak "This is a fast voice" --speed 2.0

Finally, you can save the output to a .mp3 or .wav file instead of speaking it through the speakers, using the -o/--output option:

ospeak "This is saved to a file" -o output.mp3

That's pretty much all there is to it. There are a few more details in the README.

The source code was adapted from an example in OpenAI's documentation.
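For a sense of what a tool like this does under the hood, here is a minimal Python sketch, not ospeak's actual source. It assumes the official openai Python package (version 1 or later) and the "tts-1" model name; the helper names build_speech_request and save_speech are hypothetical. The voice list and the 0.25 to 4.0 speed range come from the options described above.

```python
# Sketch only: "tts-1" and both helper names are assumptions, not ospeak's code.
VOICES = ("alloy", "echo", "fable", "onyx", "nova", "shimmer")


def build_speech_request(text, voice="alloy", speed=1.0):
    """Validate the options and return keyword arguments for the speech endpoint."""
    if voice not in VOICES:
        raise ValueError(f"voice must be one of: {', '.join(VOICES)}")
    if not 0.25 <= speed <= 4.0:
        raise ValueError("speed must be between 0.25 and 4.0")
    return {"model": "tts-1", "voice": voice, "input": text, "speed": speed}


def save_speech(text, path, **options):
    """Call the API and write the returned audio bytes to path."""
    from openai import OpenAI  # imported lazily; requires `pip install openai`

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.audio.speech.create(**build_speech_request(text, **options))
    with open(path, "wb") as f:
        f.write(response.read())
```

Calling save_speech("This is saved to a file", "output.mp3", voice="nova") would make a billable API request, so only build_speech_request can be exercised offline.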

The real fun is when you combine it with llm, to pipe output from a language model directly into the tool. Here's how to have your computer give a passionate speech about why you should care about pelicans:

llm -m gpt-4-turbo \
"A short passionate speech about why you should care about pelicans" \
| ospeak -v nova

Here's what that gave me (transcript here):

https://static.simonwillison.net/static/2023/pelicans.m4a

I thoroughly enjoy how using text-to-speech like this genuinely elevates an otherwise unexciting piece of output from an LLM. This speech engine really is very impressive.

LLM 0.12 for gpt-4-turbo

I upgraded LLM to support the newly released GPT 4.0 Turbo model - an impressive beast which is 1/3 the price of GPT-4 (technically 3x cheaper for input tokens and 2x cheaper for output) and supports a huge 128,000 tokens, up from 8,000 for regular GPT-4.

You can try that out like so:

pipx install llm

llm keys set openai

# Paste OpenAI API key here

llm -m gpt-4-turbo "Ten great names for a pet walrus"

# Or a shortcut:

llm -m 4t "Ten great names for a pet walrus"

Here's a one-liner that summarizes the Hacker News discussion about today's OpenAI announcements using the new model (and taking advantage of its much longer token limit):

curl -s "https://hn.algolia.com/api/v1/items/38166420" | \
jq -r 'recurse(.children[]) | .author + ": " + .text' | \
llm -m gpt-4-turbo 'Summarize the themes of the opinions expressed here,
including direct quotes in quote markers (with author attribution) for each theme.
Fix HTML entities. Output markdown. Go long.'

Example output here. I adapted that from my Claude 2 version, but I found I had to adjust the prompt a bit to get GPT-4 Turbo to output quotes in the manner I wanted.
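The jq step in that pipeline walks the nested comment tree and emits one "author: text" line per item. A rough Python equivalent, assuming each item is a dict with "author", "text" and "children" keys as the jq expression implies (the function name flatten_comments is hypothetical, and missing values are treated as empty strings):

```python
def flatten_comments(item):
    """Yield 'author: text' for an item and then for all nested children."""
    author = item.get("author") or ""
    text = item.get("text") or ""
    yield f"{author}: {text}"
    # Depth-first, parent before children, like jq's recurse(.children[])
    for child in item.get("children", []):
        yield from flatten_comments(child)


thread = {
    "author": "alice",
    "text": "Top-level comment",
    "children": [
        {"author": "bob", "text": "A reply", "children": []},
    ],
}
print("\n".join(flatten_comments(thread)))
```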

I also added support for a new -o seed 1 option for the OpenAI models, which passes a seed integer that more-or-less results in reproducible outputs - another new feature announced today.

So much more to explore

I've honestly hardly even begun to dig into the things that were released today. A few of the other highlights:

GPT-4 vision! You can now pass images to the GPT-4 API, in the same way as ChatGPT has supported for the past few weeks. I have so many things I want to build on top of this.

JSON mode: both 3.5 and 4.0 turbo can now reliably produce valid JSON output. Previously they could produce JSON but would occasionally make mistakes - this mode makes mistakes impossible by altering the token stream as it is being produced (similar to Llama.cpp grammars).

Function calling got some big upgrades, the most important of which is that you can now be asked by the API to execute multiple functions in parallel.

Assistants. This is the big one. You can now define custom GPTs (effectively a custom system prompt, set of function calls and collection of documents for use with Retrieval Augmented Generation) using the ChatGPT interface or via the API, then share those with other people.... or use them directly via the API. This makes building simple RAG systems trivial, and you can also enable both Code Interpreter and Bing Browse mode as part of your new assistant. It's a huge recipe for prompt injection, but it also cuts out a lot of the work involved in building a custom chatbot.

Honestly today was pretty overwhelming. I think it's going to take us all months to fully understand the new capabilities we have around the OpenAI family of models.

It also feels like a whole bunch of my potential future side projects just dropped from several weeks of work to several hours.

DALL-E 3, GPT4All, PMTiles, sqlite-migrate, datasette-edit-schema - 2023-10-30

I wrote a lot this week. I also did some fun research into new options for self-hosting vector maps and pushed out several new releases of plugins.

On the blog

Now add a walrus: Prompt engineering in DALL-E 3 talked about my explorations of the new DALL-E 3 image generation model, including some reverse engineering showing how OpenAI prompt engineered ChatGPT to generate its own prompts for DALL-E 3. And a lot of pictures of pelicans. I also wrote a TIL about the CSS grids I used in that post.

In Execute Jina embeddings with a CLI using llm-embed-jina I released a new plugin to run the new Jina AI 8K text embedding model using my LLM command-line tool.

Embeddings: What they are and why they matter is the big write-up of my talk about embeddings from PyBay this year. This has received a lot of traffic, presumably because it provides one of the more accessible answers to the question "what are embeddings?".

PMTiles and MapLibre GL

I saw a post about Protomaps on Hacker News. It's absolutely fantastic technology.

The Protomaps PMTiles file format lets you bundle together vector tiles in a single file which is designed to be queried using HTTP range header requests.

This means you can drop a single 107GB file on cloud hosting and use it to efficiently serve vector maps to clients, fetching just the data they need for the current map area.
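The mechanism that makes this work is the standard HTTP Range header, which asks a server for a slice of a file rather than the whole thing. Here is a small sketch of such a request using only the Python standard library. It does not parse the PMTiles format itself, and the function names are hypothetical.

```python
import urllib.request


def range_header(offset, length):
    """Return the Range header value for `length` bytes starting at `offset`."""
    if offset < 0 or length <= 0:
        raise ValueError("offset must be >= 0 and length must be > 0")
    # HTTP byte ranges are inclusive at both ends
    return f"bytes={offset}-{offset + length - 1}"


def fetch_range(url, offset, length):
    """Fetch only the requested bytes from a server that supports range requests."""
    request = urllib.request.Request(
        url, headers={"Range": range_header(offset, length)}
    )
    with urllib.request.urlopen(request) as response:
        return response.read()
```

A client library would issue a handful of these requests, first for the file's index and then for the tiles covering the visible map area.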

Even better than that, you can create your own subset of the larger map covering just the area you care about.

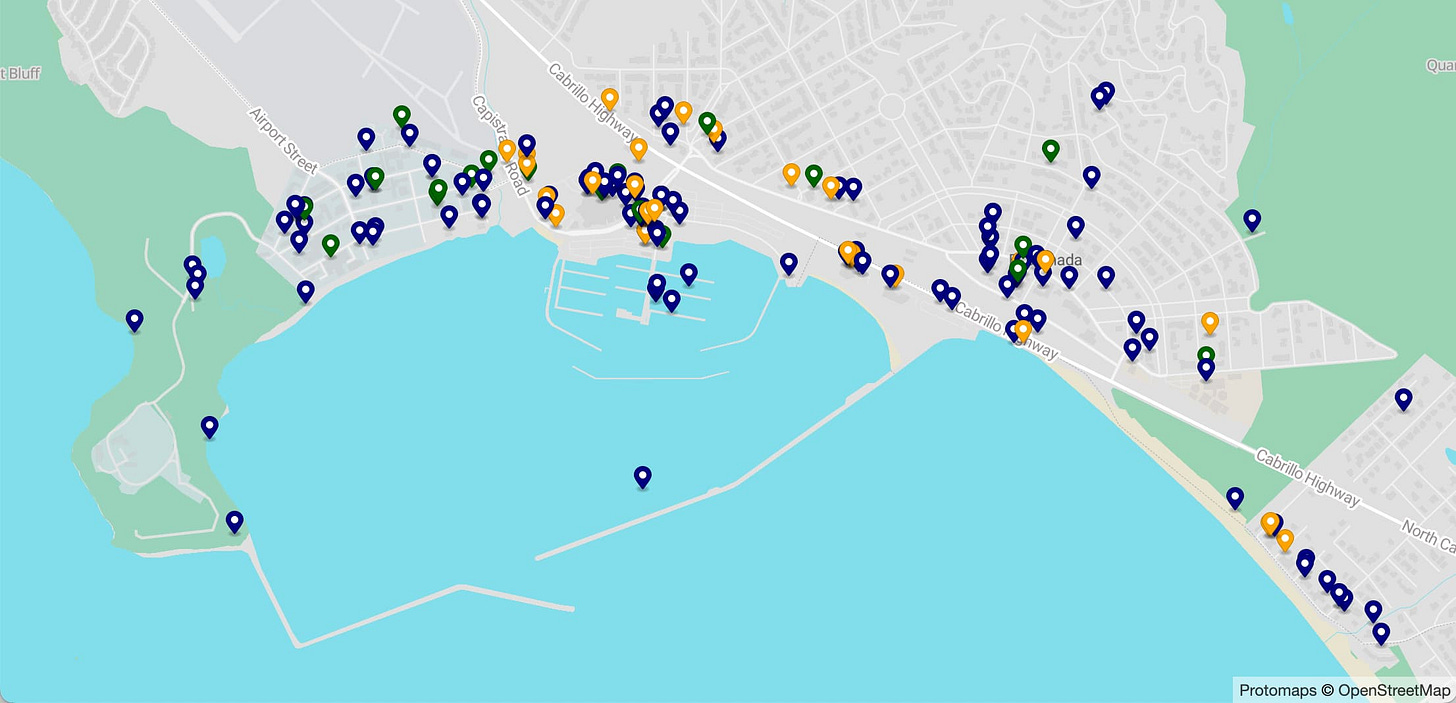

I tried this out against my hometown of Half Moon Bay and got a building-outline-level vector map for the whole town in just a 2MB file!

You can see the result (which also includes business listing markers from Overture maps) at simonw.github.io/hmb-map.

Lots more details of how I built this, including using Vite as a build tool and the MapLibre GL JavaScript library to serve the map, in my TIL Serving a custom vector web map using PMTiles and maplibre-gl.

I'm so excited about this: we now have the ability to entirely self-host vector maps of any location in the world, using openly licensed data, without depending on anything other than our own static file hosting web server.

llm-gpt4all

This was a tiny release - literally a one line code change - with a huge potential impact.

Nomic AI's GPT4All is a really cool project. They describe their focus as "a free-to-use, locally running, privacy-aware chatbot. No GPU or internet required." - they've taken llama.cpp (and other libraries) and wrapped them in a much nicer experience, complete with Windows, macOS and Ubuntu installers.

Under the hood it's mostly Python, and Nomic have done a fantastic job releasing that Python core as an installable Python package - meaning you can literally pip install gpt4all to get almost everything you need to run a local language model!

Unlike alternative Python libraries MLC and llama-cpp-python, Nomic have done the work to publish compiled binary wheels to PyPI... which means pip install gpt4all works without needing a compiler toolchain or any extra steps!

My LLM tool has had a llm-gpt4all plugin since I first added alternative model backends via plugins in July. Unfortunately, it spat out weird debugging information that I had been unable to hide (a problem that still affects llm-llama-cpp).

Nomic have fixed this!

As a result, llm-gpt4all is now my recommended plugin for getting started running local LLMs:

pipx install llm

llm install llm-gpt4all

llm -m mistral-7b-instruct-v0 "ten facts about pelicans"

The latest plugin can also now use the GPU on macOS, a key feature of Nomic's big release in September.

sqlite-migrate

sqlite-migrate is my plugin that adds a simple migration system to sqlite-utils, for applying changes to a database schema in a controlled, repeatable way.

Alex Garcia spotted a bug in the way it handled multiple migration sets with overlapping migration names, which is now fixed in sqlite-migrate 0.1b0.

Ironically the fix involved changing the schema of the _sqlite_migrations table used to track which migrations have been applied... which is the one part of the system that isn't itself managed by its own migration system! I had to implement a conditional check instead that checks if the table needs to be updated.
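A conditional check of this kind can be sketched with Python's built-in sqlite3 module. This is not the actual sqlite-migrate code: the helper name ensure_column and the "migration_set" column used in the example are assumptions for illustration.

```python
import sqlite3


def ensure_column(conn, table, column, definition):
    """Add `column` to `table` only if it is missing. Returns True if added."""
    # PRAGMA table_info returns one row per column; index 1 is the column name
    existing = {row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')}
    if column in existing:
        return False
    conn.execute(f'ALTER TABLE "{table}" ADD COLUMN "{column}" {definition}')
    return True


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE _sqlite_migrations (name TEXT, applied_at TEXT)")
print(ensure_column(conn, "_sqlite_migrations", "migration_set", "TEXT"))
print(ensure_column(conn, "_sqlite_migrations", "migration_set", "TEXT"))
```

Because the check inspects the live schema, it is safe to run every time the tool starts.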

A recent thread about SQLite on Hacker News included a surprising number of complaints about the difficulty of running migrations, due to the lack of features of the core ALTER TABLE implementation.

The combination of sqlite-migrate and the table.transform() method in sqlite-utils offers a pretty robust solution to this problem. Clearly I need to put more work into promoting it!
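For changes that ALTER TABLE cannot make, the usual SQLite workaround is to create a new table, copy the rows across, drop the old table and rename the new one. The sketch below shows that general pattern with the standard sqlite3 module. It is not the sqlite-utils implementation, which handles details such as indexes and foreign keys that are ignored here. The function name rebuild_table is hypothetical, and the sketch assumes no transaction is already open on the connection.

```python
import sqlite3


def rebuild_table(conn, table, new_columns_sql, columns):
    """Replace `table` with a new definition, copying the named columns across."""
    cols = ", ".join(columns)
    conn.execute("BEGIN")  # assumes no transaction is already open
    try:
        conn.execute(f"CREATE TABLE {table}_new ({new_columns_sql})")
        conn.execute(f"INSERT INTO {table}_new ({cols}) SELECT {cols} FROM {table}")
        conn.execute(f"DROP TABLE {table}")
        conn.execute(f"ALTER TABLE {table}_new RENAME TO {table}")
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise


# isolation_level=None stops the module from opening transactions implicitly
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE pets (id INTEGER PRIMARY KEY, age TEXT)")
conn.execute("INSERT INTO pets VALUES (1, '4')")
# Change the type of the age column from TEXT to INTEGER
rebuild_table(conn, "pets", "id INTEGER PRIMARY KEY, age INTEGER", ["id", "age"])
print(conn.execute("SELECT id, age, typeof(age) FROM pets").fetchall())
```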

Homebrew trouble for LLM

I started getting confusing bug reports for my various LLM projects, all of which boiled down to a failure to install plugins that depended on PyTorch.

It turns out the LLM package for Homebrew upgraded to Python 3.12 last week... but PyTorch isn't yet available for Python 3.12.

This means that while base LLM installed from Homebrew works fine, attempts to install things like my new llm-embed-jina plugin fail with weird errors.

I'm not sure the best way to address this. For the moment I've removed the recommendation to install using Homebrew and replaced it with pipx in a few places. I have an open issue to find a better solution for this.

The difficulty of debugging this issue prompted me to ship a new plugin that I've been contemplating for a while: llm-python.

Installing this plugin adds a new llm python command, which runs a Python interpreter in the same virtual environment as LLM - useful if you installed LLM via pipx or Homebrew and don't know where that virtual environment is located.

It's great for debugging: I can ask people to run llm python -c 'import sys; print(sys.path)' for example to figure out what their Python path looks like.

It's also promising as a tool for future tutorials about the LLM Python library. I can tell people to pipx install llm and then run llm python to get a Python interpreter with the library already installed, without them having to mess around with virtual environments directly.
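As an illustration of the kind of thing you might paste into that interpreter when debugging an install, here is a small sketch that uses only the standard library. The function name environment_report is hypothetical.

```python
import sys


def environment_report():
    """Collect details that identify which Python environment is running."""
    return {
        "executable": sys.executable,
        "version": sys.version.split()[0],
        "prefix": sys.prefix,
        # In a virtual environment sys.prefix differs from sys.base_prefix
        "in_virtualenv": sys.prefix != sys.base_prefix,
        "path": list(sys.path),
    }


for key, value in environment_report().items():
    print(f"{key}: {value}")
```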

Add and remove indexes in datasette-edit-schema

We're iterating on Datasette Cloud based on feedback from people using the preview. One request was the ability to add and remove indexes from larger tables, to help speed up faceting.

datasette-edit-schema 0.7 adds that feature.
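To see why an index helps a facet-style query, you can compare SQLite's query plan before and after creating one. This sketch uses the standard sqlite3 module with a hypothetical places table. The GROUP BY query is an assumption about roughly what a facet count looks like, not Datasette's exact SQL.

```python
import sqlite3


def query_plan(conn, sql):
    """Return the detail strings from EXPLAIN QUERY PLAN for a query."""
    # Each row is (id, parent, notused, detail)
    return [row[3] for row in conn.execute(f"EXPLAIN QUERY PLAN {sql}")]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE places (id INTEGER PRIMARY KEY, state TEXT)")
conn.executemany(
    "INSERT INTO places (state) VALUES (?)", [("CA",), ("NY",), ("CA",)]
)
facet = "SELECT state, count(*) FROM places GROUP BY state"

print(query_plan(conn, facet))  # plan without an index
conn.execute("CREATE INDEX idx_places_state ON places (state)")
print(query_plan(conn, facet))  # plan once the index exists
```

The exact wording of the plan varies between SQLite versions, which is why no expected output is shown.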

That plugin includes this script for automatically updating the screenshot in the README using shot-scraper. Here's the latest result:

Releases this week

sqlite-migrate 0.1b0 - 2023-10-27

A simple database migration system for SQLite, based on sqlite-utils

llm-python 0.1 - 2023-10-27

"llm python" is a command to run a Python interpreter in the LLM virtual environment

llm-embed-jina 0.1.2 - 2023-10-26

Embedding models from Jina AI

datasette-edit-schema 0.7 - 2023-10-26

Datasette plugin for modifying table schemas

datasette-ripgrep 0.8.2 - 2023-10-25

Web interface for searching your code using ripgrep, built as a Datasette plugin

llm-gpt4all 0.2 - 2023-10-24

Plugin for LLM adding support for the GPT4All collection of models

TIL this week

A simple two column CSS grid - 2023-10-27

Serving a custom vector web map using PMTiles and maplibre-gl - 2023-10-24

Serving a JavaScript project built using Vite from GitHub Pages - 2023-10-24

Link 2023-10-27 Making PostgreSQL tick: New features in pg_cron: pg_cron adds cron-style scheduling directly to PostgreSQL. It's a pretty mature extension at this point, and recently gained the ability to schedule repeating tasks at intervals as low as every 1s.

The examples in this post are really informative. I like this example, which cleans up the ever-growing cron.job_run_details table by using pg_cron itself to run the cleanup:

SELECT cron.schedule('delete-job-run-details', '0 12 * * *', $$DELETE FROM cron.job_run_details WHERE end_time < now() - interval '3 days'$$);

pg_cron can be used to schedule functions written in PL/pgSQL, which is a great example of the kind of DSL that I used to avoid but I'm now much happier to work with because I know GPT-4 can write basic examples for me and help me understand exactly what unfamiliar code is doing.

TIL 2023-10-27 A simple two column CSS grid:

For my blog entry today Now add a walrus: Prompt engineering in DALL-E 3 I wanted to display little grids of 2x2 images along with their captions. …

Quote 2023-10-27

The thing nobody talks about with engineering management is this:

Every 3-4 months every person experiences some sort of personal crisis. A family member dies, they have a bad illness, they get into an argument with another person at work, etc. etc. Sadly, that is just life. Normally after a month or so things settle down and life goes on.

But when you are managing 6+ people it means there is *always* a crisis you are helping someone work through. You are always carrying a bit of emotional burden or worry around with you.

Link 2023-10-30 Through the Ages: Apple CPU Architecture: I enjoyed this review of Apple's various CPU migrations - Motorola 68k to PowerPC to Intel x86 to Apple Silicon - by Jacob Bartlett.

Link 2023-10-31 Microsoft announces new Copilot Copyright Commitment for customers: Part of an interesting trend where some AI vendors are reassuring their paying customers by promising legal support in the face of future legal threats:

"As customers ask whether they can use Microsoft’s Copilot services and the output they generate without worrying about copyright claims, we are providing a straightforward answer: yes, you can, and if you are challenged on copyright grounds, we will assume responsibility for the potential legal risks involved."

Link 2023-10-31 I’m Sorry I Bit You During My Job Interview: The way this 2011 McSweeney’s piece by Tom O’Donnell escalates is delightful.

Link 2023-10-31 Our search for the best OCR tool in 2023, and what we found: DocumentCloud's Sanjin Ibrahimovic reviews the best options for OCR. Tesseract scores highly for easily machine readable text, newcomer docTR is great for ease of use but still not great at handwriting. Amazon Textract is great for everything except non-Latin languages, Google Cloud Vision is great at pretty much everything except for ease-of-use. Azure AI Document Intelligence sounds worth considering as well.

Link 2023-10-31 My User Experience Porting Off setup.py: PyOxidizer maintainer Gregory Szorc provides a detailed account of his experience trying to figure out how to switch from setup.py to pyproject.toml for his zstandard Python package.

This kind of detailed usability feedback is incredibly valuable for project maintainers, especially when the user encountered this many different frustrations along the way. It's like the written version of a detailed usability testing session.

Link 2023-11-01 SQLite 3.44: Interactive release notes: Anton Zhiyanov compiled interactive release notes for the new release of SQLite, demonstrating several of the new features. I'm most excited about order by in aggregates - group_concat(name order by name desc) - which is something I've wanted in the past. Anton demonstrates how it works with JSON aggregate functions as well. The new date formatting options look useful as well.

Link 2023-11-01 Tracking SQLite Database Changes in Git: A neat trick from Garrit Franke that I hadn't seen before: you can teach "git diff" how to display human readable versions of the differences between binary files with a specific extension using the following:

git config diff.sqlite3.binary true

git config diff.sqlite3.textconv "echo .dump | sqlite3"

That way you can store binary files in your repo but still get back SQL diffs to compare them.

I still worry about the efficiency of storing binary files in Git, since I expect multiple versions of a text file to compress together better.
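To get a feel for what that textconv command feeds to git diff, Python's sqlite3 module can produce a comparable SQL dump through Connection.iterdump(). This is a sketch for inspection only, and its output is not guaranteed to match the sqlite3 command-line tool's .dump byte for byte. The function name dump_sql is hypothetical.

```python
import sqlite3


def dump_sql(path):
    """Return the database at `path` as SQL text, similar to sqlite3's .dump."""
    conn = sqlite3.connect(path)
    try:
        # iterdump() yields the SQL statements needed to recreate the database
        return "\n".join(conn.iterdump())
    finally:
        conn.close()
```

Diffing the output of dump_sql() for two versions of a database file gives a text diff comparable to the one the Git configuration above produces.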

Link 2023-11-04 Hacking Google Bard - From Prompt Injection to Data Exfiltration: Bard recently grew extension support, allowing it access to a user's personal documents. Here's the first reported prompt injection attack against that.

This kind of attack against LLM systems is inevitable any time you combine access to private data with exposure to untrusted inputs. In this case the attack vector is a Google Doc shared with the user, containing prompt injection instructions that tell the model to encode previous data into a URL and exfiltrate it via a markdown image.

Google's CSP headers restrict those images to *.google.com - but it turns out you can use Google AppScript to run your own custom data exfiltration endpoint on script.google.com.

Google claim to have fixed the reported issue - I'd be interested to learn more about how that mitigation works, and how robust it is against variations of this attack.

Link 2023-11-04 YouTube: OpenAssistant is Completed - by Yannic Kilcher: The OpenAssistant project was an attempt to crowdsource the creation of an alternative to ChatGPT, using human volunteers to build a Reinforcement Learning from Human Feedback (RLHF) dataset suitable for training this kind of model.

The project started in January. In this video from 24th October project founder Yannic Kilcher announces that the project is now shutting down.

They've declared victory in that the dataset they collected has been used by other teams as part of their training efforts, but admit that the overhead of running the infrastructure and moderation teams necessary for their project is more than they can continue to justify.

Link 2023-11-05 Stripe: Online migrations at scale: This 2017 blog entry from Jacqueline Xu at Stripe provides a very clear description of the "dual writes" pattern for applying complex data migrations without downtime: dual write to new and old tables, update the read paths, update the write paths and finally remove the now obsolete data - illustrated with an example of upgrading customers from having a single to multiple subscriptions.
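The four steps can be sketched as a toy in-memory store. This is an illustration of the pattern rather than Stripe's code: the class and attribute names are hypothetical, dicts stand in for database tables, and the backfill of existing rows is an assumption about how old data reaches the new table.

```python
class SubscriptionStore:
    """Toy migration from one subscription per customer to a list of them."""

    def __init__(self):
        self.old = {}  # customer_id -> single subscription
        self.new = {}  # customer_id -> list of subscriptions
        self.write_old = True
        self.read_from_new = False

    def write(self, customer_id, subscription):
        # Step 1: every write goes to both the old and the new table
        if self.write_old:
            self.old[customer_id] = subscription
        self.new[customer_id] = [subscription]

    def backfill(self):
        # Copy rows that were written before dual writing began
        for customer_id, subscription in self.old.items():
            self.new.setdefault(customer_id, [subscription])

    def read(self, customer_id):
        # Step 2: flip read_from_new once the new table is complete
        if self.read_from_new:
            return self.new[customer_id]
        return [self.old[customer_id]]

    def finish(self):
        # Steps 3 and 4: stop writing the old table, then remove its data
        self.write_old = False
        self.old.clear()


store = SubscriptionStore()
store.old["cus_1"] = "basic"   # data that predates the migration
store.write("cus_2", "pro")    # dual write
store.backfill()
store.read_from_new = True
store.finish()
print(store.read("cus_1"), store.read("cus_2"))
```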

Link 2023-11-05 See the History of a Method with git log -L: Neat Git trick from Caleb Hearth that I hadn't seen before, and it works for Python out of the box:

git log -L :path_with_format:__init__.py

That command displays a log (with diffs) of just the portion of commits that changed the path_with_format function in the __init__.py file.

Quote 2023-11-05

One of my fav early Stripe rules was from incident response comms: do not publicly blame an upstream provider. We chose the provider, so own the results—and use any pain from that as extra motivation to invest in redundant services, go direct to the source, etc.