OpenAI DevDay: Let’s build developer tools, not digital God

News from OpenAI’s developer platform event

In this newsletter:

OpenAI DevDay: Let’s build developer tools, not digital God

Weeknotes: Three podcasts, two trips and a new plugin system

Plus 6 links and 3 quotations and 1 TIL

OpenAI DevDay: Let’s build developer tools, not digital God - 2024-10-02

I had a fun time live blogging OpenAI DevDay yesterday - I’ve now shared notes about the live blogging system I threw together in a hurry on the day (with assistance from Claude and GPT-4o). Now that the smoke has settled a little, here are my impressions from the event.

Compared to last year

Comparisons with the first DevDay in November 2023 are unavoidable. That event was much more keynote-driven: just in the keynote OpenAI released GPT-4 vision, and Assistants, and GPTs, and GPT-4 Turbo (with a massive price drop), and their text-to-speech API. It felt more like a launch-focused product event than something explicitly for developers.

This year was different. Media weren’t invited, there was no livestream, Sam Altman didn’t present the opening keynote (he was interviewed at the end of the day instead) and the new features, while impressive, were not as abundant.

Several features were released in the last few months that could have been saved for DevDay: GPT-4o mini and the o1 model family are two examples. I’m personally happy that OpenAI are shipping features like that as they become ready rather than holding them back for an event.

I’m a bit surprised they didn’t talk about Whisper Turbo at the conference though, released just the day before - especially since that’s one of the few pieces of technology they release under an open source (MIT) license.

This was clearly intended as an event by developers, for developers. If you don’t build software on top of OpenAI’s platform there wasn’t much to catch your attention here.

As someone who does build software on top of OpenAI, there was a ton of valuable and interesting stuff.

Prompt caching, aka the big price drop

I was hoping we might see a price drop, seeing as there’s an ongoing pricing war between Gemini, Anthropic and OpenAI. We got one in an interesting shape: a 50% discount on input tokens for prompts with a shared prefix.

This isn’t a new idea: both Google Gemini and Claude offer a form of prompt caching discount, if you configure them correctly and make smart decisions about when and how the cache should come into effect.

The difference here is that OpenAI apply the discount automatically:

API calls to supported models will automatically benefit from Prompt Caching on prompts longer than 1,024 tokens. The API caches the longest prefix of a prompt that has been previously computed, starting at 1,024 tokens and increasing in 128-token increments. If you reuse prompts with common prefixes, we will automatically apply the Prompt Caching discount without requiring you to make any changes to your API integration.
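To make the 1,024/128 rule concrete, here's a minimal Python sketch of how many prompt tokens would be billed at the cached rate. It assumes the cached portion is the largest 1,024 + 128k block that fits inside the prefix shared with an earlier request, and that cached tokens cost exactly half the normal input price. `price_per_million` is a placeholder, not a quoted OpenAI rate, and both function names are my own.

```python
MIN_CACHED = 1024      # caching starts at 1,024 tokens
INCREMENT = 128        # cached prefix grows in 128-token steps
CACHE_DISCOUNT = 0.5   # assumed: cached input tokens billed at 50%


def cached_prefix_tokens(shared_prefix: int, prompt_tokens: int) -> int:
    """Tokens billed at the cached rate, under the assumed rounding rule."""
    # The reusable prefix can't be longer than the prompt itself
    shared = min(shared_prefix, prompt_tokens)
    if shared < MIN_CACHED:
        return 0
    # Round down to the nearest 1,024 + 128k boundary
    return MIN_CACHED + ((shared - MIN_CACHED) // INCREMENT) * INCREMENT


def estimate_input_cost(prompt_tokens: int, shared_prefix: int,
                        price_per_million: float) -> float:
    """Estimated input cost in dollars; price_per_million is a placeholder."""
    cached = cached_prefix_tokens(shared_prefix, prompt_tokens)
    uncached = prompt_tokens - cached
    per_token = price_per_million / 1_000_000
    return uncached * per_token + cached * per_token * CACHE_DISCOUNT


# A 2,000-token prompt whose first 2,000 tokens were sent before
print(cached_prefix_tokens(2000, 2000))  # 1920
print(estimate_input_cost(2000, 2000, 2.50))
```

In that example 1,920 tokens land in the cache and only the last 80 are billed at full price.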

50% off repeated long prompts is a pretty significant price reduction!

Anthropic's Claude implementation saves more money: 90% off rather than 50% - but is significantly more work to put into play.

Gemini’s caching requires you to pay per hour to keep your cache warm, which makes it extremely difficult to build against effectively in comparison to the other two.

It's worth noting that OpenAI are not the first company to offer automated caching discounts: DeepSeek have offered that through their API for a few months.

GPT-4o audio via the new WebSocket Realtime API

Absolutely the biggest announcement of the conference: the new Realtime API is effectively the API version of ChatGPT advanced voice mode, a user-facing feature that finally rolled out to everyone just a week ago.

This means we can finally tap directly into GPT-4o’s multimodal audio support: we can send audio directly into the model (without first transcribing it to text via something like Whisper), and we can have it directly return speech without needing to run a separate text-to-speech model.

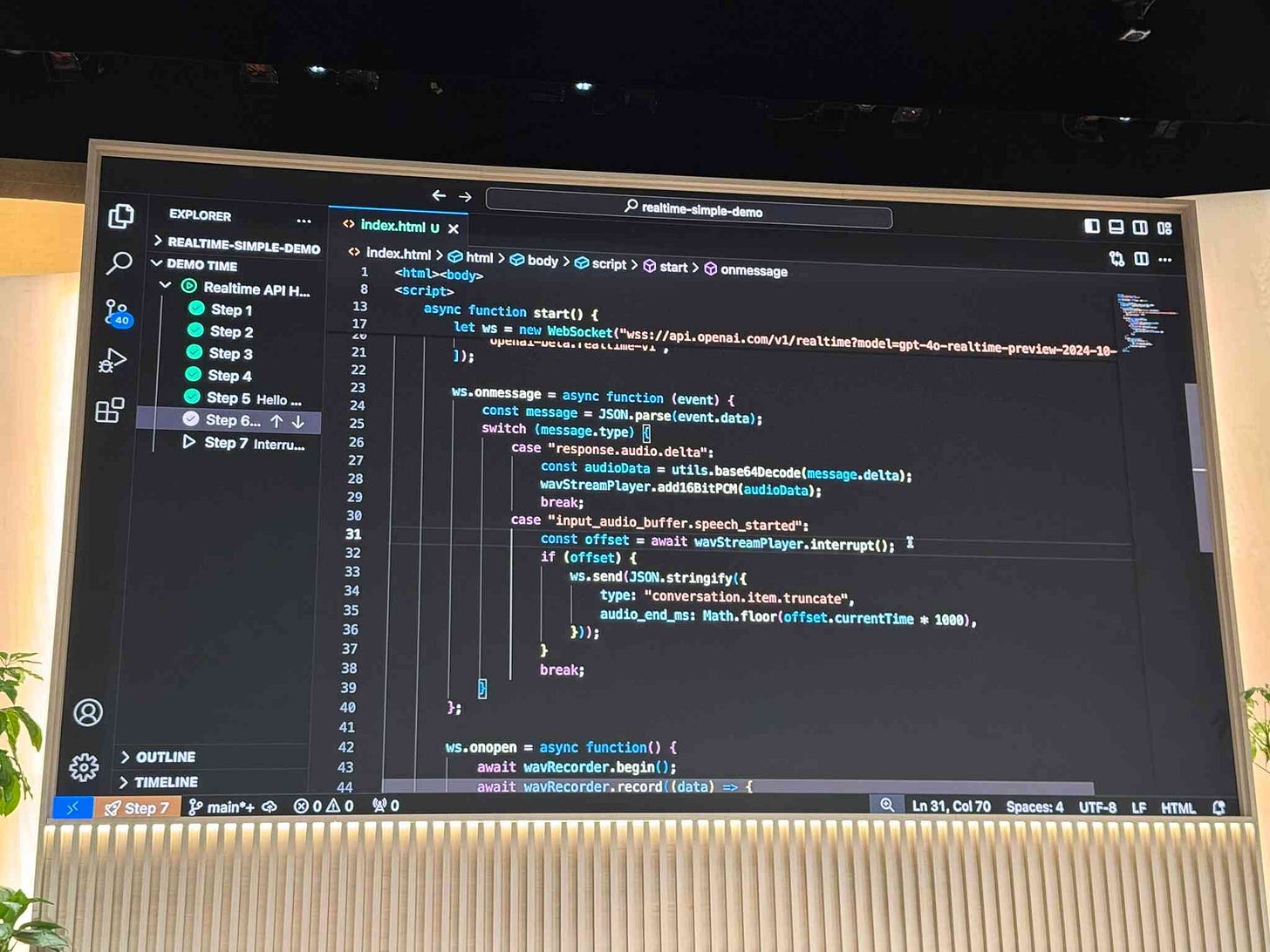

The way they chose to expose this is interesting: it’s not (yet) part of their existing chat completions API, instead using an entirely new API pattern built around WebSockets.

They designed it like that because they wanted it to be as realtime as possible: the API lets you constantly stream audio and text in both directions, and even supports allowing users to speak over and interrupt the model!
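Here's a rough Python sketch of the kind of JSON events that flow over that socket. The connection URL, the two headers and the event names (`conversation.item.create`, `input_audio_buffer.append`, `response.create`) reflect my reading of the beta documentation at launch and should be treated as assumptions that may change. The helper functions are hypothetical, and the sketch only builds messages rather than opening a real connection.

```python
import base64
import json

# Assumed beta endpoint; the model name is part of the query string
REALTIME_URL = "wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview-2024-10-01"


def connection_headers(api_key: str) -> dict:
    # Headers sent when opening the WebSocket (assumed from the beta docs)
    return {"Authorization": f"Bearer {api_key}", "OpenAI-Beta": "realtime=v1"}


def user_text_event(text: str) -> str:
    # Add a user text message to the conversation
    return json.dumps({
        "type": "conversation.item.create",
        "item": {
            "type": "message",
            "role": "user",
            "content": [{"type": "input_text", "text": text}],
        },
    })


def audio_append_event(pcm16_bytes: bytes) -> str:
    # Stream a chunk of raw audio, base64-encoded
    return json.dumps({
        "type": "input_audio_buffer.append",
        "audio": base64.b64encode(pcm16_bytes).decode("ascii"),
    })


def response_create_event(modalities=("text", "audio")) -> str:
    # Ask the model to respond with text and/or spoken audio
    return json.dumps({
        "type": "response.create",
        "response": {"modalities": list(modalities)},
    })


print(user_text_event("Hello!"))
print(response_create_event(("text",)))
```

With a WebSocket client you would open `REALTIME_URL` with those headers, send these strings at any time, and read server events (such as incremental text or audio deltas) as they stream back over the same connection.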

So far the Realtime API supports text, audio and function call / tool usage - but doesn't (yet) support image input (I've been assured that's coming soon). The combination of audio and function calling is super exciting alone though - several of the demos at DevDay used these to build fun voice-driven interactive web applications.

I like this WebSocket-focused API design a lot. My only hesitation is that, since an API key is needed to open a WebSocket connection, actually running this in production involves spinning up an authenticating WebSocket proxy. I hope OpenAI can provide a less code-intensive way of solving this in the future.
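The heart of such a proxy is that the browser authenticates to your own server with something short-lived, and only the server ever holds the real API key. Here's a stdlib-only Python sketch of that token check. The HMAC-signed `user_id:expiry:signature` format, `SECRET` and both function names are hypothetical choices of mine, and the actual frame forwarding (which needs a WebSocket library) is left out.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical signing secret; in practice load it from server-side config
SECRET = b"replace-with-a-real-secret"


def issue_token(user_id: str, ttl: int = 300, now: Optional[float] = None) -> str:
    """Give the browser a short-lived token instead of the OpenAI API key."""
    issued = time.time() if now is None else now
    payload = f"{user_id}:{int(issued + ttl)}"
    sig = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}:{sig}"


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the user id for a valid, unexpired token, otherwise None."""
    parts = token.rsplit(":", 2)
    if len(parts) != 3:
        return None
    user_id, expires, sig = parts
    expected = hmac.new(SECRET, f"{user_id}:{expires}".encode(),
                        hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking the signature via timing
    if not hmac.compare_digest(sig, expected):
        return None
    current = time.time() if now is None else now
    if current > int(expires):
        return None
    return user_id
```

On each WebSocket upgrade, the proxy would call `verify_token` on the token the page sends, refuse the connection if it returns `None`, and otherwise open its own upstream connection to OpenAI using the API key that never leaves the server.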

Code they showed during the event demonstrated using the native browser WebSocket class directly, but I can't find those code examples online now. I hope they publish them soon. For the moment the best things to look at are the openai-realtime-api-beta and openai-realtime-console repositories.

The new playground/realtime debugging tool - the OpenAI playground for the Realtime API - is a lot of fun to try out too.

Model distillation is fine-tuning made much easier

The other big developer-facing announcements were around model distillation, which to be honest is more of a usability enhancement and minor rebranding of their existing fine-tuning features.

OpenAI have offered fine-tuning for a few years now, most recently against their GPT-4o and GPT-4o mini models. They’ve practically been begging people to try it out, offering generous free tiers in previous months:

Today [August 20th 2024] we’re launching fine-tuning for GPT-4o, one of the most requested features from developers. We are also offering 1M training tokens per day for free for every organization through September 23.

That free offer has now been extended. A footnote on the pricing page today:

Fine-tuning for GPT-4o and GPT-4o mini is free up to a daily token limit through October 31, 2024. For GPT-4o, each qualifying org gets up to 1M complimentary training tokens daily and any overage will be charged at the normal rate of $25.00/1M tokens. For GPT-4o mini, each qualifying org gets up to 2M complimentary training tokens daily and any overage will be charged at the normal rate of $3.00/1M tokens
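To make that footnote concrete, here's a small Python sketch estimating one day's training bill from the limits and overage rates it quotes. It assumes the allowance applies per organization per day and that overage is billed linearly per token; the function name is my own.

```python
# Daily free allowance and overage price per 1M training tokens, from the footnote
FREE_TIER = {
    "gpt-4o": (1_000_000, 25.00),
    "gpt-4o-mini": (2_000_000, 3.00),
}


def daily_training_cost(model: str, training_tokens: int) -> float:
    """Dollar cost of one day's training tokens, assuming linear overage billing."""
    free_tokens, price_per_million = FREE_TIER[model]
    overage = max(0, training_tokens - free_tokens)
    return overage * price_per_million / 1_000_000


print(daily_training_cost("gpt-4o", 3_000_000))       # 50.0
print(daily_training_cost("gpt-4o-mini", 1_500_000))  # 0.0
```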

The problem with fine-tuning is that it’s really hard to do effectively. I tried it a couple of years ago myself against GPT-3 - just to apply tags to my blog content - and got disappointing results which deterred me from spending more money iterating on the process.

To fine-tune a model effectively you need to gather a high quality set of examples and you need to construct a robust set of automated evaluations. These are some of the most challenging (and least well understood) problems in the whole nascent field of prompt engineering.

OpenAI’s solution is a bit of a rebrand. “Model distillation” is a form of fine-tuning where you effectively teach a smaller model how to do a task based on examples generated by a larger model. It’s a very effective technique. Meta recently boasted about how their impressive Llama 3.2 1B and 3B models were “taught” by their larger models:

[...] powerful teacher models can be leveraged to create smaller models that have improved performance. We used two methods—pruning and distillation—on the 1B and 3B models, making them the first highly capable lightweight Llama models that can fit on devices efficiently.

Yesterday OpenAI released two new features to help developers implement this pattern.

The first is stored completions. You can now pass a "store": true parameter to have OpenAI permanently store your prompt and its response in their backend, optionally with your own additional tags to help you filter the captured data later.

You can view your stored completions at platform.openai.com/chat-completions.
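For illustration, here's roughly what such a request body looks like, built as a plain Python dict so it can be inspected without making an API call. `store` is the parameter described above; I'm assuming the optional tags go in the `metadata` field as string key/value pairs. The `build_stored_request` helper and the tag names are hypothetical.

```python
import json
from typing import Dict


def build_stored_request(prompt: str, tags: Dict[str, str],
                         model: str = "gpt-4o") -> dict:
    """Chat completions body asking OpenAI to keep the prompt and response."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "store": True,     # persist this prompt/response pair
        "metadata": tags,  # assumed: string tags for filtering later
    }


body = build_stored_request(
    "Suggest three tags for this blog post: ...",
    {"task": "blog-tagging", "source": "teacher-model"},
)
print(json.dumps(body, indent=2))
```

If I'm reading the SDK correctly, the same `store` and `metadata` fields can be passed as keyword arguments to `client.chat.completions.create(...)` in the official `openai` Python package.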

I’ve been doing effectively the same thing with my LLM command-line tool logging to a SQLite database for over a year now. It's a really productive pattern.

OpenAI pitch stored completions as a great way to collect a set of training data from their large models that you can later use to fine-tune (aka distill into) a smaller model.
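The same capture-then-distill loop can be sketched locally. This Python example logs prompt/response pairs to SQLite (in the spirit of what LLM does, though this schema is my own and not LLM's) and exports one model's outputs as JSONL in the chat fine-tuning format, one `{"messages": [...]}` object per line. The table, columns and function names are hypothetical.

```python
import json
import sqlite3


def open_log(path: str = ":memory:") -> sqlite3.Connection:
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS responses "
        "(id INTEGER PRIMARY KEY, model TEXT, system TEXT, prompt TEXT, response TEXT)"
    )
    return db


def log_response(db, model, system, prompt, response):
    # Record one prompt/response pair, e.g. from a large "teacher" model
    db.execute(
        "INSERT INTO responses (model, system, prompt, response) VALUES (?, ?, ?, ?)",
        (model, system, prompt, response),
    )
    db.commit()


def export_training_jsonl(db, teacher_model: str) -> str:
    # Turn the teacher's outputs into chat-format training examples
    lines = []
    rows = db.execute(
        "SELECT system, prompt, response FROM responses WHERE model = ? ORDER BY id",
        (teacher_model,),
    )
    for system, prompt, response in rows:
        messages = []
        if system:
            messages.append({"role": "system", "content": system})
        messages.append({"role": "user", "content": prompt})
        messages.append({"role": "assistant", "content": response})
        lines.append(json.dumps({"messages": messages}))
    return "\n".join(lines)


db = open_log()
log_response(db, "gpt-4o", "Return comma-separated tags.",
             "Post about SQLite", "sqlite, databases")
print(export_training_jsonl(db, "gpt-4o"))
```

The exported file is what you would then upload to fine-tune the smaller model.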

The second, even more impactful, feature is evals. You can now define and run comprehensive prompt evaluations directly inside the OpenAI platform.
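OpenAI's evals live in their dashboard, but the underlying idea is simple enough to sketch. Here's a minimal, provider-agnostic Python eval loop: a list of test cases, a model function (stubbed so it runs offline), and a scorer. The case format and the normalized exact-match scoring are my own assumptions, not a description of how OpenAI's tool works.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Case:
    prompt: str
    expected: str


def normalize(text: str) -> str:
    # Ignore case and surrounding/repeated whitespace when comparing
    return " ".join(text.lower().split())


def run_eval(cases: List[Case], model: Callable[[str], str]) -> float:
    """Fraction of cases where the model's answer matches the expected one."""
    if not cases:
        raise ValueError("need at least one case")
    passed = sum(normalize(model(c.prompt)) == normalize(c.expected) for c in cases)
    return passed / len(cases)


def stub_model(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline
    answers = {"Capital of France?": "Paris", "2 + 2?": " 4 "}
    return answers.get(prompt, "I don't know")


cases = [
    Case("Capital of France?", "paris"),
    Case("2 + 2?", "4"),
    Case("Largest planet?", "Jupiter"),
]
print(run_eval(cases, stub_model))
```

Running the same cases against a large model and a fine-tuned smaller one gives a like-for-like comparison, which is the check you need before trusting a distilled model.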

OpenAI’s new eval tool competes directly with a bunch of existing startups - I’m quite glad I didn’t invest much effort in this space myself!

The combination of evals and stored completions certainly seems like it should make the challenge of fine-tuning a custom model far more tractable.

The other fine-tuning announcement, greeted by applause in the room, was fine-tuning for images. This has always felt to me like one of the most obviously beneficial fine-tuning use-cases, since it’s much harder to get great image recognition results from sophisticated prompting alone.

From a strategic point of view this makes sense as well: it has become increasingly clear over the last year that many prompts are inherently transferable between models - it’s very easy to take an application with prompts designed for GPT-4o and switch it to Claude or Gemini or Llama with few if any changes required.

A fine-tuned model on the OpenAI platform is likely to be far more sticky.

Let’s build developer tools, not digital God

In the last session of the day I furiously live blogged the Fireside Chat between Sam Altman and Kevin Weil, trying to capture as much of what they were saying as possible.

A bunch of the questions were about AGI. I’m personally quite uninterested in AGI: it’s always felt a bit too much like science fiction for me. I want useful AI-driven tools that help me solve the problems I want to solve.

One point of frustration: Sam referenced OpenAI’s five-level framework a few times. I found several news stories (many paywalled - here's one that isn't) about it but I can’t find a definitive URL on an OpenAI site that explains what it is! This is why you should always give people something to link to, so they can talk about your features and ideas.

Both Sam and Kevin seemed to be leaning away from AGI as a term. From my live blog notes (which paraphrase what was said unless I use quotation marks):

Sam says they're trying to avoid the term now because it has become so over-loaded. Instead they think about their new five steps framework.

"I feel a little bit less certain on that" with respect to the idea that an AGI will make a new scientific discovery.

Kevin: "There used to be this idea of AGI as a binary thing [...] I don't think that's how think about it any more".

Sam: Most people looking back in history won't agree when AGI happened. The Turing test whooshed past and nobody cared.

I for one found this very reassuring. The thing I want from OpenAI is more of what we got yesterday: platform tools on top of which I can build unique software that I could not have built previously.

If the ongoing, well-documented internal turmoil at OpenAI from the last year is a result of the organization reprioritizing towards shipping useful, reliable tools for developers (and consumers) over attempting to build a digital God, then I’m all for it.

And yet… OpenAI just this morning finalized a raise of another $6.5 billion at a staggering $157 billion post-money valuation. That feels more like a digital God valuation to me than a platform for developers in an increasingly competitive space.

Weeknotes: Three podcasts, two trips and a new plugin system - 2024-09-30

I fell behind a bit on my weeknotes. Here's most of what I've been doing in September.

Lisbon, Portugal and Durham, North Carolina

I had two trips this month. The first was a short visit to Lisbon, Portugal for the Python Software Foundation's annual board retreat. This inspired me to write about Things I've learned serving on the board of the Python Software Foundation.

The second was to Durham, North Carolina for DjangoCon US 2024. I wrote about that one in Themes from DjangoCon US 2024.

My talk at DjangoCon was about plugin systems, and in a classic example of conference-driven development I ended up writing and releasing a new plugin system for Django in preparation for that talk. I introduced that in DJP: A plugin system for Django.

Podcasts

I haven't been a podcast guest since January, and then three came along at once! All three appearances involved LLMs in some way but I don't think there was a huge amount of overlap in terms of what I actually said.

I went on The Software Misadventures Podcast to talk about my career to date.

My appearance on TWIML dug into ways in which I use Claude and ChatGPT to help me write code.

I was the guest for the inaugural episode of Gergely Orosz's Pragmatic Engineer Podcast, which ended up touching on a whole array of different topics relevant to modern software engineering, from the importance of open source to the impact AI tools are likely to have on our industry.

Gergely has been sharing neat edited snippets from our conversation on Twitter. Here's one on RAG and another about how open source has been the biggest productivity boost of my career.

On the blog

NotebookLM's automatically generated podcasts are surprisingly effective - Sept. 29, 2024

Themes from DjangoCon US 2024 - Sept. 27, 2024

DJP: A plugin system for Django - Sept. 25, 2024

Notes on using LLMs for code - Sept. 20, 2024

Things I've learned serving on the board of the Python Software Foundation - Sept. 18, 2024

Notes on OpenAI's new o1 chain-of-thought models - Sept. 12, 2024

Notes from my appearance on the Software Misadventures Podcast - Sept. 10, 2024

Teresa T is name of the whale in Pillar Point Harbor near Half Moon Bay - Sept. 8, 2024

Museums

Releases

shot-scraper 1.5 - 2024-09-27
A command-line utility for taking automated screenshots of websites

django-plugin-datasette 0.2 - 2024-09-26
Django plugin to run Datasette inside of Django

djp 0.3.1 - 2024-09-26
A plugin system for Django

llm-gemini 0.1a5 - 2024-09-24
LLM plugin to access Google's Gemini family of models

django-plugin-blog 0.1.1 - 2024-09-24
A blog for Django as a DJP plugin.

django-plugin-database-url 0.1 - 2024-09-24
Django plugin for reading the DATABASE_URL environment variable

django-plugin-django-header 0.1.1 - 2024-09-23
Add a Django-Compositions HTTP header to a Django app

llm-jina-api 0.1a0 - 2024-09-20
Access Jina AI embeddings via their API

llm 0.16 - 2024-09-12
Access large language models from the command-line

datasette-acl 0.4a4 - 2024-09-10
Advanced permission management for Datasette

llm-cmd 0.2a0 - 2024-09-09
Use LLM to generate and execute commands in your shell

files-to-prompt 0.3 - 2024-09-09
Concatenate a directory full of files into a single prompt for use with LLMs

json-flatten 0.3.1 - 2024-09-07
Python functions for flattening a JSON object to a single dictionary of pairs, and unflattening that dictionary back to a JSON object

csv-diff 1.2 - 2024-09-06
Python CLI tool and library for diffing CSV and JSON files

datasette 1.0a16 - 2024-09-06
An open source multi-tool for exploring and publishing data

datasette-search-all 1.1.4 - 2024-09-06
Datasette plugin for searching all searchable tables at once

TILs

How streaming LLM APIs work - 2024-09-21

Quote 2024-09-30

But in terms of the responsibility of journalism, we do have intense fact-checking because we want it to be right. Those big stories are aggregations of incredible journalism. So it cannot function without journalism. Now, we recheck it to make sure it's accurate or that it hasn't changed, but we're building this to make jokes. It's just we want the foundations to be solid or those jokes fall apart. Those jokes have no structural integrity if the facts underneath them are bullshit.

Link 2024-09-30 llama-3.2-webgpu:

Llama 3.2 1B is a really interesting model, given its 128,000 token input and its tiny size (barely more than a GB).

This page loads a 1.24GB q4f16 ONNX build of the Llama-3.2-1B-Instruct model and runs it with a React-powered chat interface directly in the browser, using Transformers.js and WebGPU. Source code for the demo is here.

It worked for me just now in Chrome; in Firefox and Safari I got a “WebGPU is not supported by this browser” error message.

Link 2024-09-30 Conflating Overture Places Using DuckDB, Ollama, Embeddings, and More:

Drew Breunig's detailed tutorial on "conflation" - combining different geospatial data sources by de-duplicating address strings such as RESTAURANT LOS ARCOS,3359 FOOTHILL BLVD,OAKLAND,94601 and LOS ARCOS TAQUERIA,3359 FOOTHILL BLVD,OAKLAND,94601.

Drew uses an entirely offline stack based around Python, DuckDB and Ollama and finds that a combination of H3 geospatial tiles and mxbai-embed-large embeddings (though other embedding models should work equally well) gets really good results.
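Drew's post covers the full pipeline; here's a toy Python sketch of just the matching step. It assumes each place already has a coarse location key (standing in for an H3 cell ID) and an embedding vector (standing in for mxbai-embed-large output via Ollama). The vectors, cell keys, the two extra places and the 0.9 threshold are all made up for illustration. Only records in the same cell are compared, which keeps the number of pairwise comparisons small.

```python
import math
from collections import defaultdict
from itertools import combinations


def cosine(a, b):
    # Cosine similarity between two equal-length vectors
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_duplicates(places, threshold=0.9):
    """places: list of (name, cell_key, embedding). Returns likely-duplicate name pairs."""
    by_cell = defaultdict(list)
    for name, cell, vec in places:
        by_cell[cell].append((name, vec))
    matches = []
    for members in by_cell.values():
        # Only compare places that fall in the same cell
        for (n1, v1), (n2, v2) in combinations(members, 2):
            if cosine(v1, v2) >= threshold:
                matches.append((n1, n2))
    return matches


places = [
    ("RESTAURANT LOS ARCOS,3359 FOOTHILL BLVD,OAKLAND,94601", "cell-a", [0.9, 0.1, 0.4]),
    ("LOS ARCOS TAQUERIA,3359 FOOTHILL BLVD,OAKLAND,94601", "cell-a", [0.85, 0.15, 0.45]),
    ("OAKLAND HARDWARE,100 MAIN ST,OAKLAND,94601", "cell-a", [0.1, 0.9, 0.2]),
    ("LOS ARCOS,99 ELSEWHERE RD,SOMEWHERE,00000", "cell-b", [0.9, 0.1, 0.4]),
]
print(find_duplicates(places))
```

The fourth record has an identical toy embedding to the first but sits in a different cell, so it is never compared: the location key acts as a cheap blocking step before the embedding comparison.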

Quote 2024-09-30

I listened to the whole 15-minute podcast this morning. It was, indeed, surprisingly effective. It remains somewhere in the uncanny valley, but not at all in a creepy way. Just more in a “this is a bit vapid and phony” way. [...] But ultimately the conversation has all the flavor of a bowl of unseasoned white rice.

Link 2024-09-30 Bop Spotter:

Riley Walz: "I installed a box high up on a pole somewhere in the Mission of San Francisco. Inside is a crappy Android phone, set to Shazam constantly, 24 hours a day, 7 days a week. It's solar powered, and the mic is pointed down at the street below."

Some details on how it works from Riley on Twitter:

The phone has a Tasker script running on loop (even if the battery dies, it’ll restart when it boots again)

Script records 10 min of audio in airplane mode, then comes out of airplane mode and connects to nearby free WiFi.

Then uploads the audio file to my server, which splits it into 15 sec chunks that slightly overlap. Passes each to Shazam’s API (not public, but someone reverse engineered it and made a great Python package). Phone only uses 2% of power every hour when it’s not charging!
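The server-side chunking Riley describes is easy to sketch. This Python function computes start/end offsets for 15-second windows over a 10-minute recording. Riley only says the chunks "slightly overlap", so the 2-second overlap here is my assumption, and the function name is hypothetical.

```python
def chunk_offsets(total_seconds: float, chunk: float = 15.0, overlap: float = 2.0):
    """Return (start, end) pairs in seconds covering the whole recording."""
    if overlap >= chunk:
        raise ValueError("overlap must be shorter than the chunk length")
    step = chunk - overlap
    offsets = []
    start = 0.0
    while start < total_seconds:
        end = min(start + chunk, total_seconds)
        offsets.append((start, end))
        if end >= total_seconds:
            break
        start += step
    return offsets


windows = chunk_offsets(10 * 60)
print(len(windows), windows[:2], windows[-1])  # 46 [(0.0, 15.0), (13.0, 28.0)] (585.0, 600.0)
```

The overlap means a song that straddles a boundary still gets at least one window with a little extra context on either side.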

Quote 2024-10-01

[Reddit is] mostly ported over entirely to Lit now. There are a few straggling pages that we're still working on, but most of what everyday typical users see and use is now entirely Lit based. This includes both logged out and logged in experiences.

Link 2024-10-01 Whisper large-v3-turbo model:

It’s OpenAI DevDay today. Last year they released a whole stack of new features, including GPT-4 vision and GPTs and their text-to-speech API, so I’m intrigued to see what they release today (I’ll be at the San Francisco event).

Looks like they got an early start on the releases, with the first new Whisper model since November 2023.

Whisper Turbo is a new speech-to-text model that fits the continued trend of distilled models getting smaller and faster while maintaining the same quality as larger models.

large-v3-turbo is 809M parameters - slightly larger than the 769M medium but significantly smaller than the 1550M large. OpenAI claim it's 8x faster than large and requires 6GB of VRAM compared to 10GB for the larger model.

The model file is a 1.6GB download. OpenAI continue to make Whisper (both code and model weights) available under the MIT license.
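Those numbers hang together: assuming the weights are stored as 16-bit floats (2 bytes per parameter, my assumption about the file format), 809M parameters works out to about 1.6GB, matching the download size. A quick Python check:

```python
BYTES_PER_PARAM = 2  # assumption: fp16 weights, 2 bytes each


def fp16_size_gb(params: float) -> float:
    """Approximate weight-file size in GB (10**9 bytes)."""
    return params * BYTES_PER_PARAM / 1e9


for name, params in [("medium", 769e6), ("large-v3-turbo", 809e6), ("large", 1550e6)]:
    print(f"{name}: ~{fp16_size_gb(params):.2f} GB")
```

This prints roughly 1.54GB, 1.62GB and 3.10GB respectively. The VRAM figures are higher than these file sizes because running the model also needs memory for activations and decoding state.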

It’s already supported in both Hugging Face transformers - live demo here - and in mlx-whisper on Apple Silicon, via Awni Hannun:

import mlx_whisper

print(mlx_whisper.transcribe(
    "path/to/audio",
    path_or_hf_repo="mlx-community/whisper-turbo"
)["text"])

Awni reports:

Transcribes 12 minutes in 14 seconds on an M2 Ultra (~50X faster than real time).

Link 2024-10-02 Ethical Applications of AI to Public Sector Problems:

Jacob Kaplan-Moss developed this model a few years ago (before the generative AI rush) while working with public-sector startups and is publishing it now. He starts by outright dismissing the snake-oil infested field of “predictive” models:

It’s not ethical to predict social outcomes — and it’s probably not possible. Nearly everyone claiming to be able to do this is lying: their algorithms do not, in fact, make predictions that are any better than guesswork. […] Organizations acting in the public good should avoid this area like the plague, and call bullshit on anyone making claims of an ability to predict social behavior.

Jacob then differentiates assistive AI and automated AI. Assistive AI helps human operators process and consume information, while leaving the human to take action on it. Automated AI acts upon that information without human oversight.

His conclusion: yes to assistive AI, and no to automated AI:

All too often, AI algorithms encode human bias. And in the public sector, failure carries real life or death consequences. In the private sector, companies can decide that a certain failure rate is OK and let the algorithm do its thing. But when citizens interact with their governments, they have an expectation of fairness, which, because AI judgement will always be fallible, it cannot offer.

On Mastodon I said to Jacob:

I’m heavily opposed to anything where decisions with consequences are outsourced to AI, which I think fits your model very well

(somewhat ironic that I wrote this message from the passenger seat of my first ever Waymo trip, and this weird car is making extremely consequential decisions dozens of times a second!)

Which sparked an interesting conversation about why life-or-death decisions made by self-driving cars feel different from decisions about social services. My take on that:

I think it’s about judgement: the decisions I care about are far more deep and non-deterministic than “should I drive forward or stop”.

Where there’s moral ambiguity, I want a human to own the decision both so there’s a chance for empathy, and also for someone to own the accountability for the choice.

That idea of ownership and accountability for decision making feels critical to me. A giant black box of matrix multiplication cannot take accountability for “decisions” that it makes.
