LLM now provides tools for working with embeddings

Plus: Joel Kang's "Practical guide to deploying Large Language Models Cheap, Good *and* Fast"

In this newsletter:

LLM now provides tools for working with embeddings

Plus 3 links and 1 TIL

LLM now provides tools for working with embeddings - 2023-09-04

LLM is my Python library and command-line tool for working with language models. I just released LLM 0.9 with a new set of features that extend LLM to provide tools for working with embeddings.

This is a long post with a lot of theory and background. If you already know what embeddings are, here's a TLDR you can try out straight away:

# Install LLM

pip install llm

# If you already installed via Homebrew/pipx you can upgrade like this:

llm install -U llm

# Install the llm-sentence-transformers plugin

llm install llm-sentence-transformers

# Install the all-MiniLM-L6-v2 embedding model

llm sentence-transformers register all-MiniLM-L6-v2

# Generate and store embeddings for every README.md in your home directory, recursively

llm embed-multi readmes \

--model sentence-transformers/all-MiniLM-L6-v2 \

--files ~/ '**/README.md'

# Add --store to store the text content as well

# Run a similarity search for "sqlite" against those embeddings

llm similar readmes -c sqliteFor everyone else, read on and the above example should hopefully all make sense.

Embeddings

Embeddings are a fascinating concept within the larger world of language models.

I explained embeddings in my recent talk, Making Large Language Models work for you. The relevant section of the slides and transcript is here, or you can jump to that section on YouTube.

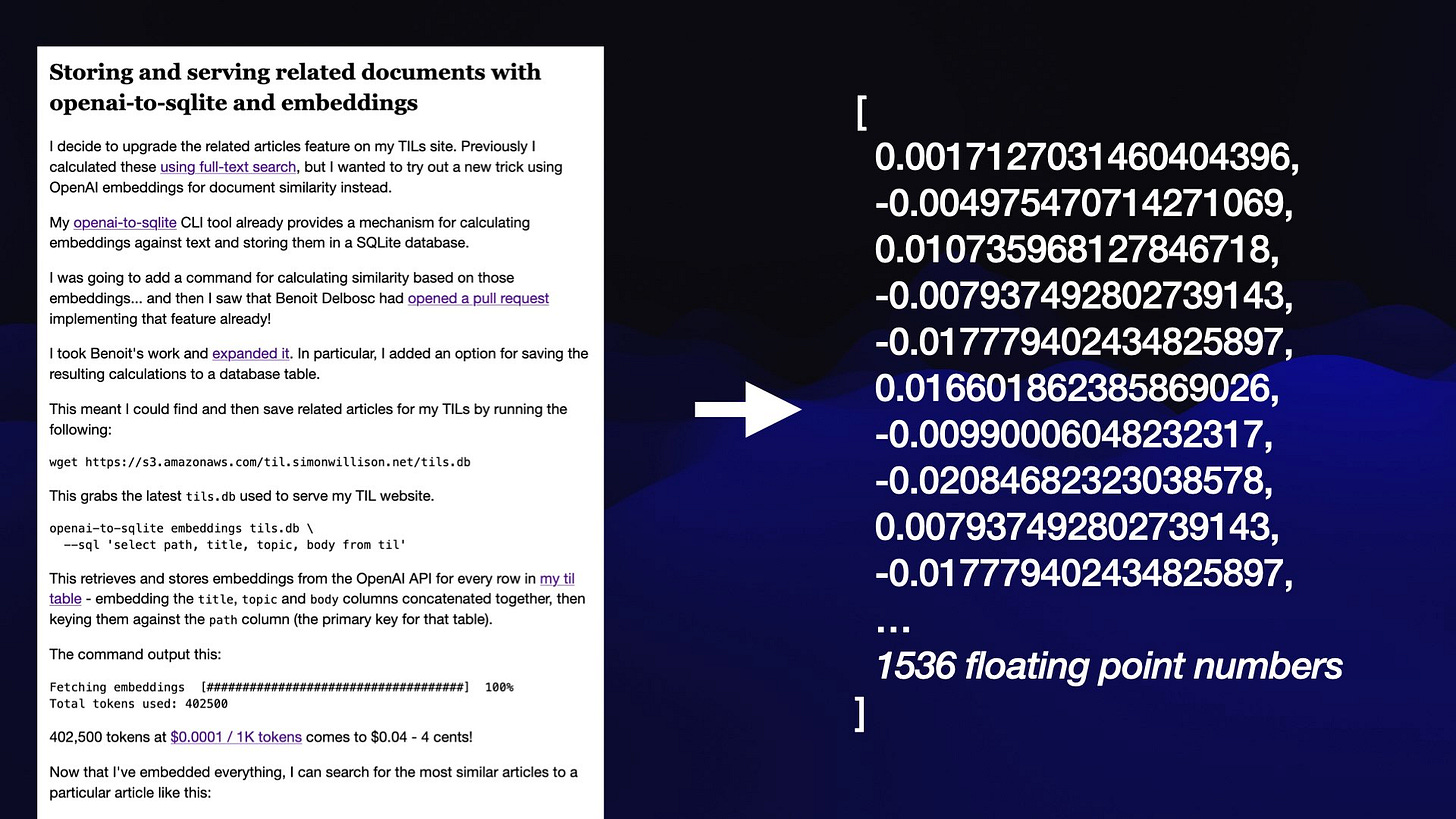

An embedding model lets you take a string of text - a word, sentence, paragraph or even a whole document - and turn that into an array of floating point numbers called an embedding vector.

A model will always produce the same length of array - 1,536 numbers for the OpenAI embedding model, 384 for all-MiniLM-L6-v2 - but the array itself is inscrutable. What are you meant to do with it?

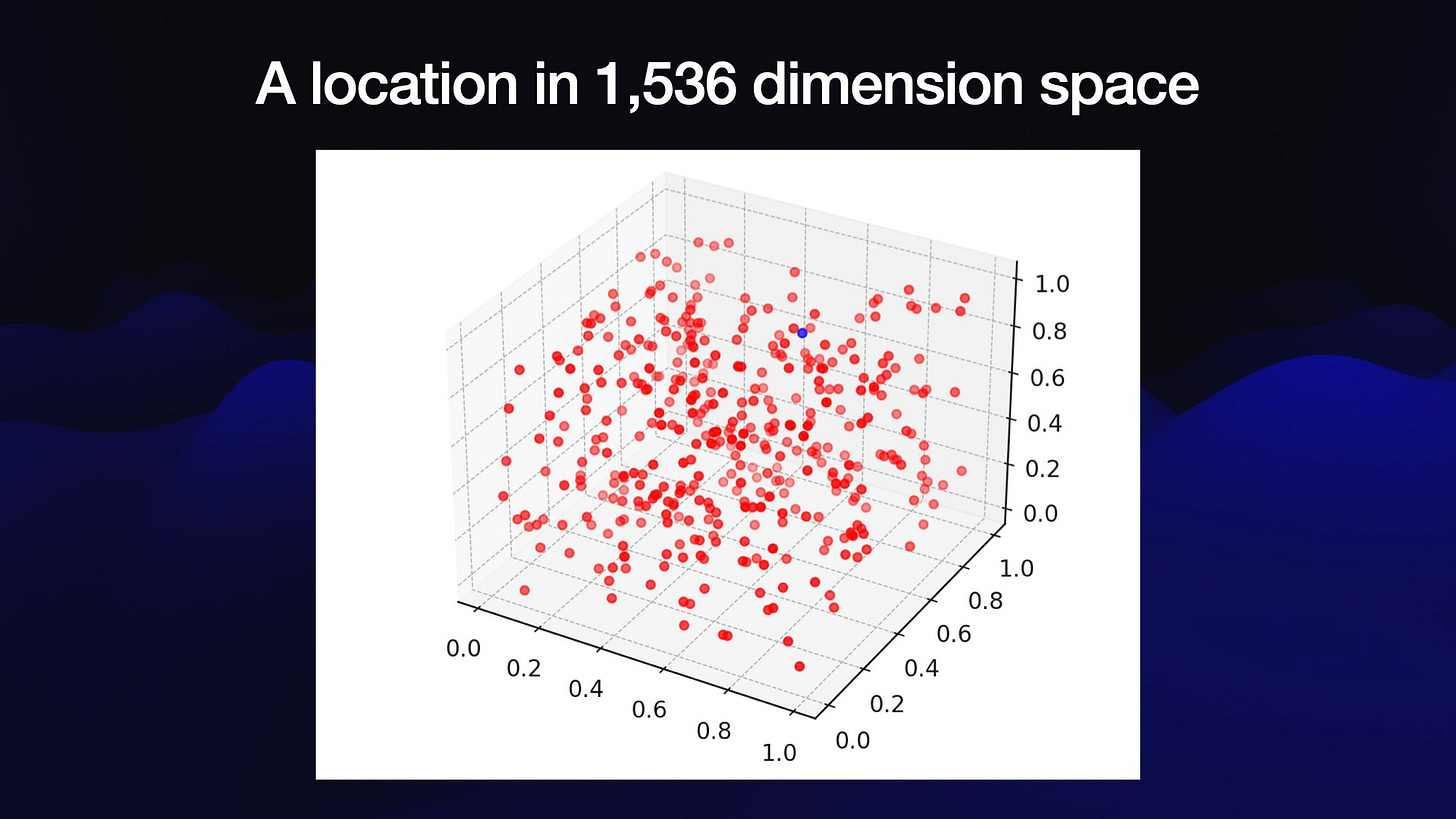

The answer is that you can compare them. I like to think of an embedding vector as a location in 1,536-dimensional space. The distance between two vectors is a measure of how semantically similar they are in meaning, at least according to the model that produced them.

"One happy dog" and "A playful hound" will end up close together, even though they don't share any keywords. The embedding vector represents the language model's interpretation of the meaning of the text.

Things you can do with embeddings include:

Find related items. I use this on my TIL site to display related articles, as described in Storing and serving related documents with openai-to-sqlite and embeddings.

Build semantic search. As shown above, an embeddings-based search engine can find content relevant to the user's search term even if none of the keywords match.

Implement retrieval augmented generation - the trick where you take a user's question, find relevant documentation in your own corpus and use that to get an LLM to spit out an answer. More on that here.

Clustering: you can find clusters of nearby items and identify patterns in a corpus of documents.

LLM's new embedding features

My goal with LLM is to provide a plugin-driven abstraction around a growing collection of language models. I want to make installing, using and comparing these models as easy as possible.

The new release adds several command-line tools for working with embeddings, plus a new Python API for working with embeddings in your own code.

It also adds support for installing additional embedding models via plugins. I've released one plugin for this so far: llm-sentence-transformers, which adds support for new models based on the sentence-transformers library.

The example above shows how to use sentence-transformers. LLM also supports API-driven access to the OpenAI ada-002 model.

Here's how to embed some text using ada-002, assuming you have installed LLM already:

# Set your OpenAI API key

llm keys set openai

# <paste key here>

# Embed some text

llm embed -m ada-002 -c "Hello world"This will output a huge JSON list of floating point numbers to your terminal. You can add -f base64 (or -f hex) to get that back in a different format, though none of these outputs are instantly useful.

Embeddings are much more interesting when you store them.

LLM already uses SQLite to store prompts and responses. It was a natural fit to use SQLite to store embeddings as well.

Embedding collections

LLM 0.9 introduces the concept of a collection of embeddings. A collection has a name - like readmes - and contains a set of embeddings, each of which has an ID and an embedding vector.

All of the embeddings in a collection are generated by the same model, to ensure they can be compared with each others.

The llm embed command can store the vector in the database instead of returning it to the console. Pass it the name of an existing (or to-be-created) collection and the ID to use to store the embedding.

Here we'll store the embedding for the phrase "Hello world" in a collection called phrases with the ID hello, using that ada-002 embedding model:

llm embed phrases hello -m ada-002 -c "Hello world"Future phrases can be added without needing to specify the model again, since it is remembered by the collection:

llm embed phrases goodbye -c "Goodbye world"The llm embed-db collections shows a list of collections:

phrases: ada-002

2 embeddings

readmes: sentence-transformers/all-MiniLM-L6-v2

16796 embeddingsThe data is stored in a SQLite embeddings table with the following schema:

CREATE TABLE [collections] (

[id] INTEGER PRIMARY KEY,

[name] TEXT,

[model] TEXT

);

CREATE TABLE "embeddings" (

[collection_id] INTEGER REFERENCES [collections]([id]),

[id] TEXT,

[embedding] BLOB,

[content] TEXT,

[content_hash] BLOB,

[metadata] TEXT,

[updated] INTEGER,

PRIMARY KEY ([collection_id], [id])

);

CREATE UNIQUE INDEX [idx_collections_name]

ON [collections] ([name]);

CREATE INDEX [idx_embeddings_content_hash]

ON [embeddings] ([content_hash]);By default this is the SQLite database at the location revealed by llm embed-db path, but you can pass --database my-embeddings.db to various LLM commands to use a different database.

Each embedding vector is stored as a binary BLOB in the embedding column, consisting of those floating point numbers packed together as 32 bit floats.

The content_hash column contains a MD5 hash of the content. This helps avoid re-calculating the embedding (which can cost actual money for API-based embedding models like ada-002) unless the content has changed.

The content column is usually null, but can contain a copy of the original text content if you pass the --store option to the llm embed command.

metadata can contain a JSON object with metadata, if you pass --metadata '{"json": "goes here"}.

You don't have to pass content using -c - you can instead pass a file path using the -i/--input option:

llm embed docs llm-setup -m ada-002 -i llm/docs/setup.mdOr pipe things to standard input like this:

cat llm/docs/setup.md | llm embed docs llm-setup -m ada-002 -i -Embedding similarity search

Once you've built a collection, you can search for similar embeddings using the llm similar command.

The -c "term" option will embed the text you pass in using the embedding model for the collection and use that as the comparison vector:

llm similar readmes -c sqliteYou can also pass the ID of an object in that collection to use that embedding instead. This gets you related documents, for example:

llm similar readmes sqlite-utils/README.mdThe output from this command is currently newline-delimited JSON.

Embedding in bulk

The llm embed command embeds a single string at a time. llm embed-multi is much more powerful: you can feed a CSV or JSON file, a SQLite database or even have it read from a directory of files in order to embed multiple items at once.

Many embeddings models are optimized for batch operations, so embedding multiple items at a time can provide a significant speed boost.

The embed-multi command is described in detail in the documentation. Here are a couple of fun things you can do with it.

First, I'm going to create embeddings for every single one of my Apple Notes.

My apple-notes-to-sqlite tool can export Apple Notes to a SQLite database. I'll run that first:

apple-notes-to-sqlite notes.dbThis took quite a while to run on my machine and generated a 828M SQLite database containing 6,462 records!

Next, I'm going to embed the content of all of those notes using the sentence-transformers/all-MiniLM-L6-v2 model:

llm embed-multi notes -d notes.db --sql 'select id, title, body from notesThis took around 15 minutes to run, and increased the size of my database by 13MB.

The --sql option here specifies a SQL query. The first column must be an id, then any subsequent columns will be concatenated together to form the content to embed.

In this case the embeddings are written back to the same notes.db database that the content came from.

And now I can run embedding similarity operations against all of my Apple notes!

llm similar notes -d notes.db -c 'ideas for blog posts'Embedding files in a directory

Let's revisit the example from the top of this post. In this case, I'm using the --files option to search for files on disk and embed each of them:

llm embed-multi readmes \

--model sentence-transformers/all-MiniLM-L6-v2 \

--files ~/ '**/README.md'The --files option takes two arguments: a path to a directory and a pattern to match against filenames. In this case I'm searching my home directory recursively for any files named README.md.

Running this command gives me embeddings for all of my README.md files, which I can then search against like this:

llm similar readmes -c sqliteEmbeddings in Python

So far I've only covered the command-line tools. LLM 0.9 also introduces a new Python API for working with embeddings.

There are two aspects to this. If you just want to embed content and handle the resulting vectors yourself, you can use llm.get_embedding_model():

import llm

# This takes model IDs and aliases defined by plugins:

model = llm.get_embedding_model("sentence-transformers/all-MiniLM-L6-v2")

vector = model.embed("This is text to embed")vector will then be a Python list of floating point numbers.

You can serialize that to the same binary format that LLM uses like this:

binary_vector = llm.encode(vector)

# And to deserialize:

vector = llm.decode(binary_vector)The second aspect of the Python API is the llm.Collection class, for working with collections of embeddings. This example code is quoted from the documentation:

import sqlite_utils

import llm

# This collection will use an in-memory database that will be

# discarded when the Python process exits

collection = llm.Collection("entries", model_id="ada-002")

# Or you can persist the database to disk like this:

db = sqlite_utils.Database("my-embeddings.db")

collection = llm.Collection("entries", db, model_id="ada-002")

# You can pass a model directly using model= instead of model_id=

embedding_model = llm.get_embedding_model("ada-002")

collection = llm.Collection("entries", db, model=embedding_model)

# Store a string in the collection with an ID:

collection.embed("hound", "my happy hound")

# Or to store content and extra metadata:

collection.embed(

"hound",

"my happy hound",

metadata={"name": "Hound"},

store=True

)

# Or embed things in bulk:

collection.embed_multi(

[

("hound", "my happy hound"),

("cat", "my dissatisfied cat"),

],

# Add this to store the strings in the content column:

store=True,

)As with everything else in LLM, the goal is that anything you can do with the CLI can be done with the Python API, and vice-versa.

Clustering with llm-cluster

Another interesting application of embeddings is that you can use them to cluster content - identifying patterns in a corpus of documents.

I've started exploring this area with a new plugin, called llm-cluster.

You can install it like this:

llm install llm-clusterLet's create a new collection using data pulled from GitHub. I'm going to import all of the LLM issues from the GitHub API, using my paginate-json tool:

paginate-json 'https://api.github.com/repos/simonw/llm/issues?state=all&filter=all' \

| jq '[.[] | {id: .id, title: .title}]' \

| llm embed-multi llm-issues - \

--database issues.db \

--model sentence-transformers/all-MiniLM-L6-v2 \

--storeRunning this gives me a issues.db SQLite database with 218 embeddings contained in a collection called llm-issues.

Now let's try out the llm-cluster command, requesting ten clusters from that collection:

llm cluster llm-issues --database issues.db 10The output from this command, truncated, looks like this:

[

{

"id": "0",

"items": [

{

"id": "1784149135",

"content": "Tests fail with pydantic 2"

},

{

"id": "1837084995",

"content": "Allow for use of Pydantic v1 as well as v2."

},

{

"id": "1857942721",

"content": "Get tests passing against Pydantic 1"

}

]

},

{

"id": "1",

"items": [

{

"id": "1724577618",

"content": "Better ways of storing and accessing API keys"

},

{

"id": "1772024726",

"content": "Support for `-o key value` options such as `temperature`"

},

{

"id": "1784111239",

"content": "`--key` should be used in place of the environment variable"

}

]

},

{

"id": "8",

"items": [

{

"id": "1835739724",

"content": "Bump the python-packages group with 1 update"

},

{

"id": "1848143453",

"content": "Python library support for adding aliases"

},

{

"id": "1857268563",

"content": "Bump the python-packages group with 1 update"

}

]

}

]These look pretty good! But wouldn't it be neat if we had a snappy title for each one?

The --summary option can provide exactly that, by piping the members of each cluster through a call to another LLM in order to generate a useful summary.

llm cluster llm-issues --database issues.db 10 --summaryThis uses gpt-3.5-turbo to generate a summary for each cluster, with this default prompt:

Short, concise title for this cluster of related documents.

The results I got back are pretty good, including:

Template Storage and Management Improvements

Package and Dependency Updates and Improvements

Adding Conversation Mechanism and Tools

I tried the same thing using a Llama 2 model running on my own laptop, with a custom prompt:

llm cluster llm-issues --database issues.db 10 \

--summary --model mlc-chat-Llama-2-13b-chat-hf-q4f16_1 \

--prompt 'Concise title for this cluster of related documents, just return the title'

I didn't quite get what I wanted! Llama 2 is proving a lot harder to prompt, so each cluster came back with something that looked like this:

Sure! Here's a concise title for this cluster of related documents:

"Design Improvements for the Neat Prompt System"

This title captures the main theme of the documents, which is to improve the design of the Neat prompt system. It also highlights the focus on improving the system's functionality and usability

llm-cluster only took a few hours to throw together, which I'm seeing as a positive indicator that the LLM library is developing in the right direction.

Future plans

The two future features I'm most excited about are indexing and chunking.

Indexing

The llm similar command and collection.similar() Python method currently use effectively the slowest brute force approach possible: calculate a cosine difference between input vector and every other embedding in the collection, then sort the results.

This works fine for collections with a few hundred items, but will start to suffer for collections of 100,000 or more.

There are plenty of potential ways of speeding this up: you can run a vector index like FAISS or hnswlib, use a database extension like sqlite-vss or pgvector, or turn to a hosted vector database like Pinecone or Milvus.

With this many potential solutions, the obvious answer for LLM is to address this with plugins.

I'm still thinking through the details, but the core idea is that users should be able to define an index against one or more collections, and LLM will then coordinate updates to that index. These may not happen in real-time - some indexes can be expensive to rebuild, so there are benefits to applying updates in batches.

I experimented with FAISS earlier this year in datasette-faiss. That's likely to be the base for my first implementation.

The embeddings table has an updated timestamp column to support this use-case - so indexers can run against just the items that have changed since the last indexing run.

Follow issue #216 for updates on this feature.

Chunking

When building an embeddings-based search engine, the hardest challenge is deciding how best to "chunk" the documents.

Users will type in short phrases or questions. The embedding for a four word question might not necessarily map closely to the embedding of a thousand word article, even if the article itself should be a good match for that query.

To maximize the chance of returning the most relevant content, we need to be smarter about what we embed.

I'm still trying to get a good feeling for the strategies that make sense here. Some that I've seen include:

Split a document up into fixed length shorter segments.

Split into segments but including a ~10% overlap with the previous and next segments, to reduce problems caused by words and sentences being split in a way that disrupts their semantic meaning.

Splitting by sentence, using NLP techniques.

Splitting into higher level sections, based on things like document headings.

Then there are more exciting, LLM-driven approaches:

Generate an LLM summary of a document and embed that.

Ask an LLM "What questions are answered by the following text?" and then embed each of the resulting questions!

It's possible to try out these different techniques using LLM already: write code that does the splitting, then feed the results to Collection.embed_multi() or llm embed-multi.

But... it would be really cool if LLM could split documents for you - with the splitting techniques themselves defined by plugins, to make it easy to try out new approaches.

Get involved

It should be clear by now that the potential scope of the LLM project is enormous. I'm trying to use plugins to tie together an enormous and rapidly growing ecosystem of models and techniques into something that's as easy for people to work with and build on as possible.

There are plenty of ways you can help!

Join the #llm Discord to talk about the project.

Try out plugins and run different models with them. There are 12 plugins already, and several of those can be used to run dozens if not hundreds of models (llm-mlc, llm-gpt4all and llm-llama-cpp in particular). I've hardly scratched the surface of these myself, and I'm testing exclusively on Apple Silicon. I'm really keen to learn more about which models work well, which models don't and which perform the best on different hardware.

Try building a plugin for a new model. My dream here is that every significant Large Language Model will have an LLM plugin that makes it easy to install and use.

Build stuff using LLM and let me know what you've built. Nothing fuels an open source project more than stories of cool things people have built with it.

Link 2023-08-30 excalidraw.com: Really nice browser-based editor for simple diagrams using a pleasing hand-sketched style, with the ability to export them as SVG or PNG.

TIL 2023-08-31 Remember to commit when using datasette.execute_write_fn():

I was writing some code for datasette-auth-tokens that used db.execute_write_fn() like this: …

Link 2023-09-04 A practical guide to deploying Large Language Models Cheap, Good *and* Fast: Joel Kang's extremely comprehensive notes on what he learned trying to run Vicuna-13B-v1.5 on an affordable cloud GPU server (a T4 at $0.615/hour). The space is in so much flux right now - Joel ended up using MLC but the best option could change any minute.

Vicuna 13B quantized to 4-bit integers needed 7.5GB of the T4's 16GB of VRAM, and returned tokens at 20/second.

An open challenge running MLC right now is around batching and concurrency: "I did try making 3 concurrent requests to the endpoint, and while they all stream tokens back and the server doesn’t OOM, the output of all 3 streams seem to actually belong to a single prompt."

Link 2023-09-04 Wikipedia search-by-vibes through millions of pages offline: Really cool demo by Lee Butterman, who built embeddings of 2 million Wikipedia pages and figured out how to serve them directly to the browser, where they are used to implement "vibes based" similarity search returning results in 250ms. Lots of interesting details about how he pulled this off, using Arrow as the file format and ONNX to run the model in the browser.