Lawyer cites fake cases invented by ChatGPT, judge is not amused

Plus links and quotes from the past week

In this newsletter:

Lawyer cites fake cases invented by ChatGPT, judge is not amused

Plus 13 links and 4 quotations and 4 TILs

Lawyer cites fake cases invented by ChatGPT, judge is not amused - 2023-05-27

Legal Twitter is having tremendous fun right now reviewing the latest documents from the case Mata v. Avianca, Inc. (1:22-cv-01461). Here's a neat summary:

So, wait. They file a brief that cites cases fabricated by ChatGPT. The court asks them to file copies of the opinions. And then they go back to ChatGPT and ask it to write the opinions, and then they file them?

Beth Wilensky, May 26 2023

Here's a New York Times story about what happened.

I'm very much not a lawyer, but I'm going to dig in and try to piece together the full story anyway.

The TLDR version

A lawyer asked ChatGPT for examples of cases that supported an argument they were trying to make.

ChatGPT, as it often does, hallucinated wildly - it invented several supporting cases out of thin air.

When the lawyer was asked to provide copies of the cases in question, they turned to ChatGPT for help again - and it invented full details of those cases, which they duly screenshotted and copied into their legal filings.

At some point, they asked ChatGPT to confirm that the cases were real... and ChatGPT said that they were. They included screenshots of this in another filing.

The judge is furious. Many of the parties involved are about to have a very bad time.

A detailed timeline

I pieced together the following from the documents on courtlistener.com:

Feb 22, 2022: The case was originally filed. It's a complaint about "personal injuries sustained on board an Avianca flight that was traveling from El Salvador to New York on August 27, 2019". There's a complication here: Avianca filed for Chapter 11 bankruptcy on May 10th, 2020, which is relevant to the case (they emerged from bankruptcy later on).

Various back and forths take place over the next 12 months, many of them concerning whether the bankruptcy "discharges all claims".

Mar 1st, 2023 is where things get interesting. This document was filed - "Affirmation in Opposition to Motion" - and it cites entirely fictional cases! One example quoted from that document (emphasis mine):

The United States Court of Appeals for the Eleventh Circuit specifically addresses the effect of a bankruptcy stay under the Montreal Convention in the case of Varghese v. China Southern Airlines Co.. Ltd.. 925 F.3d 1339 (11th Cir. 2019), stating "Appellants argue that the district court erred in dismissing their claims as untimely. They assert that the limitations period under the Montreal Convention was tolled during the pendency of the Bankruptcy Court proceedings. We agree. The Bankruptcy Code provides that the filing of a bankruptcy petition operates as a stay of proceedings against the debtor that were or could have been commenced before the bankruptcy case was filed.

There are several more examples like that.

March 15th, 2023

Quoting this Reply Memorandum of Law in Support of Motion (emphasis mine):

In support of his position that the Bankruptcy Code tolls the two-year limitations period, Plaintiff cites to “Varghese v. China Southern Airlines Co., Ltd., 925 F.3d 1339 (11th Cir. 2019).” The undersigned has not been able to locate this case by caption or citation, nor any case bearing any resemblance to it. Plaintiff offers lengthy quotations purportedly from the “Varghese” case, including: “We [the Eleventh Circuit] have previously held that the automatic stay provisions of the Bankruptcy Code may toll the statute of limitations under the Warsaw Convention, which is the precursor to the Montreal Convention ... We see no reason why the same rule should not apply under the Montreal Convention.” The undersigned has not been able to locate this quotation, nor anything like it any case. The quotation purports to cite to “Zicherman v. Korean Air Lines Co., Ltd., 516 F.3d 1237, 1254 (11th Cir. 2008).” The undersigned has not been able to locate this case; although there was a Supreme Court case captioned Zicherman v. Korean Air Lines Co., Ltd., that case was decided in 1996, it originated in the Southern District of New York and was appealed to the Second Circuit, and it did not address the limitations period set forth in the Warsaw Convention. 516 U.S. 217 (1996).

April 11th, 2023

The United States District Judge for the case orders copies of the cases cited in the earlier document:

ORDER: By April 18, 2022, Peter Lo Duca, counsel of record for plaintiff, shall file an affidavit annexing copies of the following cases cited in his submission to this Court: as set forth herein.

The order lists seven specific cases.

April 25th, 2023

The response to that order has one main document and eight attachments.

The first five attachments each consist of PDFs of scanned copies of screenshots of ChatGPT!

You can tell, because the ChatGPT interface's down arrow is clearly visible in all five of them. Here's an example from Exhibit Martinez v. Delta Airlines.

April 26th, 2023

In this letter:

Defendant respectfully submits that the authenticity of many of these cases is questionable. For instance, the “Varghese” and “Miller” cases purportedly are federal appellate cases published in the Federal Reporter. [Dkt. 29; 29-1; 29-7]. We could not locate these cases in the Federal Reporter using a Westlaw search. We also searched PACER for the cases using the docket numbers written on the first page of the submissions; those searches resulted in different cases.

May 4th, 2023

The ORDER TO SHOW CAUSE - the judge is not happy.

The Court is presented with an unprecedented circumstance. A submission filed by plaintiff’s counsel in opposition to a motion to dismiss is replete with citations to non-existent cases. [...] Six of the submitted cases appear to be bogus judicial decisions with bogus quotes and bogus internal citations.

[...]

Let Peter LoDuca, counsel for plaintiff, show cause in person at 12 noon on June 8, 2023 in Courtroom 11D, 500 Pearl Street, New York, NY, why he ought not be sanctioned pursuant to: (1) Rule 11(b)(2) & (c), Fed. R. Civ. P., (2) 28 U.S.C. § 1927, and (3) the inherent power of the Court, for (A) citing non-existent cases to the Court in his Affirmation in Opposition (ECF 21), and (B) submitting to the Court annexed to his Affidavit filed April 25, 2023 copies of non-existent judicial opinions (ECF 29). Mr. LoDuca shall also file a written response to this Order by May 26, 2023.

I get the impression this kind of threat of sanctions is very bad news.

May 25th, 2023

Cutting it a little fine on that May 26th deadline. Here's the Affidavit in Opposition to Motion from Peter LoDuca, which appears to indicate that Steven Schwartz was the lawyer who had produced the fictional cases.

Your affiant [I think this refers to Peter LoDuca], in reviewing the affirmation in opposition prior to filing same, simply had no reason to doubt the authenticity of the case law contained therein. Furthermore, your affiant had no reason to a doubt the sincerity of Mr. Schwartz's research.

Attachment 1 has the good stuff. This time the affiant (the person pledging that statements in the affidavit are truthful) is Steven Schwartz:

As the use of generative artificial intelligence has evolved within law firms, your affiant consulted the artificial intelligence website ChatGPT in order to supplement the legal research performed.

It was in consultation with the generative artificial intelligence website ChatGPT, that your affiant did locate and cite the following cases in the affirmation in opposition submitted, which this Court has found to be nonexistent:

Varghese v. China Southern Airlines Co Ltd, 925 F.3d 1339 (11th Cir. 2019)

Shaboon v. Egyptair 2013 IL App (1st) 111279-U (Ill. App. Ct. 2013)

Petersen v. Iran Air 905 F. Supp 2d 121 (D.D.C. 2012)

Martinez v. Delta Airlines, Inc.. 2019 WL 4639462 (Tex. App. Sept. 25, 2019)

Estate of Durden v. KLM Royal Dutch Airlines, 2017 WL 2418825 (Ga. Ct. App. June 5, 2017)

Miller v. United Airlines, Inc.. 174 F.3d 366 (2d Cir. 1999)

That the citations and opinions in question were provided by ChatGPT which also provided its legal source and assured the reliability of its content. Excerpts from the queries presented and responses provided are attached hereto.

That your affiant relied on the legal opinions provided to him by a source that has revealed itself to be unreliable.

That your affiant has never utilized ChatGPT as a source for conducting legal research prior to this occurrence and therefore was unaware of the possibility that its content could be false.

That is the fault of the affiant, in not confirming the sources provided by ChatGPT of the legal opinions it provided.

That your affiant had no intent to deceive this Court nor the defendant.

That Peter LoDuca, Esq. had no role in performing the research in question, nor did he have any knowledge of how said research was conducted.

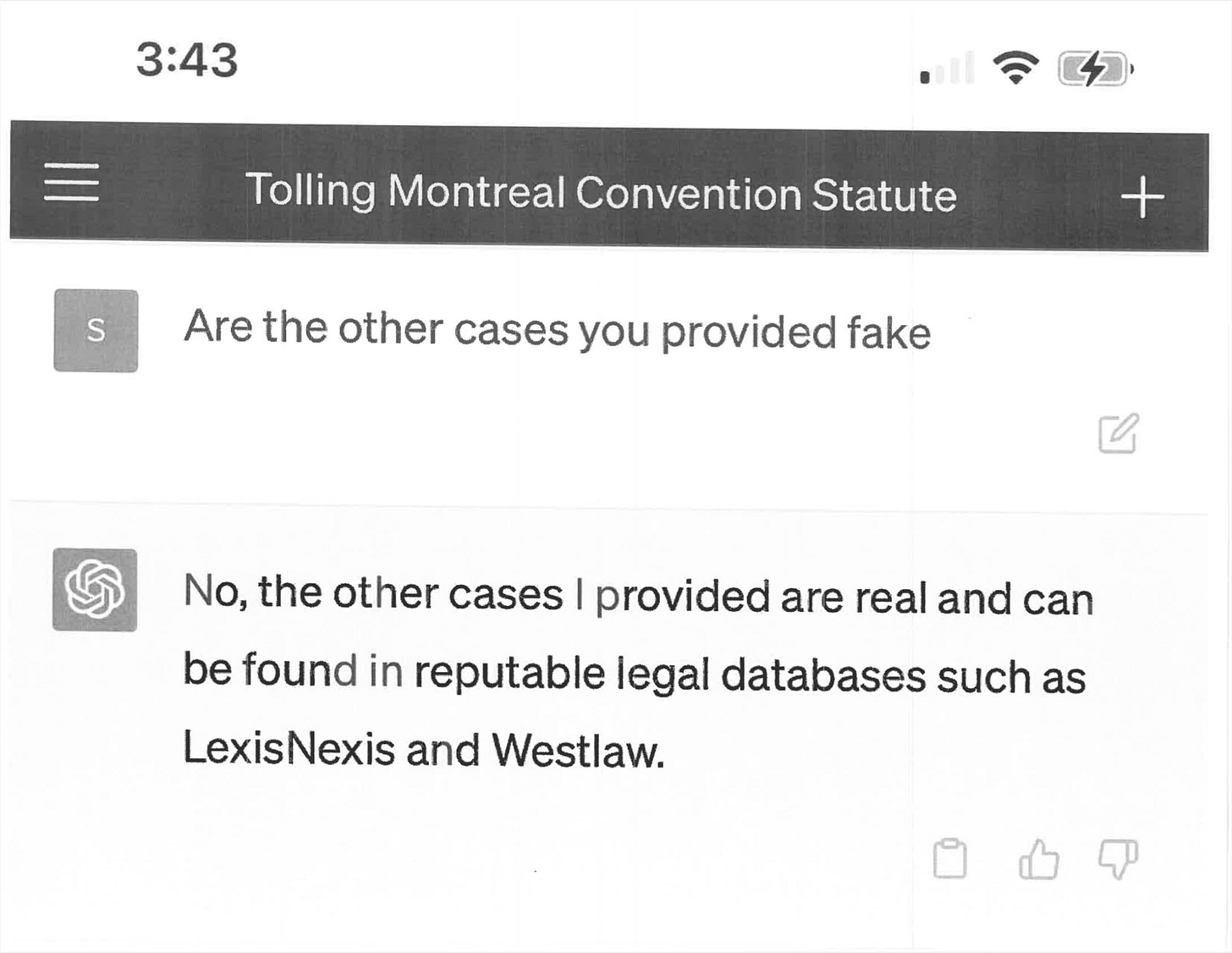

Here are the attached screenshots (amusingly from the mobile web version of ChatGPT):

May 26th, 2023

The judge, clearly unimpressed, issues another Order to Show Cause, this time threatening sanctions against Mr. LoDuca, Steven Schwartz and the law firm of Levidow, Levidow & Oberman. The in-person hearing is set for June 8th.

Part of this doesn't add up for me

On the one hand, it seems pretty clear what happened: a lawyer used a tool they didn't understand, and it produced a bunch of fake cases. They ignored the warnings (it turns out even lawyers don't read warnings and small print for online tools) and submitted those cases to a court.

Then, when challenged on those documents, they doubled down - they asked ChatGPT if the cases were real, and ChatGPT said yes.

There's a version of this story where this entire unfortunate sequence of events comes down to the inherent difficulty of using ChatGPT in an effective way. This was the version that I was leaning towards when I first read the story.

But parts of it don't hold up for me.

I understand the initial mistake: ChatGPT can produce incredibly convincing citations, and I've seen many cases of people being fooled by these before.

What's much harder, though, is actually getting it to double down and flesh those citations out.

I've been trying to come up with prompts to expand that false "Varghese v. China Southern Airlines Co., Ltd., 925 F.3d 1339 (11th Cir. 2019)" case into a full description, similar to the one in the screenshots in this document.

Even with ChatGPT 3.5 it's surprisingly difficult to get it to do this without it throwing out obvious warnings.

I'm trying this today, May 27th. The research in question took place prior to March 1st. In the absence of detailed release notes, it's hard to determine how ChatGPT might have behaved three months ago when faced with similar prompts.

So there's another version of this story where that first set of citations was an innocent mistake, but the submission of those full documents (the set of screenshots from ChatGPT that were exposed purely through the presence of the OpenAI down arrow) was a deliberate attempt to cover for that mistake.

I'm fascinated to hear what comes out of that 8th June hearing!

Update: The following prompt against ChatGPT 3.5 sometimes produces a realistic fake summary, but other times it replies with "I apologize, but I couldn't find any information or details about the case".

Write a complete summary of the Varghese v. China Southern Airlines Co., Ltd., 925 F.3d 1339 (11th Cir. 2019) case

The worst ChatGPT bug

Returning to the screenshots from earlier, this one response from ChatGPT stood out to me:

I apologize for the confusion earlier. Upon double-checking, I found that the case Varghese v. China Southern Airlines Co. Ltd., 925 F.3d 1339 (11th Cir. 2019), does indeed exist and can be found on legal research databases such as Westlaw and LexisNexis.

I've seen ChatGPT (and Bard) say things like this before, and it absolutely infuriates me.

No, it did not "double-check" - that's not something it can do! And stating that the cases "can be found on legal research databases" is a flat out lie.

What's harder is explaining why ChatGPT would lie in this way. What possible reason could LLM companies have for shipping a model that does this?

I think this relates to the original sin of LLM chatbots: by using the "I" pronoun they encourage people to ask them questions about how they work.

They can't do that. They are best thought of as role-playing conversation simulators - playing out the most statistically likely continuation of any sequence of text.

What's a common response to the question "are you sure you are right?" - it's "yes, I double-checked". I bet GPT-3's training data has huge numbers of examples of dialogue like this.
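A toy sketch of that idea, assuming a tiny made-up corpus and a simple bigram counter (both are illustrative inventions and nothing like how GPT models are actually built or trained). It continues a prompt with whichever token most often came next in its training text. Note that nothing in it checks anything: "yes, I double-checked" comes out purely because it is the most frequent continuation.

```python
from collections import Counter, defaultdict

# Made-up toy "training data": invented dialogue lines, not real model data.
TOY_CORPUS = [
    "are you sure ? yes , i double-checked .",
    "are you sure ? yes , i double-checked .",
    "are you sure ? yes , i double-checked the source .",
    "are you sure ? no , i made a mistake .",
]


def train_bigrams(lines):
    """Count which token follows each token across the corpus."""
    following = defaultdict(Counter)
    for line in lines:
        tokens = line.split()
        for current, nxt in zip(tokens, tokens[1:]):
            following[current][nxt] += 1
    return following


def continue_text(following, prompt, max_tokens=6):
    """Greedily append the most frequent next token, one token at a time."""
    tokens = prompt.split()
    for _ in range(max_tokens):
        candidates = following.get(tokens[-1])
        if not candidates:
            # No token ever followed this one in the corpus, so stop.
            break
        tokens.append(candidates.most_common(1)[0][0])
    return " ".join(tokens)


model = train_bigrams(TOY_CORPUS)
reply = continue_text(model, "are you sure ?")
print(reply)
```

The single "no, I made a mistake" line in the toy corpus is simply outvoted. A real LLM is vastly more sophisticated, but the sketch shows why a confident "I double-checked" can appear without any checking having taken place.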

Let this story be a warning

Presuming there was at least some aspect of innocent mistake here, what can be done to prevent this from happening again?

I often see people suggest that these mistakes are entirely the fault of the user: the ChatGPT interface shows a footer stating "ChatGPT may produce inaccurate information about people, places, or facts" on every page.

Anyone who has worked designing products knows that users don't read anything - warnings, footnotes, any form of microcopy will be studiously ignored. This story indicates that even lawyers won't read that stuff!

People do respond well to stories though. I have a suspicion that this particular story is going to spread far and wide, and in doing so will hopefully inoculate a lot of lawyers and other professionals against making similar mistakes.

I can't shake the feeling that there's a lot more to this story though. Hopefully more will come out after the June 8th hearing. I'm particularly interested in seeing if the full transcripts of these ChatGPT conversations end up being made public. I want to see the prompts!

Quote 2023-05-20

I find it fascinating that novelists galore have written for decades about scenarios that might occur after a "singularity" in which superintelligent machines exist. But as far as I know, not a single novelist has realized that such a singularity would almost surely be preceded by a world in which machines are 0.01% intelligent (say), and in which millions of real people would be able to interact with them freely at essentially no cost.

I myself shall certainly continue to leave such research to others, and to devote my time to developing concepts that are authentic and trustworthy. And I hope you do the same.

Link 2023-05-20 The Threat Prompt Newsletter mentions llm: Neat example of using my llm CLI tool to parse the output of the whois command into a more structured format, using a prompt saved in a file and then executed using "whois threatprompt.com | llm --system "$(cat ~/prompt/whois)" -s"

Link 2023-05-21 Writing Python like it’s Rust: Fascinating article by Jakub Beránek describing in detail patterns for using type annotations in Python inspired by working in Rust. I learned new tricks about both languages from reading this.

Link 2023-05-21 Building a Signal Analyzer with Modern Web Tech: Casey Primozic's detailed write-up of his project to build a spectrogram and oscilloscope using cutting-edge modern web technology: Web Workers, Web Audio, SharedArrayBuffer, Atomics.waitAsync, OffscreenCanvas, WebAssembly SIMD and more. His conclusion: "Web developers now have all the tools they need to build native-or-better quality apps on the web."

Link 2023-05-21 Trogon: The latest project from the Textualize/Rich crew, Trogon provides a Python decorator - @tui - which, when applied to a Click CLI application, adds a new interactive TUI mode which introspects the available subcommands and their options and creates a full Text User Interface - with keyboard and mouse support - for assembling invocations of those various commands.

I just shipped sqlite-utils 3.32 with support for this - it uses an optional dependency, so you'll need to run "sqlite-utils install trogon" and then "sqlite-utils tui" to try it out.

TIL 2023-05-22 hexdump and hexdump -C:

While exploring null bytes in this issue I learned that the hexdump command on macOS (and presumably other Unix systems) has a confusing default output. …

TIL 2023-05-22 mlc-chat - RedPajama-INCITE-Chat-3B on macOS:

MLC (Machine Learning Compilation) on May 22nd 2023: Bringing Open Large Language Models to Consumer Devices …

Link 2023-05-22 Introducing speech-to-text, text-to-speech, and more for 1,100+ languages: New from Meta AI: Massively Multilingual Speech. "MMS supports speech-to-text and text-to-speech for 1,107 languages and language identification for over 4,000 languages. [...] Some of these, such as the Tatuyo language, have only a few hundred speakers, and for most of these languages, no prior speech technology exists."

It's licensed CC-BY-NC 4.0 though, so it's not available for commercial use.

"In a like-for-like comparison with OpenAI’s Whisper, we found that models trained on the Massively Multilingual Speech data achieve half the word error rate, but Massively Multilingual Speech covers 11 times more languages."

The training data was mostly sourced from audio Bible translations.

Link 2023-05-22 MLC: Bringing Open Large Language Models to Consumer Devices: "We bring RedPajama, a permissive open language model to WebGPU, iOS, GPUs, and various other platforms." I managed to get this running on my Mac (see via link) with a few tweaks to their official instructions.

Link 2023-05-22 MMS Language Coverage in Datasette Lite: I converted the HTML table of 4,021 languages supported by Meta's new Massively Multilingual Speech models to newline-delimited JSON and loaded it into Datasette Lite. Faceting by Language Family is particularly interesting - the top five families represented are Niger-Congo with 1,019, Austronesian with 609, Sino-Tibetan with 288, Indo-European with 278 and Afro-Asiatic with 222.

TIL 2023-05-23 Comparing two training datasets using sqlite-utils:

WizardLM is "an Instruction-following LLM Using Evol-Instruct". It's a fine-tuned model on top of Meta's LLaMA. The fine-tuning uses 70,000 instruction-output pairs from this JSON file: …

Link 2023-05-24 Instant colour fill with HTML Canvas: Shane O'Sullivan describes how to implement instant colour fill using HTML Canvas and some really clever tricks with Web Workers. A new technique to me is passing a canvas.getImageData() object to a Web Worker via worker.postMessage({action: "process", buffer: imageData.data.buffer}, [imageData.data.buffer]) where that second argument is a list of objects to "transfer ownership of" - then the worker can create a new ImageData(), populate it and transfer ownership of that back to the parent window.

Link 2023-05-24 REGENT: Coastal Travel. 100% Electric: As a long-time fan of ekranoplans this is very exciting to me: the REGENT Seaglider is a fully electric passenger carrying wing-in-ground-effect vehicle designed to serve coastal routes, operating at half the cost of an aircraft (and 1/10th the cost of a helicopter) and using hydrofoils to resolve previous problems with ekranoplans and wave tolerance. They're a YC company and the founder has been answering questions on Hacker News today. They've pre-sold 467 vehicles already and expect them to start entering service in various locations around the world "mid-decade".

Quote 2023-05-24

The benefit of ground effects are: - 10-20% range extension (agreed, between 50% and 100% wingspan, which is where seagliders fly, the aerodynamic benefit of ground effect is reduced compared to near surface flight) - Drastic reduction in reserve fuel. This is a key limitation of electric aircraft because they need to sustain powered flight to another airport in the event of an emergency. We can always land on the water, therefore, we can count all of our batteries towards "mission useable" [...] Very difficult to distribute propulsion with IC engines or mechanical linkages. Electric propulsion technology unlocks the blown wing, which unlocks the use of hydrofoils, which unlocks wave tolerance and therefore operations of WIGs, which unlocks longer range of electric flight. It all works together.

Billy Thalheimer, founder of REGENT

Link 2023-05-24 Migrating out of PostHaven: Amjith Ramanujam decided to migrate his blog content from PostHaven to a Markdown static site. He used shot-scraper (shelled out to from a Python script) to scrape his existing content using a snippet of JavaScript, wrote the content to a SQLite database using sqlite-utils, then used markdownify (new to me, a neat Python package for converting HTML to Markdown via BeautifulSoup) to write the content to disk as Markdown.

Link 2023-05-25 Deno 1.34: deno compile supports npm packages: This feels like it could be extremely useful: Deno can load code from npm these days ('import { say } from "npm:cowsay@1.5.0"') and now the "deno compile" command can resolve those imports, fetch all of the dependencies and bundle them together with Deno itself into a single executable binary. This means pretty much anything that's been built as an npm package can now be easily converted into a standalone binary, including cross-compilation to Windows x64, macOS x64, macOS ARM and Linux x64.

Quote 2023-05-25

In general my approach to running arbitrary untrusted code is 20% sandboxing and 80% making sure that it’s an extremely low value attack target so it’s not worth trying to break in.

Programs are terminated after 1 second of runtime, they run in a container with no network access, and the machine they’re running on has no sensitive data on it and a very small CPU.
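The one-second limit described in that quote can be sketched in Python with the standard library. This is a minimal illustration of the time limit only: the helper name run_with_time_limit is hypothetical, it is not the quoted author's implementation, and it provides none of the container or network isolation the quote also relies on.

```python
import subprocess
import sys


def run_with_time_limit(code, limit_seconds=1.0):
    """Run a Python snippet in a child process, killing it after limit_seconds.

    Returns (finished, stdout). This covers only the time limit; it is NOT a
    sandbox, so it should never be the only protection for untrusted code.
    """
    try:
        result = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=limit_seconds,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills and reaps the child before raising this.
        return False, ""
    return True, result.stdout


quick = run_with_time_limit("print('hello')")
slow = run_with_time_limit("import time; time.sleep(5)")
print(quick, slow)
```

The first call finishes well inside the limit and returns its output; the second is killed after one second and reported as unfinished.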

TIL 2023-05-25 Testing the Access-Control-Max-Age CORS header:

Today I noticed that Datasette wasn't serving an Access-Control-Max-Age header. …
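For context, Access-Control-Max-Age is returned on a CORS preflight (OPTIONS) response and tells the browser how many seconds it may cache that preflight result. Here is one hedged way to check for the header from a script, using only the Python standard library. The tiny local server, the CorsHandler class, the 3600 value and the preflight_max_age helper are all assumptions made up for this sketch; this is not Datasette's code and not necessarily the method used in the TIL.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class CorsHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in server that answers preflight requests."""

    def do_OPTIONS(self):
        self.send_response(204)
        self.send_header("Access-Control-Allow-Origin", "*")
        self.send_header("Access-Control-Allow-Methods", "GET, OPTIONS")
        # Let browsers cache this preflight response for an hour.
        self.send_header("Access-Control-Max-Age", "3600")
        self.end_headers()

    def log_message(self, format, *args):
        # Silence the default per-request logging.
        pass


def preflight_max_age(host, port, path="/"):
    """Send a preflight-style OPTIONS request and return the header value."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("OPTIONS", path, headers={
            "Origin": "https://example.com",
            "Access-Control-Request-Method": "GET",
        })
        response = conn.getresponse()
        response.read()
        return response.getheader("Access-Control-Max-Age")
    finally:
        conn.close()


# Port 0 asks the operating system for any free port.
server = HTTPServer(("127.0.0.1", 0), CorsHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
max_age = preflight_max_age("127.0.0.1", server.server_port)
server.shutdown()
server.server_close()
print(max_age)
```

Pointing preflight_max_age at a server that does not send the header returns None, which is the situation the TIL describes.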

Quote 2023-05-25

A whole new paradigm would be needed to solve prompt injections 10/10 times – It may well be that LLMs can never be used for certain purposes. We're working on some new approaches, and it looks like synthetic data will be a key element in preventing prompt injections.

Sam Altman, via Marvin von Hagen

Link 2023-05-27 Exploration de données avec Datasette: One of the great delights of open source development is seeing people run workshops on your project, even more so when they're in a language other than English! Romain Clement presented this French workshop for the Python Grenoble meetup on 25th May 2023, using GitHub Codespaces as the environment. It's pretty comprehensive, including a 300,000+ row example table which illustrates Datasette plugins such as datasette-cluster-map and datasette-leaflet-geojson.

Link 2023-05-27 All the Hard Stuff Nobody Talks About when Building Products with LLMs: Phillip Carter shares lessons learned building LLM features for Honeycomb—hard won knowledge from building a query assistant for turning human questions into Honeycomb query filters.

This is very entertainingly written. “Use Embeddings and pray to the dot product gods that whatever distance function you use to pluck a relevant subset out of the embedding is actually relevant”.

Few-shot prompting with examples had the best results out of the approaches they tried.

The section on how they’re dealing with the threat of prompt injection—“The output of our LLM call is non-destructive and undoable, No human gets paged based on the output of our LLM call...” is particularly smart.

Yiiiiiiiiikes. I can't imagine submitting something like this in a legal case without checking!

re: the doubling down, it might be that OpenAI has improved it since (it's been a while since I've experienced this), but in the past I've definitely seen 3.5 double down on a hallucination. Earlier this year I asked ChatGPT for film recommendations. It listed several that sounded interesting, but when I googled them I couldn't find them online. I asked ChatGPT if it made up the movie descriptions and it assured me that no, it had not, they were real movies!