GPT-5 Thinking in ChatGPT (aka Research Goblin) is shockingly good at search

And why the $1.5bn Anthropic books settlement may count as a win for Anthropic

In this newsletter:

GPT-5 Thinking in ChatGPT (aka Research Goblin) is shockingly good at search

Plus 7 links and 3 quotations and 1 note

GPT-5 Thinking in ChatGPT (aka Research Goblin) is shockingly good at search - 2025-09-06

"Don't use chatbots as search engines" was great advice for several years... until it wasn't.

I wrote about how good OpenAI's o3 was at using its Bing-backed search tool back in April. GPT-5 feels even better.

I've started calling it my Research Goblin. I can assign a task to it, no matter how trivial or complex, and it will do an often unreasonable amount of work to search the internet and figure out an answer.

This is excellent for satisfying curiosity, and occasionally useful for more important endeavors as well.

I always run my searches by selecting the "GPT-5 Thinking" model from the model picker - in my experience this leads to far more comprehensive (albeit much slower) results.

Here are some examples from just the last couple of days. Every single one of them was run on my phone, usually while I was doing something else. Most of them were dictated using the iPhone voice keyboard, which I find faster than typing. Plus, it's fun to talk to my Research Goblin.

Bouncy travelators

They used to be rubber bouncy travelators at Heathrow and they were really fun, have all been replaced by metal ones now and if so, when did that happen?

I was traveling through Heathrow airport pondering what had happened to the fun bouncy rubber travelators.

Here's what I got. Research Goblin narrowed it down to some time between 2014-2018 but, more importantly, found me this delightful 2024 article by Peter Hartlaub in the San Francisco Chronicle with a history of the SFO bouncy walkways, now also sadly retired.

Identify this building

Identify this building in reading

This is a photo I snapped out of the window on the train. It thought for 1m4s and correctly identified it as The Blade.

Starbucks UK cake pops

Starbucks in the UK don't sell cake pops! Do a deep investigative dive

The Starbucks in Exeter railway station didn't have cake pops, and the lady I asked didn't know what they were.

Here's the result. It turns out Starbucks did launch cake pops in the UK in September 2023 but they aren't available at all outlets, in particular the licensed travel locations such as the one at Exeter St Davids station.

I particularly enjoyed how it established definitive proof by consulting the nutrition and allergen guide PDF on starbucks.co.uk, which does indeed list both the Birthday Cake Pop (my favourite) and the Cookies and Cream one (apparently discontinued in the USA, at least according to r/starbucks).

Britannica to seed Wikipedia

Someone on hacker News said:

> I was looking at another thread about how Wikipedia was the best thing on the internet. But they only got the head start by taking copy of Encyclopedia Britannica and everything else

Find what they meant by that

The result. It turns out Wikipedia did seed itself with content from the out-of-copyright 1911 Encyclopædia Britannica... but that project took place in 2006, five years after Wikipedia first launched in 2001.

I asked:

What is the single best article I can link somebody to that explains the 1911 Britannica thing

And it pointed me to Wikipedia:WikiProject Encyclopaedia Britannica which includes a detailed explanation and a link to the 13,000 pages still tagged with the template from that project. I posted what I found in a comment.

Notably (for me anyway) I didn't feel the need to disclose my use of ChatGPT in finding that information - at this point that feels a little like disclosing that I ran a Google search.

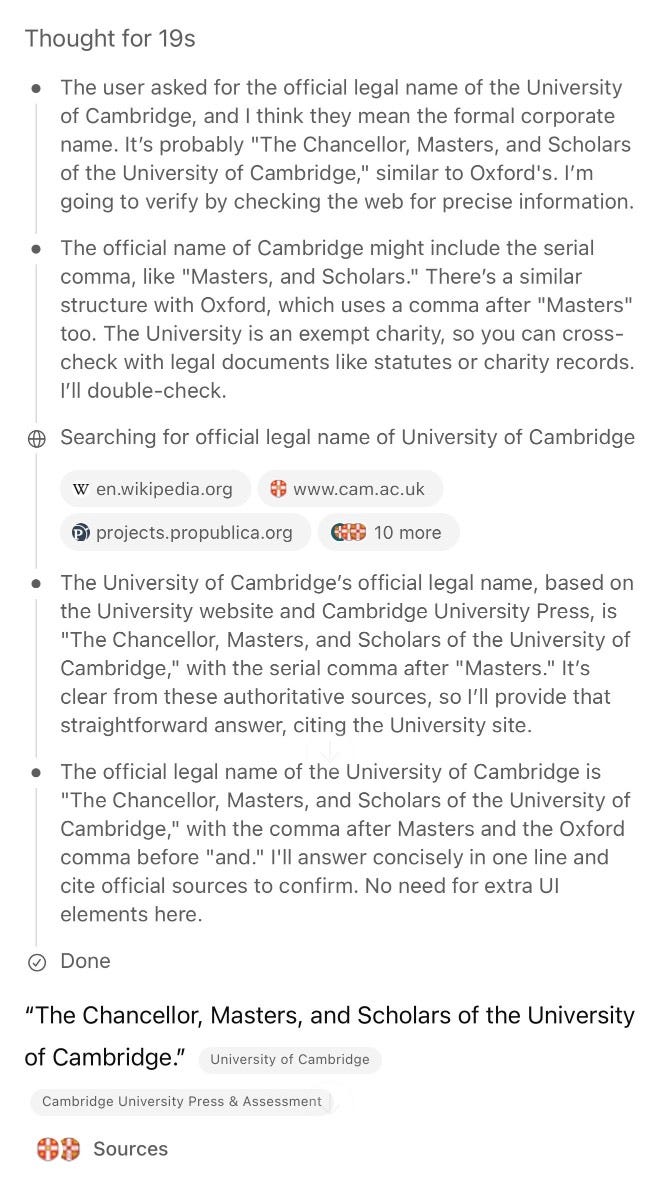

Official name for the University of Cambridge

What is the official legal name of the university of Cambridge?

Here's the context for that one. It thought for 19 seconds - the thinking trace reveals it knew the answer but wanted to confirm it. It answered:

“The Chancellor, Masters, and Scholars of the University of Cambridge.” University of Cambridge, Cambridge University Press & Assessment

That first link gave me the citation I needed in order to be sure this was right.

Since this is my shortest example, here's a screenshot of the expanded "Thought for 19s" panel. I always expand the thoughts - seeing how it pulled together its answer is crucial for evaluating if the answer is likely to be useful or not.

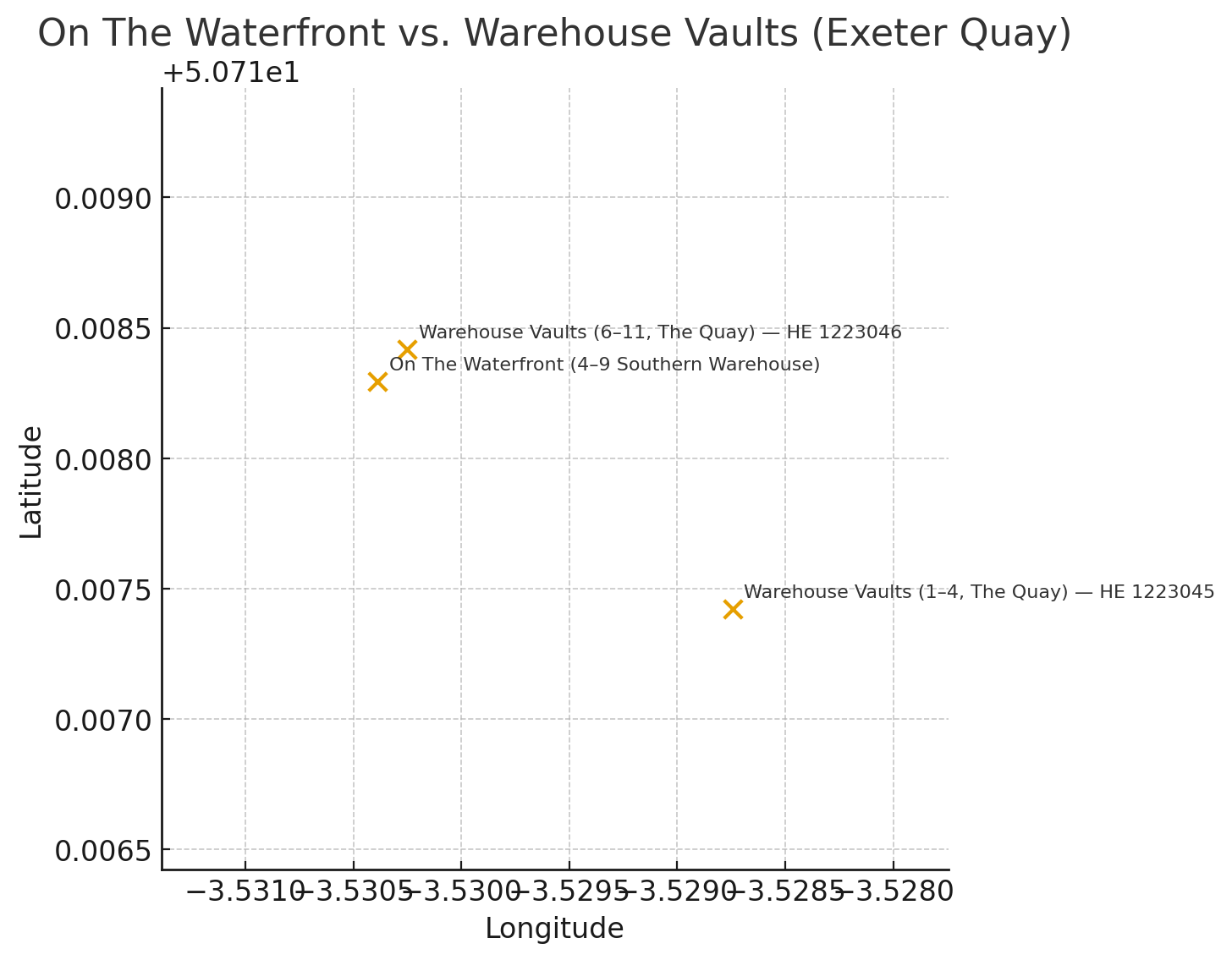

History of the caverns in Exeter quay

Research On the waterfront restaurant in Exeter, is it dug into the cliffs somehow? History of the building, who built it, why and how

We were out to dinner here and noticed that the interior of the restaurant appeared to be a space dug into the cliff, which piqued my interest.

This was the ChatGPT session that inspired the Research Goblin nickname. It just kept on digging!

The first reply took 2m40s and confirmed that yes, these quay buildings were carved into the red sandstone cliff in the 1820s-1830s.

ChatGPT with GPT-5 really likes to suggest additional steps it can take. In this case:

If you’d like, I can dig up the exact Historic England entry that covers the “Southern Warehouse” address and overlay it on a map of the vaults.

I often say "yes" purely out of curiosity to see what it will do next, and the offer to "overlay it on a map" was irresistible, like how would it even do that?

It did a ton of extra searches, found latitude and longitude coordinates for the restaurant (from Wikimedia Commons) and the warehouse buildings (from National Heritage List for England via Wikipedia), showed me that data in a table and then used Python to render this image:

Yeah, so that one is a bit of a miss!

But... the underlying research chain, as always, proved fascinating. I noticed that at one point it had searched for "Exeter Quay vaults map PDF", which inspired my next question:

Did you find any PDF diagrams, showing the tunnels into the cliff

2m20s later it had found a planning document and a letting brochure, both PDFs, but noted that neither of them had the diagram I wanted. Evidently it actually "reads" the PDFs it finds as part of its activities, since it appears to have knowledge of the images contained within them as well as the text.

It concluded with this intriguing note:

There’s strong evidence that a detailed plan/section does exist in the Exeter Archaeology report often cited by planners—“Archaeological, Historical and Conservation Study of the Exeter Canal Basin” (Exeter Archaeology Report 00.18, 2000)—but it isn’t online; it’s just cited in planning documents. You’d likely need to request it via the Devon Heritage Centre/Historic Environment Record.

If you want, I can keep hunting specialist archives for a digitized copy or a different report that includes the vault layouts.

Obviously I said "Yes do that" and 2m24s later it chucked out a whole host of extra links, an apology that it still hadn't found a detailed plan diagram and, under the section heading "What I recommend next (actionable + exact ask)":

If you’re happy, I can draft an email you can paste to the right archivists. [...]

Subject: Exeter Quayside cliff vaults – request for plan/section drawings

Hello,

I’m researching the cliff-cut vaults behind The Quay (On The Waterfront / Kings Wharf). Could you supply scans or copies of any plans/sections/diagrams of these vaults—especially those in Exeter Archaeology Report 00.18 (2000) (Archaeological, Historical and Conservation Study of the Exeter Canal Basin) and any EMAFU/Exeter Archaeology drawings for “The Quay” showing the vault/tunnel layouts cut into the red sandstone cliff?

For reference: NHLE list entries 1223045 (1–4 Warehouse Vaults), 1223046 (6–11 Warehouse Vaults), and 1223114 (14–25 Vaults).

Many thanks!

It's a good thing my Research Goblin doesn't have the ability to send email (which we already know is very risky business) or at this point I wouldn't be surprised if it started autonomously bothering archivists on my behalf!

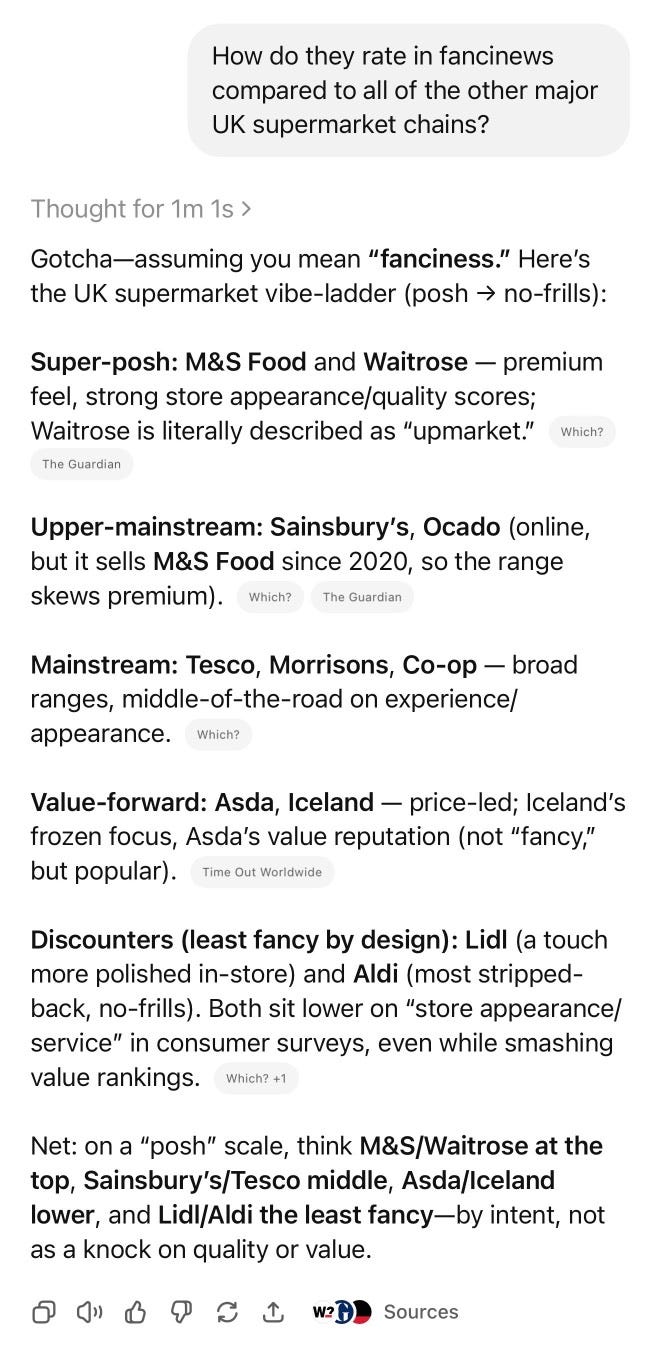

Aldi vs Lidl

In UK supermarket terms compare lidl and aldi

I saw an Aldi and a Lidl next to each other in Exeter and was curious as to how they compare. In this case my Research Goblin went way deeper than I had intended, thinking for 1m25s about market share and price surveys and loyalty schemes and answering with ten paragraphs of detailed analysis.

I replied:

Yeah, I just wanted the basic vibes like is one fancier than the other?

It thought for 6 seconds, didn't run any additional searches and replied:

Short version: neither is “fancy” — both are no-frills discounters — but Lidl often feels a touch more polished, while Aldi feels a touch more bare-bones. [...]

Since I was now enjoying having my Goblin express judgement over British supermarket chains I said:

How do they rate in fancinews compared to all of the other major UK supermarket chains?

(That was a typo for "fanciness" which it figured out.)

It thought for another 1m1s, ran 7 searches, consulted 27 URLs and gave me back a ranking that looked about right to me.

AI labs scanning books for training data

Anthropic bought lots of physical books and cut them up and scan them for training data. Do any other AI labs do the same thing?

Relevant to today's big story. Research Goblin was unable to find any news stories or other evidence that any labs other than Anthropic are engaged in large scale book scanning for training data. That's not to say it isn't happening, but it's happening very quietly if that's the case.

GPT-5 for search feels competent

The word that best describes how I feel about GPT-5 search is that it feels competent.

I've thrown all sorts of things at it over the last few weeks and it rarely disappoints me. It almost always does better than if I were to dedicate the same amount of time to manually searching myself, mainly because it's much faster at running searches and evaluating the results than I am.

I particularly love that it works so well on mobile. I used to reserve my deeper research sessions to a laptop where I could open up dozens of tabs. I'll still do that for higher stakes activities but I'm finding the scope of curiosity satisfaction I can perform on the go with just my phone has increased quite dramatically.

I've mostly stopped using OpenAI's Deep Research feature, because ChatGPT search now gives me the results I'm interested in far more quickly for most queries.

As a developer who builds software on LLMs I see ChatGPT search as the gold standard for what can be achieved using tool calling combined with chain-of-thought. Techniques like RAG are massively more effective if you can reframe them as several levels of tool calling with a carefully selected set of powerful search tools.

The way that search tool integrates with reasoning is key, because it allows GPT-5 to execute a search, reason about the results and then execute follow-up searches - all as part of that initial "thinking" process.

Anthropic call this ability interleaved thinking and it's also supported by the OpenAI Responses API.
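The search-then-reason loop described above can be sketched in a few lines. This is a toy illustration only: `fake_model` and `fake_search` are hypothetical stubs with canned behaviour, not the OpenAI or Anthropic APIs, and a real model would decide for itself when to search again.

```python
# Everything here is a stand-in: fake_model and fake_search are hypothetical
# stubs, not real API calls.

def fake_search(query):
    """Stub search tool that returns canned results for two known queries."""
    corpus = {
        "exeter quay vaults": "Vaults cut into the sandstone cliff; see heritage listing.",
        "exeter quay vaults heritage listing": "Listing describes warehouse vaults on The Quay.",
    }
    return corpus.get(query.lower(), "No results.")


def fake_model(question, observations):
    """Stub reasoning step: look at results so far, then search again or answer."""
    if not observations:
        return {"action": "search", "query": "Exeter Quay vaults"}
    if len(observations) == 1 and "heritage listing" in observations[0]:
        # The first result hinted at a follow-up worth chasing.
        return {"action": "search", "query": "Exeter Quay vaults heritage listing"}
    return {"action": "answer", "text": " ".join(observations)}


def research(question, max_steps=5):
    """Alternate between reasoning and tool calls until the model answers."""
    observations = []
    for _ in range(max_steps):
        step = fake_model(question, observations)
        if step["action"] == "answer":
            return step["text"], len(observations)
        observations.append(fake_search(step["query"]))
    return "Gave up after too many searches.", len(observations)


answer, searches_run = research("Are the Exeter quay buildings dug into the cliff?")
print(searches_run, answer)
```

The point of the shape is that the follow-up search only exists because the first result was read before deciding what to do next.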

Tips for using search in ChatGPT

As with all things AI, GPT-5 search rewards intuition gathered through experience. Any time a curious thought pops into my head I try to catch it and throw it at my Research Goblin. If it's something I'm certain it won't be able to handle then even better! I can learn from watching it fail.

I've been trying out hints like "go deep" which seem to trigger a more thorough research job. I enjoy throwing those at shallow and unimportant questions like the UK Starbucks cake pops one just to see what happens!

You can throw questions at it which have a single, unambiguous answer - but I think questions which are broader and don't have a "correct" answer can be a lot more fun. The UK supermarket rankings above are a great example of that.

Since I love a questionable analogy for LLMs, Research Goblin is... well, it's a goblin. It's very industrious, not quite human and not entirely trustworthy. You have to be able to outwit it if you want to keep it gainfully employed.

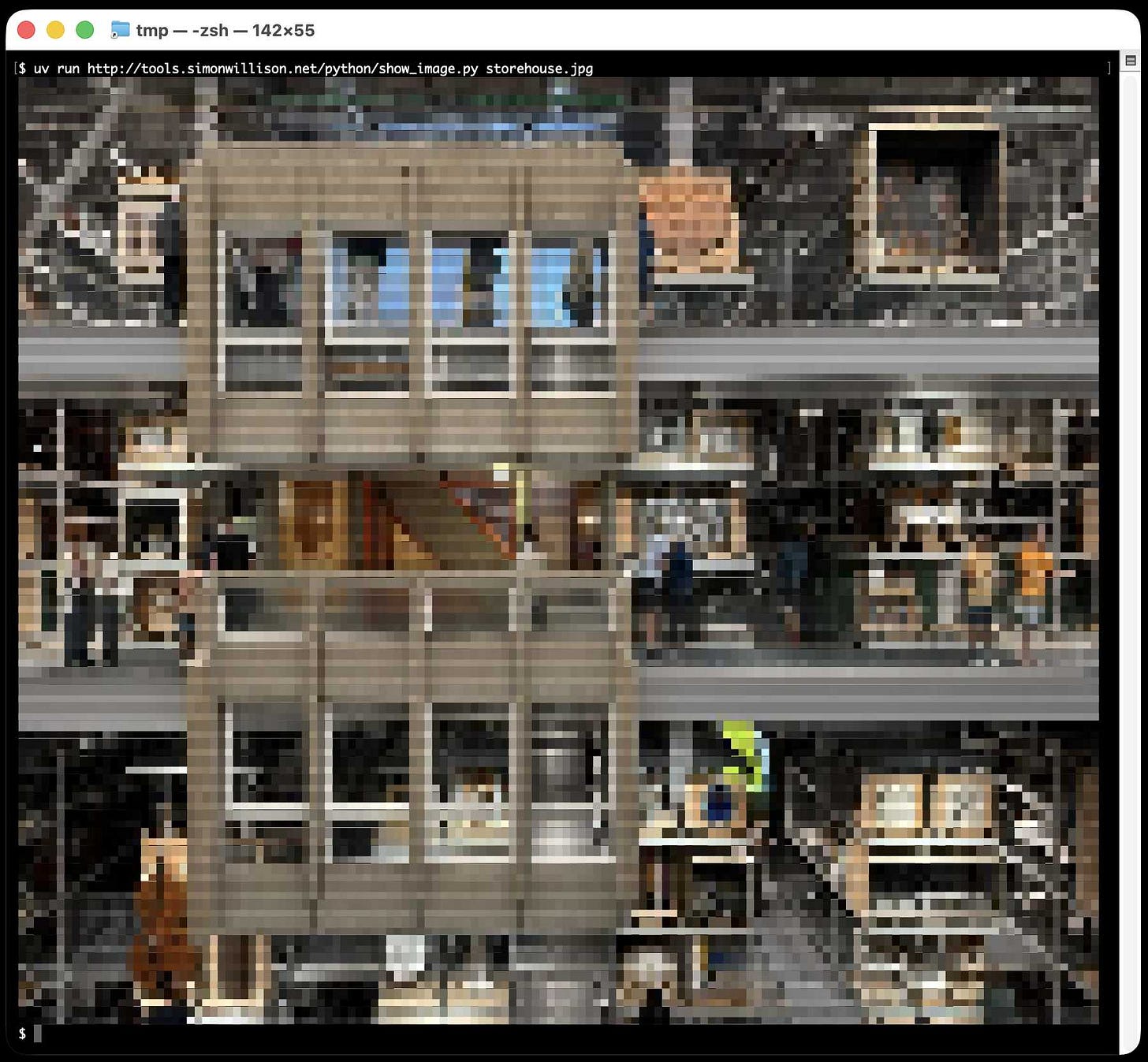

Link 2025-09-02 Rich Pixels:

Neat Python library by Darren Burns adding pixel image support to the Rich terminal library, using tricks to render an image using full or half-height colored blocks.

Here's the key trick - it renders Unicode ▄ (U+2584, "lower half block") characters after setting a foreground and background color for the two pixels it needs to display.
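A minimal sketch of that half-block trick, assuming a terminal that understands 24-bit ANSI color escapes. This is not the Rich Pixels source code: `render_half_blocks` is a hypothetical helper that writes the escape codes by hand rather than going through Rich.

```python
# Hypothetical helper names; this is not how Rich Pixels is implemented internally.
LOWER_HALF_BLOCK = "\u2584"  # the lower half block character
RESET = "\x1b[0m"


def render_half_blocks(pixels):
    """Render rows of (r, g, b) tuples, packing two pixel rows into each terminal line."""
    lines = []
    for y in range(0, len(pixels), 2):
        top_row = pixels[y]
        # Pad an odd-height image with a black row (an arbitrary choice).
        if y + 1 < len(pixels):
            bottom_row = pixels[y + 1]
        else:
            bottom_row = [(0, 0, 0)] * len(top_row)
        cells = []
        for (tr, tg, tb), (br, bg, bb) in zip(top_row, bottom_row):
            # Background color fills the cell, so it shows as the top pixel.
            # Foreground color paints the half block glyph, i.e. the bottom pixel.
            cells.append(
                f"\x1b[48;2;{tr};{tg};{tb}m\x1b[38;2;{br};{bg};{bb}m{LOWER_HALF_BLOCK}"
            )
        lines.append("".join(cells) + RESET)
    return "\n".join(lines)


image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 0)],
]
print(render_half_blocks(image))
```

Each terminal cell carries two vertically stacked pixels, which is why a 2x2 image prints as a single line of two characters.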

I got GPT-5 to vibe code up a show_image.py terminal command which resizes the provided image to fit the width and height of the current terminal and displays it using Rich Pixels. That script is here, you can run it with uv like this:

uv run https://tools.simonwillison.net/python/show_image.py \
  image.jpg

Here's what I got when I ran it against my V&A East Storehouse photo from this post:

Link 2025-09-02 Making XML human-readable without XSLT:

In response to the recent discourse about XSLT support in browsers, Jake Archibald shares a new-to-me alternative trick for making an XML document readable in a browser: adding the following element near the top of the XML:

<script
  xmlns="http://www.w3.org/1999/xhtml"
  src="script.js" defer="" />

That script.js will then be executed by the browser, and can swap out the XML with HTML by creating new elements using the correct namespace:

const htmlEl = document.createElementNS(
  'http://www.w3.org/1999/xhtml',
  'html',
);
document.documentElement.replaceWith(htmlEl);
// Now populate the new DOM

Link 2025-09-03 gov.uscourts.dcd.223205.1436.0_1.pdf:

Here's the 230 page PDF ruling on the 2023 United States v. Google LLC federal antitrust case - the case that could have resulted in Google selling off Chrome and cutting most of Mozilla's funding.

I made it through the first dozen pages - it's actually quite readable.

It opens with a clear summary of the case so far, bold highlights mine:

Last year, this court ruled that Defendant Google LLC had violated Section 2 of the Sherman Act: “Google is a monopolist, and it has acted as one to maintain its monopoly.” The court found that, for more than a decade, Google had entered into distribution agreements with browser developers, original equipment manufacturers, and wireless carriers to be the out-of-the box, default general search engine (“GSE”) at key search access points. These access points were the most efficient channels for distributing a GSE, and Google paid billions to lock them up. The agreements harmed competition. They prevented rivals from accumulating the queries and associated data, or scale, to effectively compete and discouraged investment and entry into the market. And they enabled Google to earn monopoly profits from its search text ads, to amass an unparalleled volume of scale to improve its search product, and to remain the default GSE without fear of being displaced. Taken together, these agreements effectively “froze” the search ecosystem, resulting in markets in which Google has “no true competitor.”

There's an interesting generative AI twist: when the case was first argued in 2023 generative AI wasn't an influential issue, but more recently Google seem to be arguing that it is an existential threat that they need to be able to take on without additional hindrance:

The emergence of GenAI changed the course of this case. No witness at the liability trial testified that GenAI products posed a near-term threat to GSEs. The very first witness at the remedies hearing, by contrast, placed GenAI front and center as a nascent competitive threat. These remedies proceedings thus have been as much about promoting competition among GSEs as ensuring that Google’s dominance in search does not carry over into the GenAI space. Many of Plaintiffs’ proposed remedies are crafted with that latter objective in mind.

I liked this note about the court's challenges in issuing effective remedies:

Notwithstanding this power, courts must approach the task of crafting remedies with a healthy dose of humility. This court has done so. It has no expertise in the business of GSEs, the buying and selling of search text ads, or the engineering of GenAI technologies. And, unlike the typical case where the court’s job is to resolve a dispute based on historic facts, here the court is asked to gaze into a crystal ball and look to the future. Not exactly a judge’s forte.

On to the remedies. These ones looked particularly important to me:

Google will be barred from entering or maintaining any exclusive contract relating to the distribution of Google Search, Chrome, Google Assistant, and the Gemini app. [...]

Google will not be required to divest Chrome; nor will the court include a contingent divestiture of the Android operating system in the final judgment. Plaintiffs overreached in seeking forced divesture of these key assets, which Google did not use to effect any illegal restraints. [...]

I guess Perplexity won't be buying Chrome then!

Google will not be barred from making payments or offering other consideration to distribution partners for preloading or placement of Google Search, Chrome, or its GenAI products. Cutting off payments from Google almost certainly will impose substantial —in some cases, crippling— downstream harms to distribution partners, related markets, and consumers, which counsels against a broad payment ban.

That looks like a huge sigh of relief for Mozilla, who were at risk of losing a sizable portion of their income if Google's search distribution revenue were to be cut off.

Link 2025-09-04 Beyond Vibe Coding:

Back in May I wrote Two publishers and three authors fail to understand what “vibe coding” means where I called out the authors of two forthcoming books on "vibe coding" for abusing that term to refer to all forms of AI-assisted development, when Not all AI-assisted programming is vibe coding based on the original Karpathy definition.

I'll be honest: I don't feel great about that post. I made an example of those two books to push my own agenda of encouraging "vibe coding" to avoid semantic diffusion but it felt (and feels) a bit mean.

... but maybe it had an effect? I recently spotted that Addy Osmani's book "Vibe Coding: The Future of Programming" has a new title, it's now called "Beyond Vibe Coding: From Coder to AI-Era Developer".

This title is so much better. Setting aside my earlier opinions, this positioning as a book to help people go beyond vibe coding and use LLMs as part of a professional engineering practice is a really great hook!

From Addy's new description of the book:

Vibe coding was never meant to describe all AI-assisted coding. It's a specific approach where you don't read the AI's code before running it. There's much more to consider beyond the prototype for production systems. [...]

AI-assisted engineering is a more structured approach that combines the creativity of vibe coding with the rigor of traditional engineering practices. It involves specs, rigor and emphasizes collaboration between human developers and AI tools, ensuring that the final product is not only functional but also maintainable and secure.

Amazon lists it as releasing on September 23rd. I'm looking forward to it.

Note 2025-09-04

Any time I share my collection of tools built using vibe coding and AI-assisted development (now at 124, here's the definitive list) someone will inevitably complain that they're mostly trivial.

A lot of them are! Here's a list of some that I think are genuinely useful and worth highlighting:

OCR PDFs and images directly in your browser. This is the tool that started the collection, and I still use it on a regular basis. You can open any PDF in it (even PDFs that are just scanned images with no embedded text) and it will extract out the text so you can copy-and-paste it. It uses PDF.js and Tesseract.js to do that entirely in the browser. I wrote about how I originally built that here.

Annotated Presentation Creator - this one is so useful. I use it to turn talks that I've given into full annotated presentations, where each slide is accompanied by detailed notes. I have 29 blog entries like that now and most of them were written with the help of this tool. Here's how I built that, plus follow-up prompts I used to improve it.

Image resize, crop, and quality comparison - I use this for every single image I post to my blog. It lets me drag (or paste) an image onto the page and then shows me a comparison of different sizes and quality settings, each of which I can download and then upload to my S3 bucket. I recently added a slightly janky but mobile-accessible cropping tool as well. Prompts.

Social Media Card Cropper - this is an even more useful image tool. Bluesky, Twitter etc all benefit from a 2x1 aspect ratio "card" image. I built this custom tool for creating those - you can paste in an image and crop and zoom it to the right dimensions. I use this all the time. Prompts.

SVG to JPEG/PNG - every time I publish an SVG of a pelican riding a bicycle I use this tool to turn that SVG into a JPEG or PNG. Prompts.

Encrypt / decrypt message - I often run workshops where I want to distribute API keys to the workshop participants. This tool lets me encrypt a message with a passphrase, then share the resulting URL to the encrypted message and tell people (with a note on a slide) how to decrypt it. Prompt.

Jina Reader - enter a URL, get back a Markdown version of the page. It's a thin wrapper over the Jina Reader API, but it's useful because it adds a "copy to clipboard" button which means it's one of the fastest ways to turn a webpage into data on a clipboard on my mobile phone. I use this several times a week. Prompts.

llm-prices.com - a pricing comparison and token pricing calculator for various hosted LLMs. This one started out as a tool but graduated to its own domain name. Here's the prompting development history.

Open Sauce 2025 - an unofficial schedule for the Open Sauce conference, complete with option to export to ICS plus a search tool and now-and-next. I built this entirely on my phone using OpenAI Codex, including scraping the official schedule - full details here.

Hacker News Multi-Term Histogram - compare search terms on Hacker News to see how their relative popularity changed over time. Prompts.

Passkey experiment - a UI for trying out the Passkey / WebAuthn APIs that are built into browsers these days. Prompts.

Incomplete JSON Pretty Printer - do you ever find yourself staring at a screen full of JSON that isn't completely valid because it got truncated? This tool will pretty-print it anyway. Prompts.

Bluesky WebSocket Feed Monitor - I found out Bluesky has a Firehose API that can be accessed directly from the browser, so I vibe-coded up this tool to try it out. Prompts.

In putting this list together I realized I wanted to be able to link to the prompts for each tool... but those were hidden inside a collapsed <details><summary> element for each one. So I fired up OpenAI Codex and prompted:

Update the script that builds the colophon.html page such that the generated page has a tiny bit of extra JavaScript - when the page is loaded as e.g. https://tools.simonwillison.net/colophon#jina-reader.html it should notice the #jina-reader.html fragment identifier and ensure that the Development history details/summary for that particular tool is expanded when the page loads.

It authored this PR for me which fixed the problem.

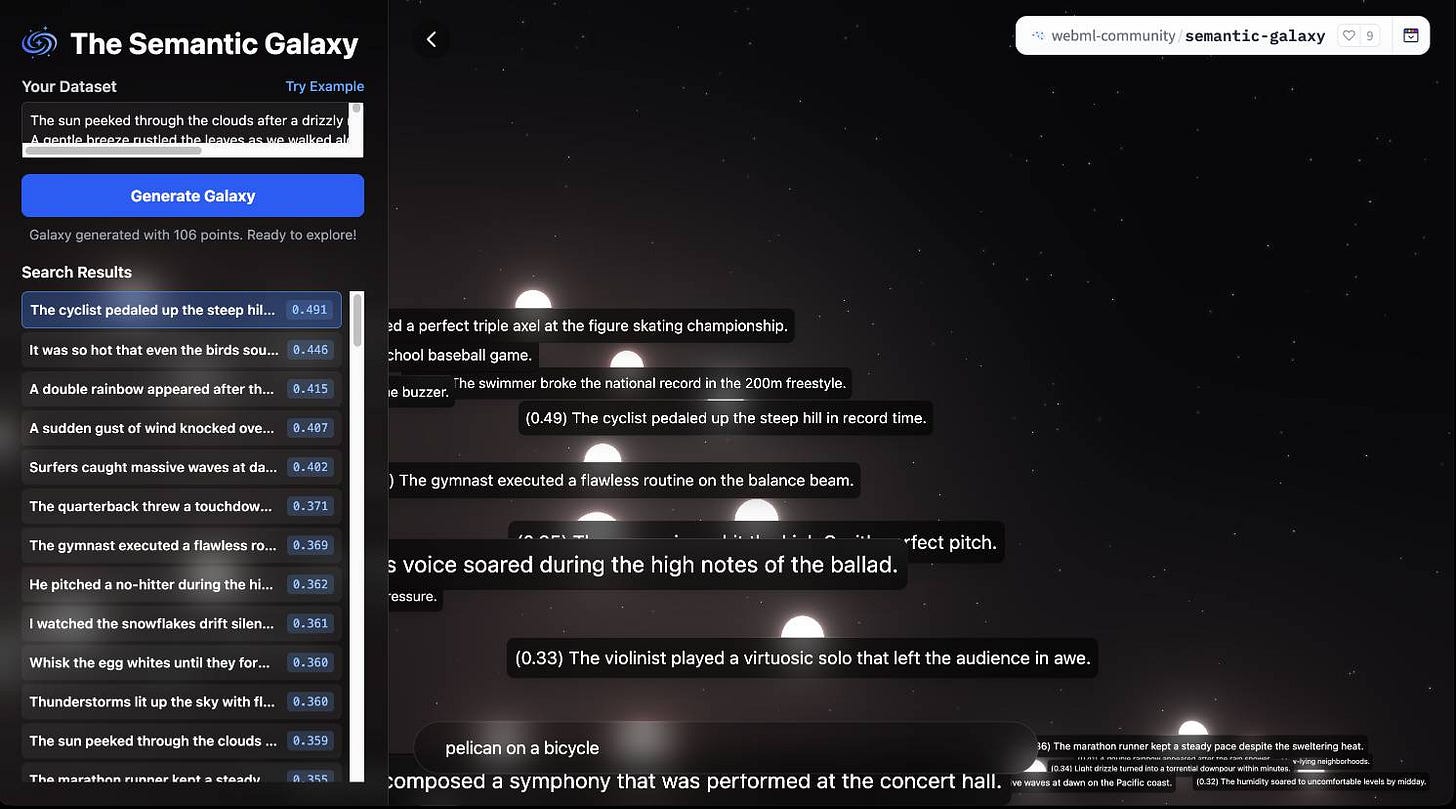

Link 2025-09-04 Introducing EmbeddingGemma:

Brand new open weights (under the slightly janky Gemma license) 308M parameter embedding model from Google:

Based on the Gemma 3 architecture, EmbeddingGemma is trained on 100+ languages and is small enough to run on less than 200MB of RAM with quantization.

It's available via sentence-transformers, llama.cpp, MLX, Ollama, LMStudio and more.

As usual for these smaller models there's a Transformers.js demo (via) that runs directly in the browser (in Chrome variants) - Semantic Galaxy loads a ~400MB model and then lets you run embeddings against hundreds of text sentences, map them in a 2D space and run similarity searches to zoom to points within that space.

quote 2025-09-05

After struggling for years trying to figure out why people think [Cloudflare] Durable Objects are complicated, I'm increasingly convinced that it's just that they sound complicated.

Feels like we can solve 90% of it by renaming DurableObject to StatefulWorker?

It's just a worker that has state. And because it has state, it also has to have a name, so that you can route to the specific worker that has the state you care about. There may be a sqlite database attached, there may be a container attached. Those are just part of the state.

Link 2025-09-06 Anthropic to pay $1.5 billion to authors in landmark AI settlement:

I wrote about the details of this case when it was found that Anthropic's training on book content was fair use, but they needed to have purchased individual copies of the books first... and they had seeded their collection with pirated ebooks from Books3, PiLiMi and LibGen.

The remaining open question from that case was the penalty for pirating those 500,000 books. That question has now been resolved in a settlement:

Anthropic has reached an agreement to pay “at least” a staggering $1.5 billion, plus interest, to authors to settle its class-action lawsuit. The amount breaks down to smaller payouts expected to be approximately $3,000 per book or work.

It's wild to me that a $1.5 billion settlement can feel like a win for Anthropic, but given that it's undisputed that they downloaded pirated books (as did Meta and likely many other research teams) the maximum allowed penalty was $150,000 per book, so $3,000 per book is actually a significant discount.
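The arithmetic behind that discount, as a rough sketch using only the figures quoted above. It ignores the "at least" and "plus interest" qualifiers, and it assumes the worst case of the maximum penalty applying to every book, which is a simplification rather than a prediction of what a court would have awarded.

```python
settlement_total = 1_500_000_000   # "at least" $1.5 billion, per the quoted article
payout_per_book = 3_000            # approximate per-work payout from the same quote
statutory_max_per_book = 150_000   # maximum allowed penalty per book cited above

# How many books does the settlement imply?
implied_books = settlement_total // payout_per_book

# Worst-case exposure if every book drew the maximum penalty (simplifying assumption).
max_exposure = implied_books * statutory_max_per_book

# How far below the maximum is the per-book payout?
discount_factor = statutory_max_per_book // payout_per_book

print(f"{implied_books:,} books")
print(f"${max_exposure:,} at the maximum")
print(f"{discount_factor}x below the maximum")
```

The implied count lines up with the 500,000 books mentioned earlier, and the worst case works out to $75 billion against the $1.5 billion actually agreed.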

As far as I can tell this case sets a precedent for Anthropic's more recent approach of buying millions of (mostly used) physical books and destructively scanning them for training as covered by "fair use". I'm not sure if other in-flight legal cases will find differently.

To be clear: it appears it is legal, at least in the USA, to buy a used copy of a physical book (used = the author gets nothing), chop the spine off, scan the pages, discard the paper copy and then train on the scanned content. The transformation from paper to scan is "fair use".

If this does hold it's going to be a great time to be a bulk retailer of used books!

Update: The official website for the class action lawsuit is www.anthropiccopyrightsettlement.com:

In the coming weeks, and if the court preliminarily approves the settlement, the website will provide to find a full and easily searchable listing of all works covered by the settlement.

In the meantime the Atlantic have a search engine to see if your work was included in LibGen, one of the pirated book sources involved in this case.

I had a look and it turns out the book I co-authored with 6 other people back in 2007 The Art & Science of JavaScript is in there, so maybe I'm due for 1/7th of one of those $3,000 settlements!

Update 2: Here's an interesting detail from the Washington Post story about the settlement:

Anthropic said in the settlement that the specific digital copies of books covered by the agreement were not used in the training of its commercially released AI models.

quote 2025-09-06

RDF has the same problems as the SQL schemas with information scattered. What fields mean requires documentation.

There - they have a name on a person. What name? Given? Legal? Chosen? Preferred for this use case?

You only have one ID for Apple eh? Companies are complex to model, do you mean Apple just as someone would talk about it? The legal structure of entities that underpins all major companies, what part of it is referred to?

I spent a long time building identifiers for universities and companies (which was taken for ROR later) and it was a nightmare to say what a university even was. What’s the name of Cambridge? It’s not “Cambridge University” or “The university of Cambridge” legally. But it also is the actual name as people use it. [It's The Chancellor, Masters, and Scholars of the University of Cambridge]

The university of Paris went from something like 13 institutes to maybe one to then a bunch more. Are companies locations at their headquarters? Which headquarters?

Someone will suggest modelling to solve this but here lies the biggest problem:

The correct modelling depends on the questions you want to answer.

IanCal, on Hacker News, discussing RDF

Link 2025-09-06 Kimi-K2-Instruct-0905:

New not-quite-MIT licensed model from Chinese Moonshot AI, a follow-up to the highly regarded Kimi-K2 model they released in July.

This one is an incremental improvement - I've seen it referred to online as "Kimi K-2.1". It scores a little higher on a bunch of popular coding benchmarks, reflecting Moonshot's claim that it "demonstrates significant improvements in performance on public benchmarks and real-world coding agent tasks".

More importantly the context window size has been increased from 128,000 to 256,000 tokens.

Like its predecessor this is a big model - 1 trillion parameters in a mixture-of-experts configuration with 384 experts, 32B activated parameters and 8 selected experts per token.
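To put those mixture-of-experts numbers in proportion, here is a quick back-of-the-envelope calculation using only the figures above. It says nothing about how the parameters are actually distributed inside the model.

```python
total_params = 1_000_000_000_000   # 1 trillion parameters
active_params = 32_000_000_000     # 32B activated parameters
num_experts = 384
experts_per_token = 8

# Share of the full model that does work for any single token.
active_param_share = active_params / total_params

# Share of the experts that get selected for any single token.
expert_share = experts_per_token / num_experts

print(f"{active_param_share:.1%} of parameters active per token")
print(f"{expert_share:.2%} of experts selected per token")
```

So roughly 3% of the model runs for each token, which is how a 1 trillion parameter model can still generate output quickly.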

I used Groq's playground tool to try "Generate an SVG of a pelican riding a bicycle" and got this result, at a very healthy 445 tokens/second taking just under 2 seconds total:

quote 2025-09-06

I am once again shocked at how much better image retrieval performance you can get if you embed highly opinionated summaries of an image, a summary that came out of a visual language model, than using CLIP embeddings themselves. If you tell the LLM that the summary is going to be embedded and used to do search downstream. I had one system go from 28% recall at 5 using CLIP to 75% recall at 5 using an LLM summary.
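For readers unfamiliar with the metric, here is one common way to compute "recall at 5", assuming each query has exactly one correct target image. That assumption and the toy image IDs are mine; the quote does not say which definition was used.

```python
def recall_at_k(rankings, targets, k=5):
    """Fraction of queries whose target appears in the top k ranked results."""
    hits = sum(
        1 for ranked, target in zip(rankings, targets) if target in ranked[:k]
    )
    return hits / len(targets)


# Made-up rankings for four queries, best match first.
rankings = [
    ["img7", "img2", "img9", "img1", "img4", "img3"],  # target at position 4: hit
    ["img5", "img8", "img6", "img0", "img2", "img1"],  # target at position 6: miss
    ["img3", "img1", "img2", "img4", "img5", "img9"],  # target at position 1: hit
    ["img9", "img8", "img7", "img6", "img5", "img4"],  # target absent: miss
]
targets = ["img1", "img1", "img3", "img0"]

print(recall_at_k(rankings, targets))
```

Under this definition, going from 28% to 75% means the right image lands in the top five results for three queries in four instead of roughly one in four.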

It appears to me that the days of a quiet archivist job are numbered. Of course, archivists can always vibe code their own apps for dealing with LLM inquiries.

“So I fired up OpenAI Codex and prompted”

I noticed that Claude posted the PR, did you mean Claude here or did you have Codex post somehow through Claude?