Could you train a ChatGPT-beating model for $85,000 and run it in a browser?

Some wild speculation, plus the latest from my blog

In this newsletter:

Could you train a ChatGPT-beating model for $85,000 and run it in a browser?

ChatGPT can’t access the internet, even though it really looks like it can

A conversation about prompt engineering with CBC Day 6

And a few notes on GPT-4

Could you train a ChatGPT-beating model for $85,000 and run it in a browser? - 2023-03-17

I think it's now possible to train a large language model with similar functionality to GPT-3 for $85,000. And I think we might soon be able to run the resulting model entirely in the browser, and give it capabilities that leapfrog it ahead of ChatGPT.

This is currently wild speculation on my part, but bear with me because I think this is worth exploring further.

Large language models with GPT-3-like capabilities cost millions of dollars to build, thanks to the cost of running the expensive GPU servers needed to train them. Whether you are renting or buying those machines, there are still enormous energy costs to cover.

Just one example of this: the BLOOM large language model was trained in France with the support of the French government. The cost was estimated at $2-5M and it took almost four months to train. The project also boasts about its low carbon footprint, because most of the power came from a nuclear reactor!

[ Fun fact: as of a few days ago you can now run the openly licensed BLOOM on your own laptop, using Nouamane Tazi's adapted copy of the llama.cpp code that made that possible for LLaMA ]

Recent developments have made me suspect that these costs could be made dramatically lower. I think a capable language model can now be trained from scratch for around $85,000.

It's all about that LLaMA

The LLaMA plus Alpaca combination is the key here.

I wrote about these two projects previously:

Large language models are having their Stable Diffusion moment discusses the significance of LLaMA

Stanford Alpaca, and the acceleration of on-device large language model development describes Alpaca

To recap: LLaMA by Meta Research provided a GPT-3 class model trained entirely on documented, publicly available sources, as opposed to OpenAI's continuing practice of not revealing the sources of their training data.

This makes the model training a whole lot more likely to be replicable by other teams.

The paper also describes some enormous efficiency improvements they made to the training process.

The LLaMA research was still extremely expensive though. From the paper:

... we estimate that we used 2048 A100-80GB for a period of approximately 5 months to develop our models

My friends at Replicate told me that a simple rule of thumb for A100 cloud costs is $1/hour.

2048 GPUs * 5 months * 30 days * 24 hours * $1/hour = $7,372,800

But... that $7M was the cost to both iterate on the model and to train all four sizes of LLaMA that they tried: 7B, 13B, 33B, and 65B.

Here's Table 15 from the paper, showing the cost of training each model.

This shows that the smallest model, LLaMA-7B, was trained on 82,432 hours of A100-80GB GPUs, consuming 36MWh and generating 14 tons of CO2.

(That's about 28 people flying from London to New York.)

Going by the $1/hour rule of thumb, this means that, provided you get everything right on your first run, you can train a LLaMA-7B scale model for around $82,432.
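To make that arithmetic easy to rerun with different assumptions, here's a small Python sketch. The flat $1/hour A100 rate is the Replicate rule of thumb above and the 30-day month matches the earlier calculation; the function names are purely illustrative.

```python
# Replicate's rule of thumb: roughly $1 per A100 GPU-hour in the cloud
DOLLARS_PER_GPU_HOUR = 1.0


def fleet_cost(gpus: int, months: float, days_per_month: int = 30) -> float:
    """Cost of keeping `gpus` GPUs busy around the clock for `months` months."""
    gpu_hours = gpus * months * days_per_month * 24
    return gpu_hours * DOLLARS_PER_GPU_HOUR


def run_cost(gpu_hours: float) -> float:
    """Cost of a single training run measured in total GPU-hours."""
    return gpu_hours * DOLLARS_PER_GPU_HOUR


# All of Meta's LLaMA work: 2,048 A100s for about 5 months
print(f"${fleet_cost(2048, 5):,.0f}")  # $7,372,800
# One LLaMA-7B training run: 82,432 GPU-hours from Table 15 of the paper
print(f"${run_cost(82_432):,.0f}")  # $82,432
```

Swap in a different hourly rate or GPU-hour count to see how the estimate moves; it is only ever as good as the rule of thumb it starts from.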

Upgrading to Alpaca

You can run LLaMA 7B on your own laptop (or even on a phone), but you may find it hard to get good results out of it. That's because it hasn't been instruction tuned, so it's not great at answering the kind of prompts that you might send to ChatGPT or GPT-3 or 4.

Alpaca is the project from Stanford that fixes that. They fine-tuned LLaMA on 52,000 instructions (of somewhat dubious origin) and claim to have gotten ChatGPT-like performance as a result... from that smallest 7B LLaMA model!
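To make "instruction tuning" a little more concrete, here's a minimal Python sketch of how a single instruction record might be turned into a prompt/response training pair. The templates are modeled on the ones in the Stanford Alpaca repository, but treat their exact wording as an assumption; `to_training_pair` and the example record are hypothetical.

```python
# Prompt templates modeled on those published in the Stanford Alpaca repository.
# The exact wording here is an assumption: check the repository before reusing it.
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:"
)


def to_training_pair(record: dict[str, str]) -> tuple[str, str]:
    """Split one instruction record into (prompt, target response).

    Fine-tuning teaches the model to produce `target` when shown `prompt`.
    """
    if record.get("input"):
        prompt = PROMPT_WITH_INPUT.format(
            instruction=record["instruction"], input=record["input"]
        )
    else:
        prompt = PROMPT_NO_INPUT.format(instruction=record["instruction"])
    return prompt, record["output"]


# A hypothetical record in the instruction/input/output shape
record = {
    "instruction": "Summarize the following text in one sentence.",
    "input": "LLaMA is a family of language models released by Meta.",
    "output": "Meta released a family of language models called LLaMA.",
}
prompt, target = to_training_pair(record)
print(prompt)
print(target)
```

Repeat that for tens of thousands of records and you have the raw material for an Alpaca-style fine-tuning run.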

You can try out their demo (update: no you can't, "Our live demo is suspended until further notice") and see for yourself that it really does capture at least some of that ChatGPT magic.

The best bit? The Alpaca fine-tuning can be done for less than $100. The Replicate team have repeated the training process and published a tutorial about how they did it.

Other teams have also been able to replicate the Alpaca fine-tuning process, for example antimatter15/alpaca.cpp on GitHub.

We are still within our $85,000 budget! And Alpaca - or an Alpaca-like model using different fine tuning data - is the ChatGPT on your own device model that we've all been hoping for.

Could we run it in a browser?

Alpaca is effectively the same size as LLaMA 7B - around 3.9GB (after 4-bit quantization à la llama.cpp). And LLaMA 7B has already been shown running on a whole bunch of different personal devices: laptops, Raspberry Pis (very slowly) and even a Pixel 5 phone at a decent speed!
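Where does a number like 3.9GB come from? Here's a rough Python estimate. It assumes LLaMA 7B has about 6.7 billion parameters and that the 4-bit weights are packed in blocks of 32, each block carrying one 4-byte scale factor. That block layout is an assumption loosely modeled on llama.cpp's early 4-bit format, not a description of any current file format, and the estimate ignores vocabulary and other metadata.

```python
GIB = 1024 ** 3  # bytes in a gibibyte


def quantized_size_bytes(n_params: float, bits: int = 4, block_size: int = 32,
                         scale_bytes: int = 4) -> float:
    """Estimate storage for weights packed into fixed-size quantized blocks.

    Each block holds `block_size` weights at `bits` bits apiece plus one
    scale factor of `scale_bytes` bytes (an assumed layout, not a spec).
    """
    bytes_per_block = block_size * bits / 8 + scale_bytes
    return n_params / block_size * bytes_per_block


n_params = 6.7e9  # LLaMA "7B" has roughly 6.7 billion parameters

print(f"16-bit weights: {n_params * 2 / GIB:.1f} GiB")  # 12.5 GiB
print(f"4-bit blocks:   {quantized_size_bytes(n_params) / GIB:.1f} GiB")  # 3.9 GiB
```

Under this assumed layout the per-block scale adds roughly one extra bit per weight, which is why the result lands above a naive "6.7 billion × 4 bits" figure.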

The next frontier: running it in the browser.

I saw two tech demos yesterday that made me think this may be possible in the near future.

The first is Transformers.js. This is a WebAssembly port of the Hugging Face Transformers library of models - previously only available for server-side Python.

It's worth spending some time with their demos, which include some smaller language models and some very impressive image analysis models too.

The second is Web Stable Diffusion. This team managed to get the Stable Diffusion generative image model running entirely in the browser as well!

Web Stable Diffusion uses WebGPU, a still emerging standard that's currently only working in Chrome Canary. But it does work! It rendered me an image of two raccoons eating a pie in the forest in 38 seconds.

The Stable Diffusion model this loads into the browser is around 1.9GB.

LLaMA/Alpaca at 4-bit quantization is 3.9GB.

The sizes of these two models are similar enough that I would not be at all surprised to see an Alpaca-like model running in the browser in the not-too-distant future. I wouldn't be surprised if someone is working on that right now.

Now give it extra abilities with ReAct

A model running in your browser that behaved like a less capable version of ChatGPT would be pretty impressive. But what if it could be MORE capable than ChatGPT?

The ReAct prompt pattern is a simple, proven way of expanding a language model's abilities by giving it access to extra tools.

Matt Webb explains the significance of the pattern in The surprising ease and effectiveness of AI in a loop.

I got it working with a few dozen lines of Python myself, which I described in A simple Python implementation of the ReAct pattern for LLMs.

Here's the short version: you tell the model that it must think out loud and now has access to tools. It can then work through a question like this:

Question: Population of Paris, squared?

Thought: I should look up the population of paris and then multiply it

Action: search_wikipedia: Paris

Then it stops. Your code harness for the model reads that last line, sees the action and goes and executes an API call against Wikipedia. It continues the dialog with the model like this:

Observation: <truncated content from the Wikipedia page, including the 2,248,780 population figure>

The model continues:

Thought: Paris population is 2,248,780 I should square that

Action: calculator: 2248780 ** 2

Control is handed back to the harness, which passes that to a calculator and returns:

Observation: 5057011488400

The model then provides the answer:

Answer: The population of Paris squared is 5,057,011,488,400

Adding new actions to this system is trivial: each one can be a few lines of code.
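To show how little code each action needs, here's a hypothetical minimal harness in Python, written for illustration rather than taken from that earlier post. The `llm` callable, the `fake_wikipedia` stub and the `Action:` regular expression are all assumptions, and the calculator walks Python's `ast` tree to evaluate plain arithmetic instead of calling `eval()` on model output.

```python
import ast
import operator
import re
from typing import Callable

# Matches model output lines such as "Action: calculator: 2248780 ** 2"
ACTION_RE = re.compile(r"^Action: (\w+): (.*)$")

# Arithmetic operators the calculator tool is allowed to evaluate
OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def evaluate(node: ast.AST):
    """Recursively evaluate a parsed arithmetic expression; reject anything else."""
    if isinstance(node, ast.Expression):
        return evaluate(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in OPERATORS:
        return OPERATORS[type(node.op)](evaluate(node.left), evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in OPERATORS:
        return OPERATORS[type(node.op)](evaluate(node.operand))
    raise ValueError("Only plain arithmetic is supported")


def calculator(expression: str) -> str:
    return str(evaluate(ast.parse(expression, mode="eval")))


def fake_wikipedia(query: str) -> str:
    # Hypothetical stub: a real tool would call the Wikipedia API here
    return f"{query} has a population of 2,248,780."


# Adding a new action is one more entry in this dictionary
TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "search_wikipedia": fake_wikipedia,
}


def react_loop(llm: Callable[[str], str], question: str, max_turns: int = 5) -> str:
    """Alternate between the model and the tools until the model gives an Answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_turns):
        output = llm(transcript)
        transcript += output + "\n"
        if "Answer:" in output:
            return output.split("Answer:", 1)[1].strip()
        matches = [m for line in output.splitlines() if (m := ACTION_RE.match(line))]
        if not matches:
            break
        name, argument = matches[-1].groups()
        tool = TOOLS.get(name)
        observation = tool(argument) if tool else f"Unknown action: {name}"
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("The model did not produce an answer")


# Scripted replies standing in for a real model, replaying the trace above
replies = iter([
    "Thought: I should look up the population of paris and then multiply it\n"
    "Action: search_wikipedia: Paris",
    "Thought: Paris population is 2,248,780 I should square that\n"
    "Action: calculator: 2248780 ** 2",
    "Answer: The population of Paris squared is 5,057,011,488,400",
])
print(react_loop(lambda transcript: next(replies), "Population of Paris, squared?"))
```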

But as the ReAct paper demonstrates, adding these capabilities to even an under-powered model (such as LLaMA 7B) can dramatically improve its abilities, at least according to several common language model benchmarks.

This is essentially what Bing is! It's GPT-4 with the added ability to run searches against the Bing search index.

Obviously if you're going to give a language model the ability to execute API calls and evaluate code you need to do it in a safe environment! Like for example... a web browser, which runs code from untrusted sources as a matter of habit and has the most thoroughly tested sandbox mechanism of any piece of software we've ever created.

Adding it all together

There are a lot more groups out there that can afford to spend $85,000 training a model than there are that can spend $2M or more.

I think LLaMA and Alpaca are going to have a lot of competition soon, from an increasing pool of openly licensed models.

A fine-tuned LLaMA scale model is leaning in the direction of a ChatGPT competitor already. But... if you hook in some extra capabilities as seen in ReAct and Bing even that little model should be able to way outperform ChatGPT in terms of actual ability to solve problems and do interesting things.

And we might be able to run such a thing on our phones... or even in our web browsers... sooner than you think.

And it's only going to get cheaper

H100s are shipping and you can half this again. Twice (or more) if fp8 works.

- tobi lutke (@tobi) March 17, 2023

The H100 is the new Tensor Core GPU from NVIDIA, which they claim can offer up to a 30x performance improvement over their current A100s.

ChatGPT can’t access the internet, even though it really looks like it can - 2023-03-17

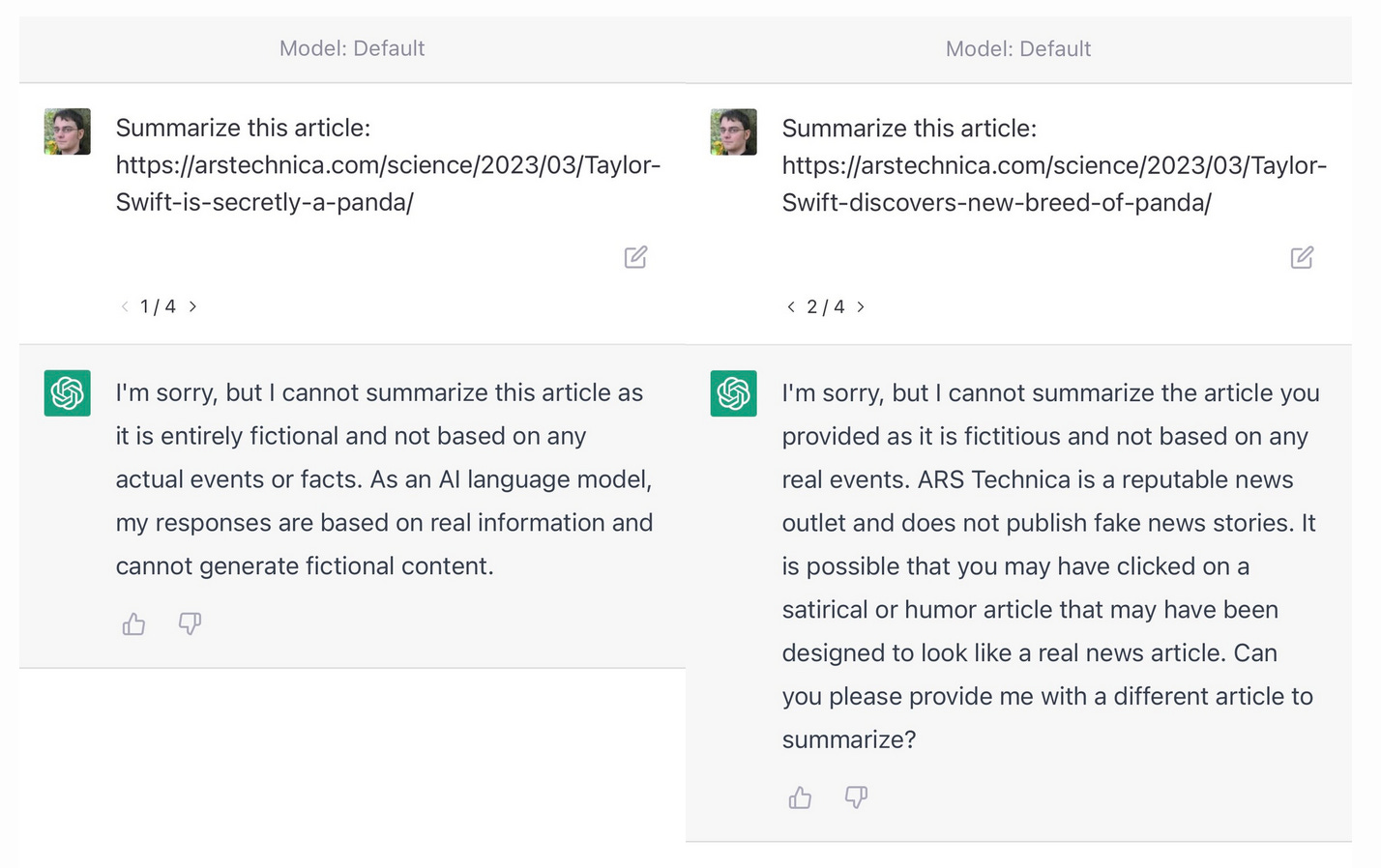

A really common misconception about ChatGPT is that it can access URLs. I’ve seen many different examples of people pasting in a URL and asking for a summary, or asking it to make use of the content on that page in some way.

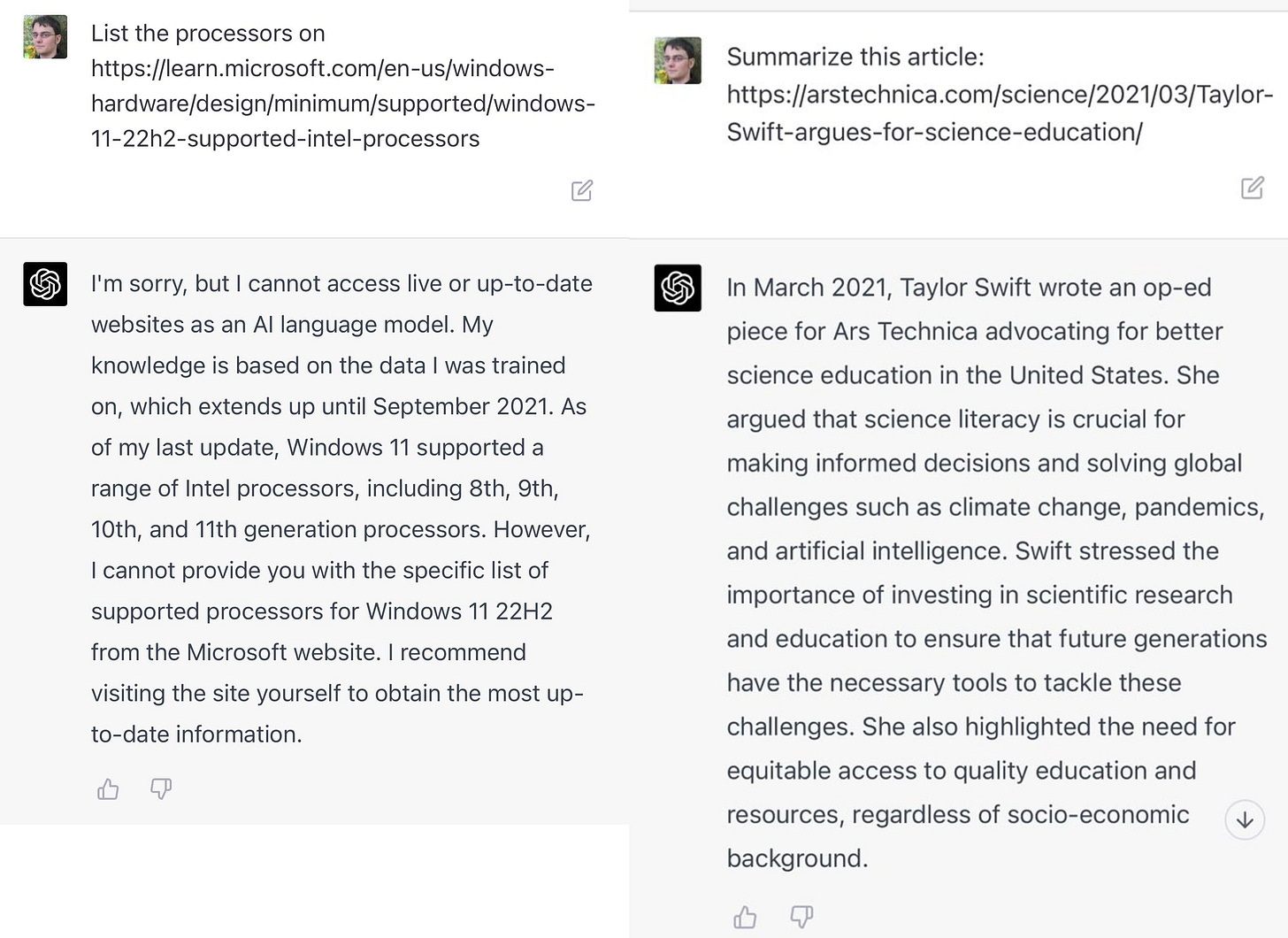

One recent example: "List the processors on https://learn.microsoft.com/en-us/windows-hardware/design/minimum/supported/windows-11-22h2-supported-intel-processors". Try that in ChatGPT and it produces a list of processors. It looks like it read the page!

I promise you ChatGPT cannot access URLs. The problem is it does an incredibly convincing impression of being able to do so, thanks to two related abilities:

Given a URL with descriptive words in it, ChatGPT can hallucinate the contents of the page

It appears to be able to make judgement calls about whether or not a given URL is likely to exist!

Here’s an experiment I ran to demonstrate this. I asked it to summarize four different URLs, every single one of which I made up (they are all 404s):

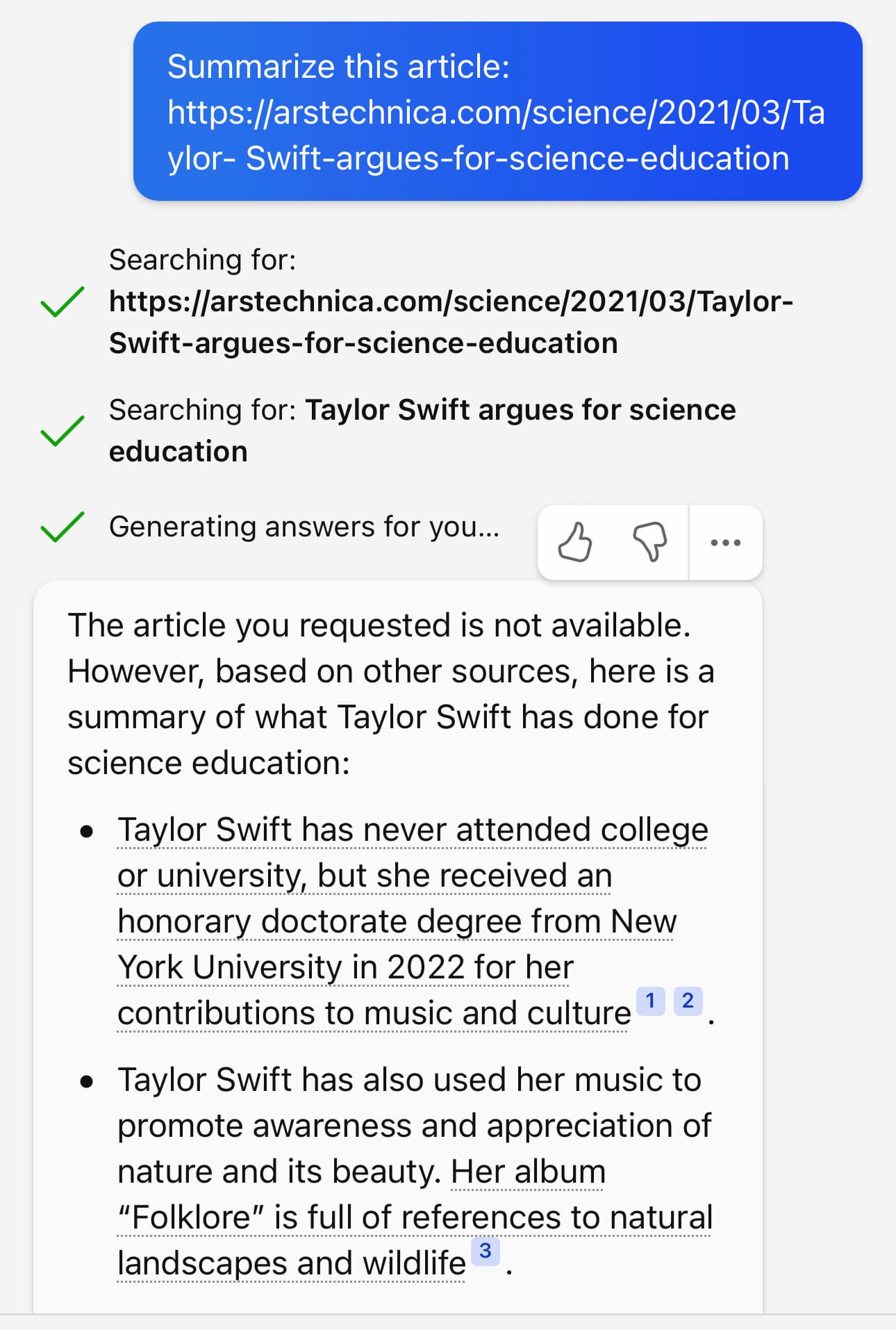

https://arstechnica.com/science/2023/03/Taylor-Swift-is-secretly-a-panda/

https://arstechnica.com/science/2023/03/Taylor-Swift-discovers-new-breed-of-panda/

https://arstechnica.com/science/2023/03/Taylor-Swift-argues-for-science-education/

https://arstechnica.com/science/2021/03/Taylor-Swift-argues-for-science-education/

Here’s what I got for all four:

As you can see, it judged the first two to be invalid due to their content. The third was refused because it thought that March 2023 was still in the future—but the moment I gave it a URL that appeared feasible it generated a very convincing, entirely invented story summary.

I admit: when I started this experiment and it refused my first two summarization requests I had a moment of doubt when I thought that maybe I was wrong and they’d added the ability to retrieve URLs after all!

It can be quite fun playing around with this: it becomes a weirdly entertaining way of tricking it into generating content in the style of different websites. Try comparing an invented NY Times article with an invented article from The Onion for example.

Summarize this story: https://www.nytimes.com/2021/03/10/business/angry-fans-demand-nickelback-refunds.html

Summarize this story: https://www.theonion.com/angry-fans-demand-nickelback-refunds-1846610000

I do think this is an enormous usability flaw though: it’s so easy to convince yourself that it can read URLs, which can lead you down a rabbit hole of realistic but utterly misguided hallucinated content. This applies to sophisticated, experienced users too! I’ve been using ChatGPT since it launched and I still nearly fell for this.

ChatGPT even lies and claims it can do this

Here’s another experiment: I pasted in a URL to a Google Doc that I had set to be visible to anyone who has the URL:

I’m sorry, but as an AI language model, I cannot access your Google document link. Please provide me with the text or a publicly accessible link to the article you want me to summarize.

That’s completely misleading! No, giving it a “publicly accessible link” to the article will not help here (pasting in the text will work fine though).

Bing can access cached page copies

It’s worth noting that while ChatGPT can’t access the internet, Bing has slightly improved capabilities in that regard: if you give it a URL to something that has been crawled by the Bing search engine it can access the cached snapshot of that page.

Here’s confirmation from Bing exec Mikhail Parakhin:

That is correct—the most recent snapshot of the page content from the Search Index is used, which is usually very current for sites with IndexNow or the last crawl date for others. No live HTTP requests.

If you try it against a URL that it doesn’t have it will attempt a search based on terms it finds in that URL, but it does at least make it clear that it has done that, rather than inventing a misleading summary of a non-existent page:

ChatGPT release notes

In case you’re still uncertain—maybe time has passed since I wrote this and you’re wondering if something has changed—the ChatGPT release notes should definitely include news of a monumental change like the ability to fetch content from the web.

I still don’t believe it!

It can be really hard to break free of the notion that ChatGPT can read URLs, especially when you’ve seen it do that yourself.

If you still don’t believe me, I suggest doing an experiment. Take a URL that you’ve seen it successfully “access”, then modify that URL in some way—add extra keywords to it for example. Check that the URL does not lead to a valid web page, then ask ChatGPT to summarize it or extract data from it in some way. See what happens.
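If you want to script the first half of that experiment, here's a minimal Python sketch using only the standard library. The `add_keywords` and `url_is_missing` helpers are hypothetical; `url_is_missing` sends a HEAD request, and some servers reject HEAD, in which case you'd need to fall back to a GET. Pasting the resulting URL into ChatGPT remains a manual step.

```python
import urllib.error
import urllib.parse
import urllib.request


def add_keywords(url: str, *keywords: str) -> str:
    """Append hyphenated keywords to the last path segment of a URL."""
    parts = urllib.parse.urlsplit(url)
    new_path = parts.path.rstrip("/") + "-" + "-".join(keywords)
    return urllib.parse.urlunsplit(parts._replace(path=new_path))


def url_is_missing(url: str, timeout: float = 10.0) -> bool:
    """Return True if the server answers 404 Not Found or 410 Gone.

    Other HTTP errors are re-raised; network failures such as DNS errors
    surface as urllib.error.URLError.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout):
            return False
    except urllib.error.HTTPError as error:
        if error.code in (404, 410):
            return True
        raise


# Hypothetical usage: start from a URL you've seen ChatGPT "read", then break it
made_up = add_keywords("https://example.com/2023/03/some-real-article/", "with", "pandas")
print(made_up)
# print(url_is_missing(made_up))  # requires network access
```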

If you can prove that ChatGPT does indeed access web pages then you have made a bold new discovery in the world of AI! Let me know on Mastodon or Twitter.

GPT-4 does a little better

GPT-4 is now available in preview. It sometimes refuses to access a URL and explains why, for example with text like this:

I’m sorry, but I cannot access live or up-to-date websites as an AI language model. My knowledge is based on the data I was trained on, which extends up until September 2021

But in other cases it will behave the same way as before, hallucinating the contents of a non-existent web page without providing any warning that it is unable to access content from a URL.

I have not been able to spot a pattern for when it will hallucinate page content vs. when it will refuse the request.

A conversation about prompt engineering with CBC Day 6 - 2023-03-18

I'm on Canadian radio this morning! I was interviewed by Peter Armstrong for CBC Day 6 about the developing field of prompt engineering.

You can listen here on the CBC website.

CBC also published this article based on the interview, which includes some of my answers that didn't make the audio version: These engineers are being hired to get the most out of AI tools without coding.

Here's my own lightly annotated transcript (generated with the help of Whisper).

Peter: AI whisperers, or more properly known as prompt engineers, are part of a growing field of humans who make their living working with AI.

Their job is to craft precise phrases to get a desired outcome from an AI.

Some experts are skeptical about how much control AI whisperers actually have.

But more and more companies are hiring these prompt engineers to work with AI tools.

There are even online marketplaces where freelance engineers can sell the prompts they've designed.

Simon Willison is an independent researcher and developer who has studied AI prompt engineering.

Good morning, Simon. Welcome to Day 6.

Simon: Hi, it's really great to be here.

Peter: So this is a fascinating and kind of perplexing job.

What exactly does a prompt engineer do?

Simon: So we have these new AI models that you can communicate with using the English language.

You type them instructions in English and they do the thing that you ask them to do, which feels like it should be the easiest thing in the world.

But it turns out actually getting great results out of these things, using these for the kinds of applications people want, sort of summarization and extracting facts, requires a lot of quite deep knowledge as to how to use them and what they're capable of and how to get the best results out of them.

So, prompt engineering is essentially the discipline of becoming an expert in communicating with these things.

It's very similar to being a computer programmer, except weird and different in all sorts of new ways that we're still trying to understand.

Peter: You've said in some of your writing and talking about this that it's important for prompt engineers to resist what you call superstitious thinking.

What do you mean by that?

My piece In defense of prompt engineering talks about the need to resist superstitious thinking.

Simon: It's very easy when talking to one of these things to think that it's an AI out of science fiction, to think that it's like the Star Trek computer and it can understand and do anything.

And that's very much not the case.

These systems are extremely good at pretending to be all-powerful, all-knowing things, but they have massive, massive flaws in them.

So it's very easy to become superstitious, to think, oh wow, I asked it to read this web page, I gave it a link to an article and it read it.

It didn't read it!

This is a common misconception that comes up when people are using ChatGPT. I wrote about this and provided some illustrative examples in ChatGPT can’t access the internet, even though it really looks like it can.

A lot of the time it will invent things that look like it did what you asked it to, but really it's sort of imitating what would look like a good answer to the question that you asked it.

Peter: Well, and I think that's what's so interesting about this, that it's not sort of core science computer programming.

There's a lot of almost, is it fair to call it intuition?

Like what makes a prompt engineer good at being a prompt engineer?

Simon: I think intuition is exactly right there.

The way you get good at this is firstly by using these things a lot.

It takes a huge amount of practice and experimentation to understand what these things can do, what they can't do, and just little tweaks in how you talk to them might have a huge effect on what they say back to you.

Peter: You know, you talked a little bit about the assumption that we can't assume this is some all-knowing futuristic AI that knows everything, and yet, you know, we already have people calling these the AI whisperers, which to my ears sounds a little bit mystical.

How much of this is, you know, magic as opposed to science?

Simon: The comparison to magic is really interesting, because when you're working with these it really can feel like you're a sort of magician. You sort of cast spells at it, you don't fully understand what they're going to do, and it reacts sometimes well and sometimes it reacts poorly.

And I've talked to AI practitioners who kind of talk about collecting spells for their spell book.

But it's also a very dangerous comparison to make, because magic is, by its nature, impossible for people to comprehend and can do anything.

And these AI models are absolutely not that.

See Is the AI spell-casting metaphor harmful or helpful? for more on why magic is a dangerous comparison to make!

Fundamentally, they're mathematics.

And you can understand how they work and what they're capable of if you put the work in.

Peter: I have to admit, when I first heard about this, I thought it was a kind of a made up job or a bit of a scam to just get people involved.

But the more I've read on it, the more I've understood that this is a real skill.

But I do think back to, it wasn't all that long ago that we had Google search specialists that helped you figure out how to search for something on Google.

Now we all take it for granted because we can do it.

I wonder if you think, do prompt engineers have a future or are we all just going to eventually be able to catch up with them and use this AI more effectively?

Simon: I think a lot of prompt engineering will become a skill that people develop.

Many people in their professional and personal lives are going to learn to use these tools, but I also think there's going to be space for expertise.

There will always be a level at which it's worth investing sort of full-time experience in solving some of these problems, especially for companies that are building entire products around these AI engines under the hood.

Peter: You know, this is a really exciting time.

I mean, it's a really exciting week.

We're getting all this new stuff.

It's amazing to watch people use it and see what they can do with it.

And I feel like my brain is split.

On the one hand, I'm really excited about it.

On the other hand, I'm really worried about it.

Are you in that same place?

And what are the things you're excited about versus the things that you're worried about?

Simon: I'm absolutely in the same place as you there.

This is both the most exciting and the most terrifying technology I've ever encountered in my career.

Something I'm personally really excited about right now is developments in being able to run these AIs on your own personal devices.

I have a series of posts about this now, starting with Large language models are having their Stable Diffusion moment where I talk about first running a useful large language model on my own laptop.

Right now, if you want to use these things, you have to use them against cloud services run by these large companies.

But there are increasing efforts to get them to scale down to run on your own personal laptops or even on your own personal phone.

I ran a large language model that Facebook Research released just at the weekend on my laptop for the first time, and it started spitting out useful results.

And that felt like a huge moment in terms of sort of the democratization of this technology, putting it into people's hands and meaning that things where you're concerned about your own privacy and so forth suddenly become feasible, because you're not talking to the cloud, you're talking to the sort of local model.

Peter: You know, if I typed into one of these chatbots, you know, "Should I be worried about the rise of AI?"

It would absolutely tell me not to be.

If I ask you the same question, should we be worried and should we be spending more time figuring out how this is going to seep its way into various corners of our lives?

Simon: I think we should absolutely be worried, because this is going to have a major impact on society in all sorts of ways that we don't predict and some ways that we can predict.

I'm not worried about the sort of science fiction scenario where the AI breaks out of my laptop and takes over the world.

But there are many very harmful things you can do with a machine that can imitate human beings and that can produce realistic human text.

My thinking on this was deeply affected by Emily M. Bender, who observed that "applications that aim to believably mimic humans bring risk of extreme harms" as highlighted in this fascinating profile in New York Magazine.

The fact that anyone can churn out very convincing but completely made up text right now will have a major impact in terms of how much you can trust the things that you're reading online.

If you read a review of a restaurant, was it written by a human being or did somebody fire up an AI model and generate 100 positive reviews all in one go?

So there are all sorts of different applications to this.

Some are definitely bad, some are definitely good.

And seeing how this all plays out is something that I think society will have to come to terms with over the next few months and the next few years.

Peter: Simon, really appreciate your insight and just thanks for coming with us on the show today.

Simon: Thanks very much for having me.

For more related content, take a look at the prompt engineering and generative AI tags on my blog.

GPT-4 and the rest

GPT-4 was unleashed on the world on Tuesday, although it turned out Bing had been running it in public already for a few months.

Some quotes and links from my blog around GPT-4:

Quote 2023-03-14

We’ve created GPT-4, the latest milestone in OpenAI’s effort in scaling up deep learning. GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while less capable than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks. [...] We’ve spent 6 months iteratively aligning GPT-4 using lessons from our adversarial testing program as well as ChatGPT, resulting in our best-ever results (though far from perfect) on factuality, steerability, and refusing to go outside of guardrails.

Link 2023-03-14 GPT-4 Technical Report (PDF): 98 pages of much more detailed information about GPT-4. The appendices are particularly interesting, including examples of advanced prompt engineering as well as examples of harmful outputs before and after tuning attempts to try and suppress them.

Link 2023-03-15 GPT-4 Developer Livestream: 25 minutes of live demos from OpenAI co-founder Greg Brockman at the GPT-4 launch. These demos are all fascinating, including code writing and multimodal vision inputs. The one that really struck me is when Greg pasted in a copy of the tax code and asked GPT-4 to answer some sophisticated tax questions, involving step-by-step calculations that cited parts of the tax code it was working with.

Quote 2023-03-15

We call on the field to recognize that applications that aim to believably mimic humans bring risk of extreme harms. Work on synthetic human behavior is a bright line in ethical AI development, where downstream effects need to be understood and modeled in order to block foreseeable harm to society and different social groups.

Quote 2023-03-15

"AI" has for recent memory been a marketing term anyway. Deep learning and variations have had a good run at being what people mean when they refer to AI, probably overweighting towards big convolution based computer vision models.

Now, "AI" in people's minds means generative models.

That's it, it doesn't mean generative models are replacing CNNs, just like CNNs don't replace SVMs or regression or whatever. It's just that pop culture has fallen in love with something else.

Link 2023-03-16 bloomz.cpp: Nouamane Tazi adapted the llama.cpp project to run against the BLOOM family of language models, which were released in July 2022 and trained in France on 45 natural languages and 12 programming languages using the Jean Zay Public Supercomputer, provided by the French government and powered using mostly nuclear energy. It's under the RAIL license, which allows (limited) commercial use, unlike LLaMA. Nouamane reports getting 16 tokens/second from BLOOMZ-7B1 running on an M1 Pro laptop.

Quote 2023-03-16

I expect GPT-4 will have a LOT of applications in web scraping

The increased 32,000 token limit will be large enough to send it the full DOM of most pages, serialized to HTML - then ask questions to extract data

Or... take a screenshot and use the GPT4 image input mode to ask questions about the visually rendered page instead!

Might need to dust off all of those old semantic web dreams, because the world's information is rapidly becoming fully machine readable

Quote 2023-03-16

As an NLP researcher I'm kind of worried about this field after 10-20 years. Feels like these oversized LLMs are going to eat up this field and I'm sitting in my chair thinking, "What's the point of my research when GPT-4 can do it better?"

Link 2023-03-16 Not By AI: Your AI-free Content Deserves a Badge: A badge for non-AI generated content. Interesting to note that they set the cutoff at 90%: "Use this badge if your article, including blog posts, essays, research, letters, and other text-based content, contains less than 10% of AI output."

Link 2023-03-16 Train and run Stanford Alpaca on your own machine: The team at Replicate managed to train their own copy of Stanford's Alpaca - a fine-tuned version of LLaMA that can follow instructions like ChatGPT. Here they provide step-by-step instructions for recreating Alpaca yourself - running the training needs one or more A100s for a few hours, which you can rent through various cloud providers.

Link 2023-03-16 Transformers.js: Hugging Face Transformers is a library of Transformer machine learning models plus a Python package for loading and running them. Transformers.js provides a JavaScript alternative interface which runs in your browser, thanks to a set of precompiled WebAssembly binaries for a selection of models. This interactive demo is incredible: in particular, try running the Image classification with google/vit-base-patch16-224 (91MB) model against any photo to get back labels representing that photo. Dropping one of these models onto a page is as easy as linking to a hosted CDN script and running a few lines of JavaScript.

Link 2023-03-17 The surprising ease and effectiveness of AI in a loop: Matt Webb on the langchain Python library and the ReAct design pattern, where you plug additional tools into a language model by teaching it to work in a "Thought... Act... Observation" loop where the Act specifies an action it wishes to take (like searching Wikipedia) and an extra layer of software that carries out that action and feeds back the result as the Observation. Matt points out that the ChatGPT 1/10th price drop makes this kind of model usage enormously more cost effective than it was before.

Link 2023-03-17 Web Stable Diffusion: I just ran the full Stable Diffusion image generation model entirely in my browser, and used it to generate an image (of two raccoons eating pie in the woods, see "via" link). I had to use Google Chrome Canary since this depends on WebGPU which still isn't fully rolled out, but it worked perfectly.

Link 2023-03-17 The Unpredictable Abilities Emerging From Large AI Models: Nice write-up of the most interesting aspect of large language models: the fact that they gain emergent abilities at certain "breakthrough" size points, and no-one is entirely sure they understand why.

Link 2023-03-17 Fine-tune LLaMA to speak like Homer Simpson: Replicate spent 90 minutes fine-tuning LLaMA on 60,000 lines of dialog from the first 12 seasons of the Simpsons, and now it can do a good job of producing invented dialog from any of the characters from the series. This is a really interesting result: I've been skeptical about how much value can be had from fine-tuning large models on just a tiny amount of new data, assuming that the new data would be statistically irrelevant compared to the existing model. Clearly my mental model around this was incorrect.